Every transaction, login, and data exchange is now part of an invisible race between financial institutions trying to protect value and fraud networks using automation, deepfakes, and AI to erode it. In this race, speed and context decide outcomes: whoever interprets intent faster wins.

Instead of reacting to static patterns, AI fraud prevention systems for banks learn continuously from behavioural, transactional, and network data. These systems detect anomalies, explain decisions, and adapt to new threats in real time. If your organisation is exploring how to build or validate such a system, our team can help. Request a 90-day AI Fraud POC plan to see how an enterprise-grade AI fraud architecture can deliver operational resilience and business impact.

Why banks need AI-driven fraud prevention now

The global financial system lost more than $480 billion to fraud in 2024, and losses are expected to climb further as digital payments dominate retail and cross-border transactions. Each innovation, such as contactless cards, real-time payments, and open APIs, creates new opportunities for exploitation when it is not backed by secure fintech software development. These threats operate simultaneously across cards, deposits, credit, and digital channels. Manual or post-event detection is no longer viable in a continuous, cross-channel environment.

A second reason is the rigidity of legacy rule-based systems. They were designed for predictable fraud: fixed patterns, repeatable behaviours, and human review cycles. Today’s adversaries exploit that rigidity.

Updating rules requires human intervention, and each delay allows fraud to evolve unchecked. AI services for banking, by contrast, adjust in near real time, learning from new patterns as they emerge. Legacy workflows also rely on overnight or hourly batch processing, so in instant payment systems, fraud can be executed and cleared before a rule fires. AI closes this gap through real-time scoring and decisioning.

Rules evaluate single transactions in isolation. They cannot see that a device, IP, and beneficiary are connected across multiple accounts. Without relational context, rule-based systems miss coordinated fraud and over-penalise outliers.

The result is a paradox: high cost, low accuracy, and growing exposure.

In economic terms, AI in business applications transforms fraud prevention from a cost centre into a revenue-protecting function, simultaneously reducing losses, operational overhead, and attrition caused by poor customer experience.

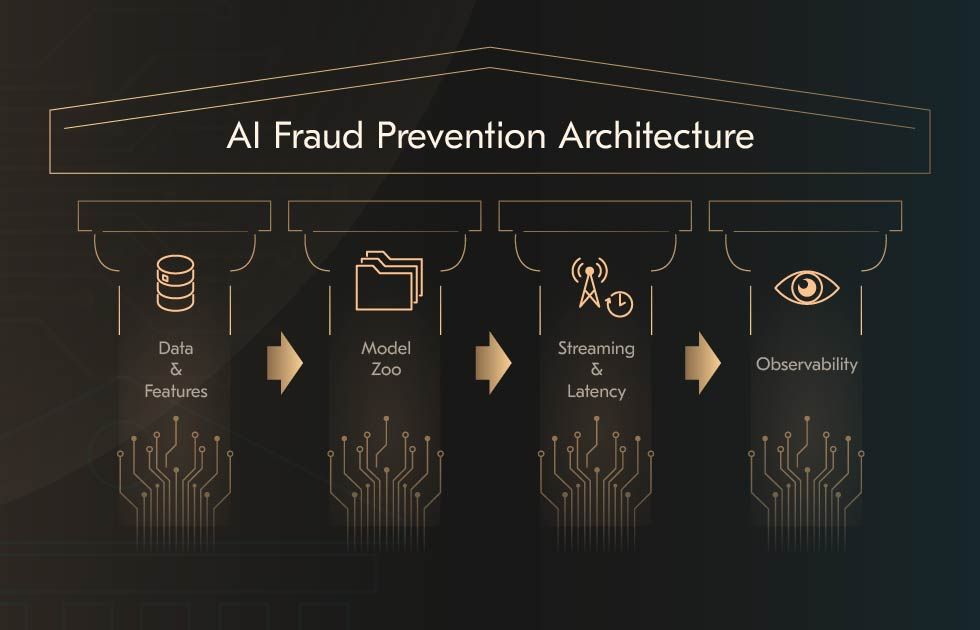

What the architecture for AI fraud prevention in banks looks like

Behind every successful fraud prevention program lies an architecture that processes billions of signals, interprets subtle behaviour patterns, and makes risk decisions in real time. This system is a living architecture built on four interdependent pillars: data pipelines that capture context, models that adapt in real time, infrastructure that delivers instant responses, and observability frameworks that guarantee resilience and trust.

1. Data and features

AI-driven fraud prevention starts where traditional systems stop: at the data foundation. Instead of static transaction logs, modern architectures consume and interpret multimodal, streaming data. The core signals that power AI-based fraud detection in banking include:

Transactional intelligence. Temporal and velocity-based features uncover abnormal bursts of activity or micro-fraud patterns invisible to manual review.

Device and session identity. When multiple accounts interact from the same device or network, graph algorithms uncover relationships that rule-based systems miss.

Behavioural analytics. AI models can track typing cadence, screen behaviour, and navigation flow during banking sessions to detect fraud.

Geospatial and environmental context. AI engines flag anomalies such as impossible travel patterns, unfamiliar time zones, or remote IPs associated with known high-risk regions.

KYC and document data. At the onboarding level, document verification models authenticate IDs, proof of address, and biometric data.
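As an illustration of the transactional velocity features listed above, here is a minimal sketch, assuming events arrive in timestamp order per account and using a hypothetical 10-minute window. The `VelocityTracker` class and its feature names are illustrative, not part of any specific product.

```python
from collections import deque


class VelocityTracker:
    """Sliding-window velocity features for a single account (illustrative helper)."""

    def __init__(self, window_seconds: float = 600.0) -> None:
        self.window_seconds = window_seconds  # assumed 10-minute window
        self._events = deque()  # (timestamp, amount) pairs currently inside the window
        self._total = 0.0

    def update(self, timestamp: float, amount: float) -> dict:
        """Add a transaction and return features for the window (timestamp - window, timestamp]."""
        self._events.append((timestamp, amount))
        self._total += amount
        # Evict transactions that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self._events and self._events[0][0] <= cutoff:
            _, old_amount = self._events.popleft()
            self._total -= old_amount
        count = len(self._events)
        return {
            "txn_count": count,
            "txn_sum": round(self._total, 2),
            "avg_amount": round(self._total / count, 2),
        }


tracker = VelocityTracker()
for ts, amt in [(0, 50.0), (60, 20.0), (120, 30.0)]:
    features = tracker.update(ts, amt)
print(features)  # a burst of three payments within two minutes
```

A deque keeps each update cheap, because only expired events at the front of the window are touched.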

2. Model zoo

Modern systems rely on a curated ensemble of algorithms optimised for specific fraud typologies and data modalities. Supervised learners perform well on structured financial data: they learn from historical fraud cases where the boundary between legitimate and malicious behaviour lies. Deep learning architectures excel at sequential data, capturing time-dependent changes in user behaviour as patterns evolve. Unsupervised models are indispensable for zero-day fraud: they learn what “normal” looks like and flag deviations that suggest novel tactics, which makes them useful against fast-moving schemes such as social engineering or instant payment fraud.
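To show how outputs from the zoo can be combined, here is a minimal sketch with two stand-ins. A logistic scorer with hypothetical pre-trained weights plays the supervised learner. A per-feature z-score baseline replaces a production unsupervised model such as an isolation forest or autoencoder. The sequential deep-learning component is omitted, and the blend weight is an assumption.

```python
import math
from statistics import mean, pstdev


def supervised_score(features: dict, weights: dict, bias: float) -> float:
    """Numerically stable logistic score from a supervised model (weights are hypothetical)."""
    z = bias + sum(weights[name] * value for name, value in features.items() if name in weights)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class ZScoreBaseline:
    """Unsupervised stand-in: learns what 'normal' looks like from legitimate traffic."""

    def __init__(self, normal_rows: list) -> None:
        self.stats = {}
        for name in normal_rows[0]:
            values = [row[name] for row in normal_rows]
            # Guard against zero variance so constant features do not divide by zero.
            self.stats[name] = (mean(values), pstdev(values) or 1.0)

    def score(self, row: dict) -> float:
        """Squash the largest absolute z-score into [0, 1); higher means more unusual."""
        max_z = max(abs(row[name] - mu) / sd for name, (mu, sd) in self.stats.items())
        return 1.0 - math.exp(-max_z / 3.0)


def ensemble_score(supervised: float, unsupervised: float, w_supervised: float = 0.7) -> float:
    """Weighted blend of model outputs; the 0.7 weight is an illustrative assumption."""
    return w_supervised * supervised + (1.0 - w_supervised) * unsupervised


normal = [{"amount": a, "hour": h} for a, h in [(40, 10), (55, 12), (48, 14), (60, 11), (52, 13)]]
baseline = ZScoreBaseline(normal)
weights = {"amount": 0.004, "hour": -0.05}  # hypothetical coefficients
event = {"amount": 900, "hour": 3}
risk = ensemble_score(supervised_score(event, weights, bias=-2.0), baseline.score(event))
print(round(risk, 3))
```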

In one banking partnership, Acropolium implemented a real-time scoring engine processing millions of events per hour with sub-second latency, behaviour analytics, and graph-based detection across all channels. This case illustrates how the model zoo, streaming design, and feature store must operate in tandem for a scalable production system.

3. Streaming and latency

A detection that arrives seconds late is already irrelevant. The architecture must therefore guarantee real-time scoring that is fast enough to block fraudulent activity before authorisation completes. Here’s how data moves through the system:

Event streaming. Every digital interaction flows through streaming frameworks, whose event queues make each event immediately available for scoring and analytics.

Model serving. Microservices deploy models as independent endpoints, each optimised for latency and scalability.

Feature retrieval. Pre-engineered features are fetched in real time from low-latency stores, eliminating expensive database joins or API calls at decision time.
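The request path can be sketched as a single scoring function. The sketch assumes an in-memory dict as a stand-in for a low-latency feature store, a toy model, and illustrative thresholds and latency budget. In production, a dedicated store fed by the streaming layer would replace the dict. `FEATURE_STORE`, `toy_model`, `score_event`, and the 50 ms budget are all hypothetical.

```python
import time
from typing import Callable

# Hypothetical in-memory stand-in for a low-latency feature store, populated upstream by stream processing.
FEATURE_STORE = {
    "acct-1": {"avg_amount_30d": 50.0, "txn_count_10m": 1},
}


def toy_model(features: dict) -> float:
    """Placeholder model: risk grows with the amount relative to the 30-day average."""
    ratio = features["amount"] / (10.0 * features.get("avg_amount_30d", 100.0))
    return min(1.0, ratio)


def score_event(event: dict, feature_store: dict, model: Callable[[dict], float],
                budget_ms: float = 50.0) -> dict:
    start = time.perf_counter()
    # A single key lookup replaces database joins or API calls on the hot path.
    features = {**feature_store.get(event["account_id"], {}), "amount": event["amount"]}
    risk = model(features)
    if risk >= 0.8:
        decision = "block"
    elif risk >= 0.5:
        decision = "review"
    else:
        decision = "approve"
    latency_ms = (time.perf_counter() - start) * 1000.0
    return {"decision": decision, "risk": round(risk, 3), "latency_ms": latency_ms,
            "within_budget": latency_ms <= budget_ms}


print(score_event({"account_id": "acct-1", "amount": 600.0}, FEATURE_STORE, toy_model))
```

Recording `within_budget` next to each decision lets the latency SLO be monitored from the same log stream as the scores.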

4. Observability

Observability closes the loop between detection, learning, and compliance. Data drift can silently erode model performance, so continuous drift detection monitors shifts in live data and triggers retraining workflows when thresholds are breached. Some models require daily incremental updates; others undergo full retraining quarterly or upon regulatory review. Every dataset entering the pipeline passes through schema validation, completeness checks, and outlier filtering.
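One common way to quantify drift is the Population Stability Index (PSI), which compares the live distribution of a feature with its training-time reference. The sketch below bins values across the reference range and flags retraining above 0.25. That cut-off is a widely used rule of thumb, not a regulatory threshold. The function names and bin count are illustrative.

```python
import math


def population_stability_index(reference: list, live: list, bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum((live_i - ref_i) * ln(live_i / ref_i)) over bins defined on the reference data."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def proportions(values: list) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # out-of-range values go to the edge bins
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def needs_retraining(psi_value: float, threshold: float = 0.25) -> bool:
    return psi_value > threshold


reference = [i / 100 for i in range(1000)]
shifted = [v + 5 for v in reference]  # simulated upward shift in a feature
psi_value = population_stability_index(reference, shifted)
print(round(psi_value, 2), needs_retraining(psi_value))
```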

How to reduce false positives with graph and behavioural analytics

Reducing false positives is one of the most persistent and costly challenges in fraud prevention. Every unnecessary alert increases operational workload, frustrates legitimate customers, and erodes trust in digital channels.

Feature examples

Modern fraud models expand beyond point-in-time attributes. They operate on relational depth and behavioural continuity, constructing dynamic profiles of accounts, devices, and counterparties.

Graph-native features that materially reduce false positives include:

Relationship mapping. Tracking how accounts, devices, beneficiaries, merchants, and digital identities interact over time. Stable, long-tenured relationships often carry implicit trust signals; sudden new links or transactional convergence patterns do not.

Unusual connectivity. Fraud rings often show high-volume, multi-edge connectivity through shared devices, IPs, or beneficiaries; genuine high-volume users typically have diversified, established networks.

Flow direction and sequence. Legitimate flows show predictable cadence and geographic logic; laundering and mule networks reveal circularity, rapid relay patterns, and saturation points.
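Shared-entity links like these are often grouped with a union-find (disjoint-set) structure: any two accounts that share a device, IP, or beneficiary end up in the same connected component. The minimal sketch below uses hypothetical account and entity identifiers. Production graph pipelines typically add time windows and edge weights.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path halving."""

    def __init__(self) -> None:
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b) -> None:
        root_a, root_b = self.find(a), self.find(b)
        if root_a != root_b:
            self.parent[root_b] = root_a


def shared_entity_clusters(links) -> list:
    """links: (account_id, entity) pairs, where entity is e.g. ("device", "d1")."""
    uf = UnionFind()
    first_account_for_entity = {}
    for account, entity in links:
        uf.find(account)  # register the account even if it shares nothing
        if entity in first_account_for_entity:
            uf.union(first_account_for_entity[entity], account)
        else:
            first_account_for_entity[entity] = account
    clusters = defaultdict(set)
    for account in list(uf.parent):
        clusters[uf.find(account)].add(account)
    # Largest clusters first: these are candidates for ring or mule-network review.
    return sorted((sorted(members) for members in clusters.values()), key=len, reverse=True)


# Hypothetical links extracted from session and payment logs.
links = [
    ("A1", ("device", "d1")), ("A2", ("device", "d1")),
    ("A2", ("ip", "10.0.0.7")), ("A3", ("ip", "10.0.0.7")),
    ("A4", ("device", "d9")),
]
print(shared_entity_clusters(links))
```

A1 and A3 never share an entity directly, yet they land in one cluster through A2. Rule-based checks on single transactions miss this kind of transitive link.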

Behavioural analytics models how each user typically behaves and flags meaningful deviations:

Behavioural baselines. AI continuously learns from transaction history, login timing, device usage, and navigation patterns to establish a behavioural signature for each user.

Micro-patterns in interaction. Subtle cues such as keystroke rhythm and mouse movement form a behavioural biometric that is difficult to fake.

Anomaly scoring. Statistical techniques quantify deviations from standard patterns across multiple variables.

Geospatial consistency. Location-based features identify improbable travel speeds or inconsistent geolocations.
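The impossible-travel check reduces to great-circle distance divided by elapsed time. This sketch uses the haversine formula with a mean Earth radius of 6,371 km and an assumed ceiling of 900 km/h (roughly airliner cruising speed). The threshold and function names are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0         # mean Earth radius
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumed ceiling, roughly airliner cruising speed


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev: tuple, curr: tuple, max_speed_kmh: float = MAX_PLAUSIBLE_SPEED_KMH) -> tuple:
    """prev and curr are (latitude, longitude, unix_seconds); returns (flag, implied speed in km/h)."""
    distance = haversine_km(prev[0], prev[1], curr[0], curr[1])
    hours = max((curr[2] - prev[2]) / 3600.0, 1e-9)  # avoid division by zero for simultaneous events
    speed = distance / hours
    return speed > max_speed_kmh, round(speed, 1)


london_login = (51.5074, -0.1278, 0)
new_york_payment = (40.7128, -74.0060, 3600)  # one hour later
print(is_impossible_travel(london_login, new_york_payment))
```

IP-based geolocation is coarse, so a real deployment would likely add a minimum distance or a location-confidence check before flagging.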

Case patterns

The integration of relational and behavioural analytics is particularly effective against fraud that spans multiple accounts or evolves over time. Mule accounts and coordinated ring networks are built to launder stolen funds or facilitate authorised push payment fraud. Once clusters are identified, AI assigns composite risk scores to the group rather than evaluating each account independently.
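Group-level scoring can be sketched as follows, assuming each account already has an individual risk score and that clusters come from an earlier linking step. A noisy-OR combination gives the cluster score: the probability that at least one member is fraudulent, assuming the member scores are independent. Each member then inherits a discounted share of that score. The 0.8 propagation factor is hypothetical.

```python
import math


def cluster_risk(member_scores: dict, propagation: float = 0.8) -> dict:
    """Return a composite cluster score and propagated per-account scores."""
    # Noisy-OR: 1 - product of (1 - p_i), treating member scores as independent probabilities.
    group = 1.0 - math.prod(1.0 - p for p in member_scores.values())
    # Each account keeps the higher of its own score and a discounted share of the group score.
    adjusted = {acct: round(max(p, propagation * group), 4) for acct, p in member_scores.items()}
    return {"cluster_score": round(group, 4), "member_scores": adjusted}


print(cluster_risk({"A1": 0.5, "A2": 0.5, "A3": 0.1}))
```

Noisy-OR rises with cluster size, so many moderately risky accounts can push a ring above the alert threshold. That is the intent for ring detection, but the propagation factor would need calibration against historical cases.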

In account takeover, systems equipped with behavioural biometrics detect subtle inconsistencies (a slower typing rhythm, irregular mouse movement, or a new device signature) long before the fraudster executes a transaction.

How should banks handle fraud alerts and customer interactions safely?

Modern banking environments demand real-time, context-aware alerting. The quality of a fraud prevention system depends on how and when customers are notified. Fraud detection using AI in banking prioritises communication pathways dynamically based on customer preferences, risk level, and the likelihood of successful intervention. AI systems continuously monitor behavioural and transactional signals to trigger alerts within milliseconds. When an anomaly surfaces, the system must prioritise delivery channels based on urgency, device availability, and regulatory constraints:

In-app and push notifications are the first line of communication. They reach authenticated users instantly within the digital banking environment and allow secure, two-way interactions.

SMS or messaging channels provide redundancy when the user is offline or unauthenticated.

Voice and IVR systems remain critical for high-risk or urgent scenarios. During calls, AI-powered voice analytics detect stress patterns, hesitation, or social-engineering cues.

Chatbots and NLP interfaces act as trusted assistants. They analyse natural language across chat, email, and text to identify suspicious content.
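The channel prioritisation described above can be expressed as a small routing function. This sketch assumes a hypothetical `CustomerContext` with session and contact details, plus illustrative risk thresholds. Real routing would also apply customer preferences and jurisdiction-specific rules such as contact-hour restrictions.

```python
from dataclasses import dataclass

SMS_BACKUP_THRESHOLD = 0.7  # illustrative threshold
VOICE_THRESHOLD = 0.9       # illustrative threshold


@dataclass
class CustomerContext:
    app_session_active: bool  # customer is authenticated in the app right now
    push_enabled: bool        # push notifications allowed on a registered device
    has_verified_phone: bool  # phone number verified for SMS and voice


def choose_alert_channels(risk_score: float, ctx: CustomerContext) -> list:
    """Return alert channels in priority order (hypothetical routing policy)."""
    channels = []
    if ctx.app_session_active or ctx.push_enabled:
        channels.append("in_app_push")  # first line: authenticated and two-way
    if ctx.has_verified_phone and (not ctx.app_session_active or risk_score >= SMS_BACKUP_THRESHOLD):
        channels.append("sms")  # redundancy when the customer is offline, or extra reach for elevated risk
    if ctx.has_verified_phone and risk_score >= VOICE_THRESHOLD:
        channels.append("voice")  # high-risk or urgent cases
    return channels or ["secure_inbox"]  # fallback when no real-time channel is available


print(choose_alert_channels(0.95, CustomerContext(True, True, True)))
```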

Adaptive step-up authentication

AI introduces a shift from static security policies to adaptive, risk-based authentication. Instead of applying uniform verification for every user or transaction, the system evaluates contextual risk in real time and requests additional evidence only when anomalies warrant it.

This precision in applying friction relies on continuous behavioural baselining. When deviations occur, the AI system classifies the event’s risk level and decides whether to trigger step-up authentication.
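Here is a minimal sketch of risk-based step-up, assuming the upstream model returns a calibrated risk score in [0, 1]. The thresholds and the example step-up factors are illustrative assumptions, not values from any standard.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"
    BLOCK = "block"


def authentication_action(risk_score: float, step_up_at: float = 0.4, block_at: float = 0.85) -> Action:
    """Add friction only when contextual risk warrants it (thresholds are hypothetical)."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be in [0, 1]")
    if risk_score >= block_at:
        return Action.BLOCK    # hold the transaction and alert the customer
    if risk_score >= step_up_at:
        return Action.STEP_UP  # e.g. biometric confirmation or one-time passcode
    return Action.ALLOW        # consistent with the behavioural baseline: no extra friction


for score in (0.1, 0.55, 0.92):
    print(score, authentication_action(score).value)
```

In practice, the two thresholds are the main tuning lever. They trade prevented fraud against the share of legitimate customers who see a challenge.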

Explainability in communication: what and how to explain to the customer

When a transaction is blocked or delayed, users expect an immediate, intelligible reason. The solution lies in controlled transparency, explaining outcomes in a human-readable way without revealing proprietary detection mechanisms. Modern systems powered by Explainable AI provide structured, compliant narratives for every decision.

This approach achieves three outcomes simultaneously:

Customer reassurance. Clear explanations reduce anxiety and strengthen trust in digital channels.

Operational efficiency. Clear justifications resolve most disputes without escalation, lowering call-centre load and investigation costs.

Audit readiness. Every decision explanation is archived, providing regulators with a traceable record.
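Controlled transparency can be implemented as an allow-list that maps internal decision drivers to pre-approved, customer-safe wording, while the full internal record is archived for audit. The driver names and messages below are hypothetical. Any driver without approved wording, such as a graph-cluster signal, falls back to a generic message, so detection logic is never revealed.

```python
import json
from datetime import datetime, timezone

# Hypothetical allow-list: internal driver name -> pre-approved customer wording.
CUSTOMER_MESSAGES = {
    "new_device": "We noticed activity from a device we have not seen on your account before.",
    "unusual_amount": "This payment is larger than your usual spending.",
    "new_payee": "This is your first payment to this recipient.",
    "location_mismatch": "This activity came from a location we did not expect.",
}
GENERIC_MESSAGE = "We paused this payment for a quick security check to keep your account safe."


def customer_explanation(top_drivers: list, max_reasons: int = 2) -> list:
    """Translate ranked internal drivers into at most `max_reasons` customer-safe reasons."""
    reasons = [CUSTOMER_MESSAGES[d] for d in top_drivers if d in CUSTOMER_MESSAGES]
    # Drivers without approved wording (e.g. graph or model internals) are never shown.
    return reasons[:max_reasons] or [GENERIC_MESSAGE]


def audit_entry(decision_id: str, top_drivers: list, messages: list) -> str:
    """Archive internal drivers and customer-facing text together as one JSON record."""
    return json.dumps({
        "decision_id": decision_id,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "internal_drivers": top_drivers,
        "customer_messages": messages,
    })


drivers = ["mule_cluster_link", "new_payee", "unusual_amount", "new_device"]
messages = customer_explanation(drivers)
print(messages)
print(audit_entry("txn-123", drivers, messages))
```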

What compliance, security, and model risk management controls are needed?

Key requirements of GDPR/UK GDPR, PCI DSS, AML/KYC, PSD2 SCA

GDPR and UK GDPR require lawful and transparent processing of personal data. In fraud prevention, this means embedding privacy by design into every stage of model development: personally identifiable information (PII) should be minimised and masked or pseudonymised before training or scoring wherever the use case allows. PCI DSS focuses on protecting payment card data. For AI fraud systems, compliance begins with data tokenisation and continues through encrypted data transfer, secure storage, and strict access control.
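Here is a minimal sketch of keyed tokenisation and display masking that uses only the Python standard library. HMAC-SHA256 produces a deterministic token, so the same card or customer identifier can still be joined across datasets without exposing the raw value. This is pseudonymisation, so under GDPR the tokens remain personal data. Key storage and rotation, for example in a secrets vault, are assumed and not shown.

```python
import hashlib
import hmac
import secrets


def tokenise(value: str, key: bytes) -> str:
    """Deterministic keyed token: identical inputs map to identical tokens under the same key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_pan(pan: str) -> str:
    """Display form that keeps only the last four digits of a card number."""
    digits = "".join(ch for ch in pan if ch.isdigit())
    return "*" * (len(digits) - 4) + digits[-4:]


key = secrets.token_bytes(32)  # assumption: in production this is fetched from a managed secrets store
record = {"pan": "4111 1111 1111 1111", "amount": 120.0}  # standard test card number
safe_record = {
    "pan_token": tokenise(record["pan"].replace(" ", ""), key),
    "pan_display": mask_pan(record["pan"]),
    "amount": record["amount"],
}
print(safe_record["pan_display"])
```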

AML and KYC regulations demand continuous monitoring of customer identities and transactions. AI verifies ID documents through computer vision, detects inconsistencies, and automatically flags patterns consistent with money laundering or mule account behaviour. Under PSD2, Strong Customer Authentication requires at least two independent authentication factors when a customer initiates an electronic payment, unless an exemption applies; transaction risk analysis is one such exemption for low-risk remote payments. AI models enable this risk-based approach by calculating a real-time risk score for each transaction based on device, geolocation, and behavioural context.

Model risk management and explainability

At the core is Explainable AI (XAI). Each decision must be traceable to the specific variables and risk factors that influenced it. Modern institutions implement interpretability layers using attribution methods, such as SHAP values, that decompose a model’s output and reveal which features drove the risk score.
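A worked example makes this concrete. For a logistic model with independent features, the SHAP value of feature i on the log-odds scale has the closed form `w_i * (x_i - E[x_i])`. That makes it a convenient illustration of what an interpretability layer produces. The weights, means, and feature names below are hypothetical. Tree ensembles and neural networks need dedicated explainers.

```python
def linear_attributions(weights: dict, x: dict, feature_means: dict) -> list:
    """Per-feature contributions to the log-odds, largest absolute impact first."""
    contributions = {name: w * (x[name] - feature_means[name]) for name, w in weights.items()}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


def log_odds(weights: dict, bias: float, x: dict) -> float:
    return bias + sum(w * x[name] for name, w in weights.items())


# Hypothetical model parameters and training-set feature means.
weights = {"amount_z": 1.2, "new_device": 2.0, "tenure_years": -0.3}
means = {"amount_z": 0.0, "new_device": 0.1, "tenure_years": 5.0}
event = {"amount_z": 2.5, "new_device": 1.0, "tenure_years": 1.0}
bias = -4.0

attributions = linear_attributions(weights, event, means)
# Additivity check: baseline log-odds plus all contributions equals the model's log-odds.
baseline = log_odds(weights, bias, means)
assert abs(baseline + sum(c for _, c in attributions) - log_odds(weights, bias, event)) < 1e-9
print(attributions)
```

The additivity property checked in the code is what makes such attributions auditable: each risk score decomposes exactly into a baseline plus named feature effects.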

Equally critical is comprehensive documentation. Every version of a model, dataset, and decision rule must be logged and version-controlled. Leading banks now automate this through MLOps pipelines that capture real-time configuration changes, retraining intervals, and validation outcomes. Bias mitigation completes this cycle. Regular fairness audits check for demographic or geographic bias in model outputs.
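A fairness audit often starts by comparing false positive rates across customer segments, because a model that wrongly flags one region or demographic more often imposes unequal friction. This sketch computes per-group false positive rates and a max/min disparity ratio. The 1.25 review threshold is an assumption (the reciprocal of the familiar four-fifths rule), not a regulatory requirement, and the sample data is invented.

```python
from collections import defaultdict


def false_positive_rates(records) -> dict:
    """records: iterable of (group, is_fraud, was_flagged) with booleans or 0/1 values."""
    flagged_legit = defaultdict(int)
    legit = defaultdict(int)
    for group, is_fraud, was_flagged in records:
        if not is_fraud:
            legit[group] += 1
            if was_flagged:
                flagged_legit[group] += 1
    return {group: flagged_legit[group] / n for group, n in legit.items()}


def disparity_ratio(rates: dict) -> float:
    """Highest group FPR divided by the lowest."""
    lowest, highest = min(rates.values()), max(rates.values())
    if lowest == 0:
        return 1.0 if highest == 0 else float("inf")
    return round(highest / lowest, 4)


# Invented sample: 10 legitimate transactions per region.
sample = ([("region_a", 0, 0)] * 9 + [("region_a", 0, 1)]
          + [("region_b", 0, 0)] * 7 + [("region_b", 0, 1)] * 3)
rates = false_positive_rates(sample)
ratio = disparity_ratio(rates)
print(rates, ratio, "review" if ratio > 1.25 else "ok")  # 1.25 threshold is an assumption
```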

Data protection

Data protection underpins every layer of fraud prevention from model training to live decisioning. The foundation lies in PII minimisation and anonymisation. Personal identifiers are tokenised or masked before they enter any analytic workflow.

Security continues with encryption and secrets management. All data in transit or at rest must be encrypted using modern protocols. Sensitive credentials are stored in dedicated secret vaults with automatic rotation policies. No human should handle raw credentials, and no unencrypted data should move through production pipelines.

Access must be governed through role-based controls and continuous authentication. AI systems must be hardened against data poisoning, model inversion, and adversarial attacks, with monitoring layers that detect anomalies in data streams or feature distributions.

How to measure the impact of AI in banking fraud detection

Measuring impact requires a framework that spans technical quality, business outcomes, and unit economics.

Technical metrics

Technical metrics define the system’s analytical quality and real-time reliability. In fraud detection, precision and recall are the core indicators of model credibility.

Precision measures the proportion of flagged transactions that are genuinely fraudulent.

Recall quantifies the share of actual fraud correctly identified. A recall above 0.9 means the system catches more than 90% of actual fraud cases, including many of the rare, low-value, or stealthy incidents that legacy rules typically miss.

Latency measures the time between transaction initiation and risk score generation.

Alert delivery rate reflects the volume and relevance of fraud alerts. Continuous tuning of model thresholds and adaptive calibration keeps alert volumes sustainable and analyst workload predictable.
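These technical metrics can be computed directly from confusion-matrix counts and raw latency samples. This sketch uses the nearest-rank definition of a percentile for p99 latency. The counts and latencies in the example are invented for illustration.

```python
import math


def detection_metrics(tp: int, fp: int, fn: int) -> dict:
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of flagged items that were fraud
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of fraud that was flagged
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": round(precision, 3), "recall": round(recall, 3), "f1": round(f1, 3)}


def percentile_nearest_rank(samples: list, pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


print(detection_metrics(tp=90, fp=30, fn=10))  # invented counts
latencies_ms = [12, 15, 11, 14, 80, 13, 16, 12, 18, 14]  # invented samples
print(percentile_nearest_rank(latencies_ms, 99))
```

Tail percentiles such as p99 matter more than averages here: one slow outlier in ten samples sets the p99, which is the latency a customer at the tail actually experiences.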

Business metrics

While technical metrics quantify system capability, business metrics express measurable financial savings, efficiency, and customer experience outcomes.

Prevented loss is the cornerstone metric, representing the monetary value of fraud intercepted before financial damage occurs. Well-calibrated systems can cut aggregate fraud losses. According to McKinsey, fraud detection using AI in banking can reduce financial fraud losses by up to 50%.

False positive rate directly influences customer experience and operational cost. Every unnecessary block or alert adds manual review time and erodes user trust.

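Deflection and friction reduction capture the customer-side benefit of AI precision: fewer unnecessary step-up challenges, fewer declined legitimate payments, and fewer fraud-related calls to the contact centre.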

Cost-to-serve tracks fraud operations overhead, which falls as manual reviews and chargeback processes decrease and investigation times shorten.
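Here is a minimal sketch for comparing business metrics between the legacy setup and the AI system over the same period. The `PeriodStats` fields, the per-review cost, and all figures are hypothetical inputs. Prevented loss is treated simply as the value of fraud stopped before settlement.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    fraud_value_stopped: float  # fraud intercepted before financial damage
    legit_transactions: int
    false_positives: int        # legitimate transactions blocked or alerted
    manual_reviews: int


def compare(baseline: PeriodStats, candidate: PeriodStats, cost_per_review: float) -> dict:
    def fpr(stats: PeriodStats) -> float:
        return stats.false_positives / stats.legit_transactions

    return {
        "additional_prevented_loss": candidate.fraud_value_stopped - baseline.fraud_value_stopped,
        "fpr_before": round(fpr(baseline), 4),
        "fpr_after": round(fpr(candidate), 4),
        "review_cost_saving": (baseline.manual_reviews - candidate.manual_reviews) * cost_per_review,
    }


# Hypothetical figures for one quarter.
legacy = PeriodStats(fraud_value_stopped=400_000.0, legit_transactions=1_000_000,
                     false_positives=12_000, manual_reviews=15_000)
ai = PeriodStats(fraud_value_stopped=650_000.0, legit_transactions=1_000_000,
                 false_positives=4_000, manual_reviews=6_000)
print(compare(legacy, ai, cost_per_review=8.0))
```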

Unit economics

Financial performance evaluation must extend beyond model accuracy to include lifecycle economics.

Total cost of ownership includes the entire lifecycle: data integration, infrastructure, licensing or cloud usage, continuous retraining, and model governance.

Time-to-value measures how quickly the system generates tangible benefits. The first measurable loss reduction in well-structured projects appears within 90–120 days, particularly once real-time scoring is fully activated.

Payback period typically ranges from 12 to 18 months, depending on transaction volume and fraud typology complexity.

Sensitivity analysis then tests whether the business case holds when key assumptions, such as fraud volume, detection rate, or running costs, change.

Example ROI model: scenario analysis

| Metric / Scenario | Conservative | Expected | Aggressive |
|---|---|---|---|
| Target F1-score | ≥ 0.80 | ≥ 0.90 | ≥ 0.99 |
| Customer experience impact | Moderate improvement in trust | Significant improvement, seamless RBA | Market-leading CX, measurable loyalty uplift |
| Payback period | 18–24 months | 12–15 months | 9–12 months |
| Estimated ROI | 1.5×–2× | 3×–4× | 5×+, depending on scale |
| Relative TCO investment | Low: standard infrastructure, limited automation | Medium: dedicated data science team and cloud optimisation | High: GPU clusters, advanced analytics, full automation |
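The scenario logic behind a table like this can be reproduced with a few lines of arithmetic. The inputs below (annual fraud losses, loss reduction, operational savings, and costs) are purely hypothetical and are not the figures behind the table. Varying `loss_reduction` is a simple form of the sensitivity analysis described earlier.

```python
import math


def scenario(annual_fraud_loss: float, loss_reduction: float, annual_ops_savings: float,
             upfront_cost: float, annual_run_cost: float, years: int = 3) -> dict:
    annual_benefit = annual_fraud_loss * loss_reduction + annual_ops_savings
    annual_net = annual_benefit - annual_run_cost
    # Months until cumulative net benefit covers the upfront investment.
    payback = math.ceil(upfront_cost * 12 / annual_net) if annual_net > 0 else None
    # ROI multiple here = gross benefit over the horizon / total cost over the horizon.
    roi_multiple = annual_benefit * years / (upfront_cost + annual_run_cost * years)
    return {"payback_months": payback, "roi_multiple": round(roi_multiple, 2)}


# Hypothetical inputs, not taken from the table above.
base = dict(annual_fraud_loss=10_000_000, annual_ops_savings=1_000_000,
            upfront_cost=3_000_000, annual_run_cost=1_000_000)
for label, reduction in [("conservative", 0.2), ("expected", 0.3), ("aggressive", 0.4)]:
    print(label, scenario(loss_reduction=reduction, **base))
```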

Build vs buy: how to choose the approach and operating model

The first consideration in any AI fraud prevention initiative is architectural independence. Commercial fraud platforms, offered by major cloud providers and fintech vendors, accelerate time to value by providing pre-trained models, rule orchestration layers, and APIs for integration with payment gateways. However, these platforms can introduce vendor lock-in, particularly when proprietary feature stores, data schemas, or model monitoring tools cannot be ported across clouds.

Custom-built systems, on the other hand, allow institutions to tailor every layer, from ingestion and feature engineering to explainability and risk scoring. They also enable seamless integration with existing KYC, AML, and case management systems, eliminating silos between fraud, compliance, and customer-support functions.

Team and process structure

Implementing an advanced system without a well-defined operating model leads to operational blind spots, inconsistent investigations, and delayed responses. A mature operating structure defines accountability across data, model, and response layers. The operating model revolves around a RACI matrix (Responsible, Accountable, Consulted, Informed), which assigns responsibility and oversight across key domains:

Data governance: ownership of data lineage, quality controls, and access management.

Model lifecycle: accountability for model design, validation, and monitoring.

Operations and response: who acts when a fraud alert or model failure occurs.

Importance of SLA and support

Sustaining an AI-driven fraud prevention system requires service reliability and transparent measurement frameworks comparable to those used in mission-critical financial systems. Service Level Agreements and Service Level Objectives define how performance, accuracy, and reliability are monitored and governed.

Key SLOs typically include:

Model performance metrics: minimum thresholds for precision, recall, and false positive rate aligned with agreed business KPIs.

Latency and throughput: transaction scoring must consistently operate below predefined time limits.

Data pipeline uptime: streaming and feature store services availability above 99.9%.

Retraining cadence and response time: maximum duration between drift detection and retraining completion.
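SLOs like these lend themselves to an automated check. In this sketch, only the 99.9% pipeline uptime comes from the list above. Every other threshold, the metric names, and the `SLO_TARGETS` structure are illustrative assumptions to be replaced with contractually agreed values.

```python
# Illustrative targets: only the 99.9% uptime figure comes from the SLO list in the text.
SLO_TARGETS = {
    "precision": ("min", 0.80),
    "recall": ("min", 0.90),
    "false_positive_rate": ("max", 0.02),
    "p99_latency_ms": ("max", 100.0),
    "pipeline_uptime_pct": ("min", 99.9),
    "drift_to_retrain_hours": ("max", 72.0),
}


def slo_breaches(observed: dict, targets: dict = SLO_TARGETS) -> list:
    """Return a human-readable line for every missing or out-of-bounds metric."""
    breaches = []
    for metric, (kind, threshold) in targets.items():
        value = observed.get(metric)
        if value is None:
            breaches.append(f"{metric}: missing")
        elif (kind == "min" and value < threshold) or (kind == "max" and value > threshold):
            breaches.append(f"{metric}: {value} breaches {kind} {threshold}")
    return breaches


# Hypothetical weekly measurements.
weekly = {"precision": 0.86, "recall": 0.93, "false_positive_rate": 0.012,
          "p99_latency_ms": 140.0, "pipeline_uptime_pct": 99.95, "drift_to_retrain_hours": 30.0}
print(slo_breaches(weekly))
```

Treating a missing metric as a breach is a deliberate choice: a silent monitoring gap is itself a reliability failure.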

Implementation roadmap of AI fraud detection

Below is a one-year roadmap for designing large-scale, compliant, and ROI-driven AI fraud prevention in banking.

0–90 days: Discovery and data readiness

The first quarter establishes the foundation: clarity of objectives, data quality, and model feasibility. The process begins with discovery and baseline assessment, where the institution performs a detailed analysis of the last 12–24 months of fraud incidents. With pain points mapped, the focus shifts to data readiness and feature engineering. High-performing fraud detection using AI in banking depends on rich, trustworthy data spanning transaction histories, device telemetry, customer demographics, geolocation trails, and behavioural signals.

Once data pipelines stabilise, the institution proceeds to the Proof of Concept (PoC). Here, multiple models are trained on historical data to compare detection lift against the legacy rule engine. Cross-validation and AUC-PR are used to validate model generalisation.
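AUC-PR is commonly estimated as average precision: rank transactions by score, then average the precision measured at each true fraud case. The sketch below implements that definition in plain Python and compares a hypothetical model with a hypothetical rule engine on a tiny labelled set. Tied scores keep their input order here, which libraries may handle differently.

```python
def average_precision(labels: list, scores: list) -> float:
    """Mean of precision@k taken at the rank k of each true fraud case."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    total_fraud = sum(labels)
    if total_fraud == 0:
        return 0.0
    hits, precision_sum = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / total_fraud


# Hypothetical historical sample: 1 = confirmed fraud.
labels = [1, 0, 1, 0, 0, 1]
model_scores = [0.95, 0.20, 0.85, 0.10, 0.40, 0.70]
rule_scores = [3, 2, 1, 0, 4, 5]  # e.g. number of legacy rules fired (illustrative)

model_ap = average_precision(labels, model_scores)
rules_ap = average_precision(labels, rule_scores)
print(round(model_ap, 3), round(rules_ap, 3), "lift:", round(model_ap / rules_ap, 2))
```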

90–180 days: Pilot in production and monitoring

With a proven PoC, the next 90 days transition from research to operational reality. The phase starts with a pilot deployment in a controlled environment. The AI model runs parallel to existing fraud rules, scoring real-time transactions. This allows teams to fine-tune latency, evaluate throughput, and verify compatibility with the bank’s transaction systems and core payment rails. Integration of risk-based authentication ensures that legitimate customers face minimal friction; additional verification is triggered only when a transaction exceeds a calculated risk threshold.

The deployment quickly transitions into continuous monitoring and model management, where real-time dashboards provide visibility into key indicators: detection rate, approval rate, false positive volume, and estimated prevented losses.

180–365 days: Scaling, multi-channel integration, optimisation

In the final six months, the initiative transitions from pilot to enterprise-scale adoption. The first step is scaling and architectural optimisation. Models proven during the pilot are moved into full production across all payment channels. In parallel, the system evolves toward multi-channel and behavioural expansion. A unified decision layer begins correlating risk signals from all customer touchpoints so the system can recognise when a fraud journey starts in one channel and concludes in another.

The maturity phase concludes with governance and collaborative intelligence. Explainability frameworks are integrated into formal model governance under the EU AI Act and UK GDPR provisions, ensuring each model version is traceable and auditable.

Get started with AI fraud prevention implementation

Every transaction now carries a signal, not just a risk. The banks capable of reading those signals in real time define the new standard of digital trust. Fraud detection using AI in banking prevents direct monetary losses, optimises operational costs, preserves brand integrity, and sustains customer confidence. Banks implementing mature AI systems report measurable improvements in detection accuracy, auditability, and cost-to-serve. All of this can be achieved without compromising compliance with GDPR, PSD2 SCA, AML, or KYC obligations.

For banking organisations considering this transition, the most effective path begins with a focused proof of concept. A structured PoC plan allows your teams to validate data readiness, benchmark models on historical fraud cases, and quantify business impact through precision, recall, and avoided loss. Request a 90-day AI fraud POC plan to see how a modern, explainable, real-time architecture performs on your data.
