Organizations now automate more of their operations, but the tools available serve very different purposes. RPA works best when a process is stable, predictable, and entirely rule-based, such as copying data between systems or processing invoices. Chatbots are suitable when the interaction is conversational and the goal is to answer straightforward questions or route users efficiently. AI agents become necessary when the workflow requires interpretation, coordination across systems, and decisions that depend on context rather than predefined logic.

The shift from RPA to chatbots to agentic AI usually happens when a process moves from executing steps, to communicating information, to resolving an outcome end-to-end. If your organization is assessing where to automate next, the decision often comes down to how much variability the process has, whether judgment is required, and how many systems are involved. A structured audit can clarify which areas are appropriate for RPA, which should remain conversational, and where AI agents can provide measurable gains in efficiency and resolution quality.

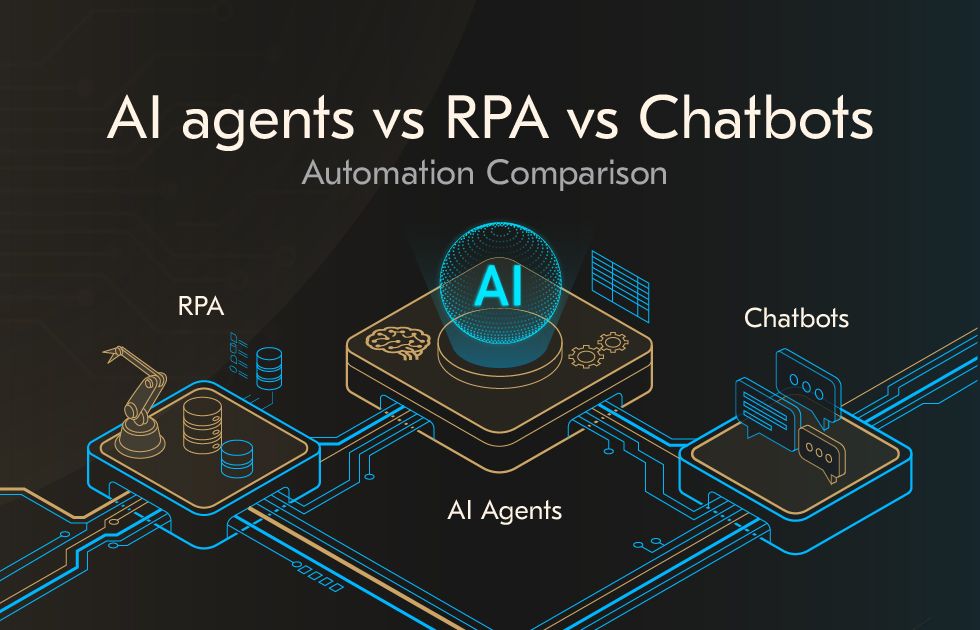

What do AI agents, RPA, and chatbots mean?

What is robotic process automation?

RPA automates repetitive, structured tasks by mimicking user interactions in software systems. It follows explicit step-by-step instructions and relies on deterministic rules, executing the same sequence reliably each time.

What are chatbots?

Chatbots automate conversation. They respond to user queries using scripted flows or intent recognition and are effective when the goal is to provide quick, consistent answers to straightforward questions. They operate within a narrow contextual window and typically do not retain memory across interactions. A chatbot may confirm that a customer qualifies for a refund, but it cannot execute the refund, update the ledger, and notify accounting. It informs; it does not resolve.

What are AI agents?

AI agents handle goal-driven, multi-step work that requires understanding context, interpreting information, making decisions, and taking action across systems. They combine a reasoning model (typically an LLM) with access to tools, memory, and policies. This combination allows the agent to break down the goal into steps, call APIs, read documents, update records, and verify outcomes. Unlike an LLM chat interface, an agent has agency: the ability to plan, act, and adjust its approach based on feedback.

The more a process requires interpretation, variation handling, and coordination across systems, the closer it moves to agent territory. The more a process is fixed, structured, and stable, the more it belongs to RPA and chatbots.

Where is the line between RPA, chatbots and AI agents?

The distinction between RPA, chatbots, and AI agents becomes clear when examining how each system handles variability, context, decision-making, and cross-system execution. The separation is based on the type of work being automated: whether it is repetitive and rule-driven, conversational and guided, or cognitive and outcome-oriented. The more a workflow requires interpreting unstructured data, reasoning under uncertainty, and coordinating across multiple applications, the further it moves along the spectrum toward agent-based automation.

| Criterion | RPA | Chatbots | AI agents |
|---|---|---|---|
| Task type | Repetitive, clearly defined steps | Structured or semi-structured conversation | Multi-step goal completion with reasoning |
| Environment variability | Low; stable UI and rules required | Low to moderate; relies on predefined intents | High; able to adapt to changing conditions and incomplete inputs |
| Decision-making | None; deterministic workflow | Limited; intent classification and scripted responses | Contextual reasoning and policy-bounded judgment |
| System integration | Often UI-driven; APIs optional | Typically limited to one or two data sources | Native multi-system orchestration via APIs and tool calls |
| Total cost of ownership | High maintenance when environments shift | Low for narrow scopes; escalates with complexity | Higher initial setup, lower ongoing maintenance due to learning and adaptability |
| Deployment speed | Several months (process mapping, scripting, testing) | Days to weeks for narrow cases | Days to weeks when policies, guardrails, and integration points are in place |
| Security and compliance | Strong audit trails; predictable behavior | Suitable for low-risk or informational tasks | Requires RBAC, policy enforcement, logging, and controlled execution |
| Observability and control | Fully deterministic behavior, easy to trace | Interaction logs only; limited transparency | Requires continuous monitoring, governance, and auditable execution records |

The distinction between agentic AI vs RPA becomes clearer when mapped across two dimensions: process stability and cognitive complexity.

Processes that are stable, repeatable, and rely on structured inputs align naturally with deterministic automation such as RPA.

As the cognitive demands of a task increase, automation must incorporate conversational understanding or reasoning capability, shifting toward chatbots or agentic AI.

At the lowest end of cognitive complexity, RPA is most appropriate. It reliably executes repeatable tasks where the sequence of actions and data structures do not vary, such as form processing or system reconciliation. Its strength lies in precision and traceability, but it cannot interpret context or adapt if the workflow changes.

Chatbots occupy the space where the task is primarily conversational and the goal is to deliver quick, consistent responses to common questions. They are well-suited to structured, narrow domains such as resetting passwords, providing order statuses, or answering FAQs. Chatbots handle dialogue, not reasoning or execution across systems.

As cognitive complexity increases and workflows require understanding unstructured information or deciding how to proceed, hybrid models emerge: chatbots augmented with APIs, document understanding, or workflow triggers. At the upper end of cognitive complexity and coordination requirements are AI agents. They are designed to interpret context, plan multi-step actions, and execute tasks across multiple systems. They use goal-oriented reasoning models. As a result, agentic AI can automate workflows that were previously resistant to RPA.

What are the enterprise use cases for AI agents?

The value of agents becomes visible when workflows require interpretation, coordination, and action. The following domains illustrate where agents can be introduced in a focused, controlled way to address real operational constraints.

IT automation

In IT operations, incidents rarely follow identical patterns, and the underlying cause is often inferred from logs, metrics, and recent changes. For incident management, an agent can collect diagnostic data from observability platforms, compare the current system state against standard patterns, and present a recommendation or initiate corrective action when the risk is low.

Configuration autocorrection follows a similar pattern: the agent monitors configuration drift, identifies mismatches between expected and applied settings, and either corrects them or routes them for approval. The expected outcomes in these scenarios include reduced time to resolution, fewer repetitive tickets, improved consistency in change handling, and less manual coordination across teams.

Operational processes

Processing a claim, evaluating a loan request, or completing an onboarding workflow requires reading documents, validating details, gathering information from multiple internal systems, and taking action in the correct order. An agent can form a plan for these sequences, interpret documents using language understanding rather than fixed templates, and complete each step while adhering to policy.

In multi-system orchestration, an agent coordinates interactions across platforms that were not originally designed to interoperate. For example, in a transportation and logistics environment, AI-driven orchestration has been applied to route planning, carrier management, and exception handling, improving cycle-time reliability and reducing manual intervention across dispatch, billing, and load-tracking systems.

Revenue operations

Agents can reduce friction in revenue workflows by maintaining context about the customer across systems and applying reasoning rather than scripted logic. In data enrichment, an agent can aggregate information from CRM, product usage telemetry, support history, and external sources, creating a more complete and current view of the customer. During proposal preparation, the agent can draft content, align offers with prior interactions, and ensure that internal pricing and approval rules are adhered to.

Task coordination across CRM, CPQ, and billing systems benefits from the agent’s ability to update multiple systems in sequence, track dependencies, and identify missing data before it causes a downstream failure. According to McKinsey, AI-driven personalization can increase revenue by 5–8% and improve customer satisfaction by 15–20%.

When to stay with RPA or chatbots?

Not every process benefits from autonomous agents. In many organizations, RPA and chatbots continue to deliver reliable, efficient results when the work is predictable and the inputs are well-defined.

When to choose RPA

RPA excels in environments where workflows are stable and data is structured. Its strength lies in deterministic execution: given the same input, the same output is always produced.

RPA is most suitable when the user interfaces involved are stable and unlikely to change frequently. Because RPA interacts directly with screens or form fields, even minor UI adjustments may require reconfiguration. In organizations where systems are mature and interfaces remain steady, RPA can operate for long periods with minimal intervention. Tasks such as invoice indexing, data synchronization between well-defined systems, and routine record updates benefit from this predictability.

When to choose AI chatbots

Chatbots provide value when the objective is to offer quick, consistent access to information rather than to complete multi-step tasks. They are effective in situations where a user’s request can be resolved within the scope of predefined responses or structured conversation flows.

Many customer inquiries fall into this category: checking order status, understanding policy conditions, navigating account settings, or locating support resources. Chatbots can operate without integration into backend systems, relying instead on knowledge bases or static data sources. When a process does not require execution within enterprise applications, a chatbot can provide a clear, efficient pathway without the operational overhead of agent-based automation.

Where the objective is routing, a chatbot can provide structure without requiring reasoning. If complexity increases or the situation involves judgment, escalation to a human or to an agent workflow can be layered on top rather than replacing the chatbot itself.

In other words:

When the work is repetitive, rule-based, and tied to stable interfaces, RPA should remain in place.

When the task is conversational and limited to providing information or triaging requests, a chatbot is sufficient.

Only when the workflow involves interpretation, multi-step reasoning, and actions across multiple systems does an agent become the appropriate choice.

How to choose between RPA vs AI agents vs chatbots: Decision framework

Choosing the appropriate automation approach requires clarity on how a process behaves, what data it relies on, and how decisions are made within it. RPA and chatbots remain effective when the workflow is predictable and the inputs are well-structured. AI agents are most useful where the process involves interpretation, multi-step decisions, or interaction across multiple systems. The goal of the decision framework is to determine where each approach contributes meaningful value and where introducing autonomy can improve operational performance.

The following questions help assess the suitability of each automation method. The intention is to evaluate characteristics of the process, rather than the capabilities of the tools themselves.

How variable is the process from case to case?

Does the workflow span multiple systems or data sources?

Is there API access to the systems involved, or only UI interaction?

How structured is the input data (forms and fields vs. emails, documents, or messages)?

Does the task require judgment, contextual interpretation, or exception handling?

Are there regulatory or audit requirements that require traceable decision outputs?

What level of escalation is acceptable if the task cannot be completed autonomously?

Are there confidentiality or data segmentation considerations that must be enforced?

What are the acceptable latency and responsiveness expectations?

What is the budget tolerance for ongoing maintenance rather than only deployment?

Clear answers to these questions reduce uncertainty and make the trade-offs visible. Where variability is low and inputs are structured, RPA remains suitable. Where the main requirement is conversational access to information, chatbots are sufficient. Where interpretation, planning, and adaptive action are needed, agents provide an advantage.

A practical dividing line emerges when evaluating task requirements: If a workflow requires multi-step reasoning and coordinated actions across two or more systems, and the available data includes unstructured or incomplete elements, an AI agent is likely the more effective approach.
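The dividing line above can be written down as a small rule set. The following is a minimal sketch, not a definitive scoring model: the `ProcessProfile` fields and the `recommend_automation` function are hypothetical names that condense the assessment questions into a few flags, and anything that does not match a clear pattern is returned as `"review"` for human assessment.

```python
from dataclasses import dataclass


@dataclass
class ProcessProfile:
    """Hypothetical answers to the assessment questions for one workflow."""
    needs_multistep_reasoning: bool   # judgment, interpretation, exception handling
    systems_involved: int             # number of systems or data sources touched
    has_unstructured_input: bool      # emails, documents, free-form messages
    is_conversational: bool           # main goal is answering or routing questions
    requires_system_action: bool      # must write to enterprise applications


def recommend_automation(p: ProcessProfile) -> str:
    """Return 'agent', 'chatbot', 'rpa', or 'review' for borderline cases."""
    # Agent territory: reasoning + two or more systems + unstructured or incomplete data.
    if p.needs_multistep_reasoning and p.systems_involved >= 2 and p.has_unstructured_input:
        return "agent"
    # Conversational access to information without backend execution.
    if p.is_conversational and not p.requires_system_action:
        return "chatbot"
    # Stable, structured, rule-based work.
    if not p.needs_multistep_reasoning and not p.has_unstructured_input:
        return "rpa"
    # Mixed signals: leave the decision to a process owner.
    return "review"


claims = ProcessProfile(True, 3, True, False, True)
faq = ProcessProfile(False, 1, False, True, False)
invoices = ProcessProfile(False, 2, False, False, True)
print(recommend_automation(claims), recommend_automation(faq), recommend_automation(invoices))
```

Keeping an explicit `"review"` outcome avoids forcing every process into one of the three categories when the answers point in different directions.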

How to evaluate Total Cost of Ownership of RPA vs AI

Cost considerations extend beyond initial deployment. A sustainable automation model must account for how the environment changes over time and how often maintenance is required.

Key components in TCO evaluation include:

Licensing and execution: RPA typically involves per-bot license fees and orchestrator costs; AI agents may involve model usage or subscription fees tied to interaction volume.

Infrastructure and implementation: RPA may require extensive development for each workflow. AI agents require integration and access configuration, but often less workflow scripting.

Support and maintenance: RPA incurs ongoing costs whenever interfaces or business rules change. Agents require tuning and feedback rather than full redevelopment.

Environmental variability: RPA may become brittle when interfaces change; agents are designed to adapt to variation within defined policy constraints.

Cost of errors and recovery: Both RPA and agents require escalation pathways. Escalation and rework costs correlate strongly with customer satisfaction and operational reliability.

Taken together, TCO is not determined solely by licensing but also by the cost of sustaining the solution as processes evolve. The more variability and interpretation a task requires, the more cost-effective it becomes to introduce autonomy rather than increase the volume of fixed-rule automation.
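The components above can be combined into a simple comparison over a chosen time horizon. This is an illustrative sketch only: the `CostModel` fields are an assumed simplification of the components listed, and every figure is a placeholder rather than a benchmark.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Illustrative cost inputs; every figure passed in is a placeholder."""
    setup: float               # one-time implementation cost
    annual_run: float          # licences, model usage, infrastructure per year
    cost_per_change: float     # rework cost each time a UI or business rule changes
    changes_per_year: float    # expected number of such changes
    annual_error_cost: float   # escalation and rework caused by failures


def total_cost(m: CostModel, years: int) -> float:
    """Sum one-time and recurring costs over the evaluation horizon."""
    yearly = m.annual_run + m.cost_per_change * m.changes_per_year + m.annual_error_cost
    return m.setup + years * yearly


# Placeholder numbers chosen only to show how variability shifts the comparison.
rpa = CostModel(setup=40_000, annual_run=15_000, cost_per_change=5_000,
                changes_per_year=6, annual_error_cost=4_000)
agent = CostModel(setup=70_000, annual_run=25_000, cost_per_change=1_000,
                  changes_per_year=6, annual_error_cost=6_000)

for years in (1, 3):
    print(years, total_cost(rpa, years), total_cost(agent, years))
```

With these placeholder inputs the agent is more expensive in the first year and cheaper by the third, because the cost of each change dominates over time. With few changes per year the comparison tilts back toward RPA.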

What are the architecture and integrations of RPA vs AI?

Shifting from scripted automation to agent-based systems requires a change in how logic, data access, and oversight are structured. Unlike RPA or conventional chatbots, which rely on predefined sequences or intent-response patterns, AI agents operate through layered reasoning and adaptive execution. Their architecture is designed to plan, coordinate actions across systems, and adjust based on context. To deploy them effectively, the AI integration approach and governance controls must be explicit, transparent, and aligned with operational boundaries.

Goals and plans

At the top layer, the agent interprets the objective and determines how to achieve it. Rather than following a fixed set of steps, it forms a plan by breaking a high-level instruction into discrete actions and evaluating conditions as it proceeds. This planning and execution loop enables the agent to handle variation without needing new scripts or workflows each time circumstances change. RPA and chatbots, in contrast, rely on deterministic logic or predefined branching patterns and do not adapt when the environment or inputs shift unexpectedly.
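A planning and execution loop of this kind can be sketched in a few lines. In the example below, `plan` is a hypothetical stand-in for the reasoning model (a real agent would call an LLM at that point), and `fetch_order` and `issue_refund` are stubbed actions invented for illustration.

```python
from typing import Callable


def plan(goal: str, state: dict) -> list[str]:
    """Hypothetical planner: return the remaining steps given the current state."""
    steps = []
    if "order" not in state:
        steps.append("fetch_order")
    if state.get("order", {}).get("eligible") and not state.get("refunded"):
        steps.append("issue_refund")
    return steps


def fetch_order(state: dict) -> None:
    state["order"] = {"id": "A-1", "eligible": True}   # stubbed system lookup


def issue_refund(state: dict) -> None:
    state["refunded"] = True                            # stubbed system write


ACTIONS: dict[str, Callable[[dict], None]] = {
    "fetch_order": fetch_order,
    "issue_refund": issue_refund,
}


def run(goal: str, max_iterations: int = 5) -> dict:
    """Re-plan after every action until no steps remain or the budget runs out."""
    state: dict = {"trace": []}
    for _ in range(max_iterations):
        steps = plan(goal, state)
        if not steps:
            break
        ACTIONS[steps[0]](state)        # execute only the next step, then re-evaluate
        state["trace"].append(steps[0])
    return state


result = run("refund order A-1")
print(result["trace"])
```

Re-planning after each step, rather than committing to the full sequence up front, is what lets the loop react when a lookup returns something unexpected. The iteration cap keeps a confused planner from running indefinitely.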

Tools

An agent must interact with systems to complete work. This involves selecting and invoking tools or APIs to retrieve data, update records, initiate workflows, or carry out calculations. The orchestration of these tools is dynamic; the agent can decide when and why to call a specific interface, rather than following a static sequence. Traditional RPA automates interface-level actions, usually by emulating clicks or form inputs, while chatbots typically pass requests to a downstream system rather than initiating multi-step operations themselves.
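One common way to expose tools is a registry that the agent calls by name. The sketch below assumes the reasoning model emits calls shaped like `{"tool": ..., "args": {...}}`; the `tool` decorator, `dispatch` function, and both example tools are hypothetical and not taken from any specific agent framework.

```python
import inspect
from typing import Any, Callable

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_invoice(invoice_id: str) -> dict:
    return {"id": invoice_id, "amount": 120.0}   # stubbed API read


@tool
def convert(amount: float, rate: float) -> float:
    return round(amount * rate, 2)                # simple calculation tool


def dispatch(call: dict) -> Any:
    """Validate and execute one tool call of the form {'tool': ..., 'args': {...}}."""
    name, args = call["tool"], call.get("args", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    # Raises TypeError if the arguments do not match the tool's signature.
    inspect.signature(TOOLS[name]).bind(**args)
    return TOOLS[name](**args)


invoice = dispatch({"tool": "get_invoice", "args": {"invoice_id": "INV-7"}})
converted = dispatch({"tool": "convert", "args": {"amount": invoice["amount"], "rate": 0.5}})
print(converted)
```

Validating the tool name and arguments before execution means a malformed or invented call fails loudly instead of reaching a backend system.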

Memory and context

Context continuity is a core differentiator. Agents maintain both immediate conversational context and longer-term history, allowing them to adapt responses, reference prior interactions, and identify when additional clarification is needed. Chatbots often lose context between turns or sessions, and RPA has no contextual model at all.

Policies and constraints

To ensure the agent operates within organizational boundaries, policy layers provide structured limits on what can be done, where, and under what conditions. These constraints may include access scope, approval requirements, redaction rules, or domain-specific compliance checks. Access should follow the principle of least privilege. Agents receive only the roles required to complete specific actions, with read/write scopes separated where possible. Sensitive operations, such as financial adjustments, contract approvals, or data deletions, should require explicit human confirmation or workflow-based approval.
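Least privilege and approval gates can be expressed as a small authorization check that runs before every action. The following sketch assumes a per-role `Policy` object; the class, the `authorize` function, and the action names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy for one agent role."""
    allowed_actions: frozenset
    needs_approval: frozenset = field(default_factory=frozenset)


def authorize(policy: Policy, action: str, approved_by: Optional[str] = None) -> str:
    """Return 'allow', 'pending_approval', or 'deny' for a requested action."""
    if action not in policy.allowed_actions:
        return "deny"                  # least privilege: anything unlisted is refused
    if action in policy.needs_approval and approved_by is None:
        return "pending_approval"      # sensitive operation waits for a human
    return "allow"


billing_agent = Policy(
    allowed_actions=frozenset({"read_invoice", "adjust_balance"}),
    needs_approval=frozenset({"adjust_balance"}),
)

print(authorize(billing_agent, "read_invoice"))
print(authorize(billing_agent, "adjust_balance"))
print(authorize(billing_agent, "adjust_balance", approved_by="j.doe"))
print(authorize(billing_agent, "delete_customer"))
```

The deny-by-default branch comes first on purpose: an action missing from the allow-list is refused even if someone supplies an approval.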

Observability

Full transparency into decisions and actions is essential. Every step taken by the agent should be logged and inspectable for operational troubleshooting and for compliance. Observability also supports refinement: reviewing transcripts and execution traces enables teams to adjust prompts, improve policies, or clarify instructions. Without this, autonomy becomes difficult to evaluate or correct. Practical governance configurations may include:

Requiring human approval for irreversible actions (refunds, account changes, contract signatures).

Action logs that record the prompt, reasoning summary, tool calls, and results.

Version-controlled prompt templates and policy files stored in Git or a configuration registry.

Regular review cycles of agent transcripts to refine boundaries and prevent instruction drift.
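An action log with the fields listed above might look like the sketch below. The record layout and the `log_action` helper are assumptions for illustration. A production system would write to append-only storage instead of an in-memory list.

```python
import json
from datetime import datetime, timezone


def log_action(log: list, prompt: str, reasoning_summary: str,
               tool_call: dict, result: dict) -> dict:
    """Append one structured, JSON-serializable record per agent step."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "reasoning_summary": reasoning_summary,
        "tool_call": tool_call,
        "result": result,
    }
    json.dumps(record)   # fail early if something in the record is not serializable
    log.append(record)
    return record


audit_log: list = []
log_action(
    audit_log,
    prompt="Customer asks for a refund on order A-1",
    reasoning_summary="Order is within the return window; refund requires approval",
    tool_call={"tool": "request_refund_approval", "args": {"order_id": "A-1"}},
    result={"status": "pending_approval"},
)
print(json.dumps(audit_log[0], indent=2))
```

Storing a short reasoning summary next to the tool call and its result is what makes a later review possible without replaying the whole session.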

Governance is not optional in agent-based systems. Each action the agent performs should be logged with a timestamp, input, output, and reasoning context to allow audit and post-event review. Audit trails support operational troubleshooting, compliance reporting, and trust in autonomous behavior. Escalation logic should be explicit: when uncertainty exceeds a defined threshold, control shifts to a human operator with full context carried forward.

Integration patterns

Integrating agents into enterprise environments relies on stable, well-defined interfaces and coordination models.

API-first interaction: Agents perform best when they can work directly with APIs rather than navigate user interfaces. API-based integration provides a consistent structure, precise access control, and lower maintenance overhead when systems evolve.

Event-driven workflows: Agents can be triggered by events rather than user requests—for example, a new support ticket, a contract reaching a specific review stage, or a change in system status. In this model, the agent evaluates context and determines the next steps. If a task requires structured execution, the agent may delegate specific actions to RPA while retaining responsibility for decision sequencing.

Human escalation: When the agent encounters an unclear situation, conflicting data, or a task with legal or customer sensitivity, it should transition control to a human. The quality of this handoff matters. Context preservation, transcript transfer, and clear reasoning markers reduce rework and prevent frustration.

Policy-as-code: Operational rules and constraints should be encoded in a form that is inspectable and consistent across environments. Version control, structured configuration, and environment scoping ensure that the agent behaves predictably and that changes are deliberate and traceable.
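The event-driven and escalation patterns above can be combined in a single routing step. The sketch below is illustrative: the `Event` fields, the `route` function, and the 0.8 confidence threshold are all assumptions, and how an agent estimates its own confidence is left out of scope.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.8   # assumed value; tune per workflow and risk level


@dataclass
class Event:
    kind: str                    # e.g. "ticket.created"
    payload: dict
    confidence: float            # agent's estimate that it understood the case
    sensitive: bool = False      # legal or customer-sensitive flag
    transcript: list = field(default_factory=list)


def route(event: Event) -> dict:
    """Decide who handles an event: a human, an RPA bot, or the agent itself."""
    if event.sensitive or event.confidence < CONFIDENCE_THRESHOLD:
        # Hand off with full context so the operator does not start from zero.
        return {
            "handler": "human",
            "reason": "sensitive" if event.sensitive else "low_confidence",
            "context": {"payload": event.payload, "transcript": event.transcript},
        }
    if event.payload.get("structured"):
        return {"handler": "rpa", "job": event.kind}   # fixed steps go to the bot
    return {"handler": "agent"}


ticket = Event("ticket.created", {"structured": False}, 0.55, transcript=["Hi, my order is late"])
print(route(ticket)["handler"], route(ticket)["reason"])
```

Checking sensitivity and confidence before anything else keeps the escalation path independent of whatever the agent or the RPA bot would have done with the event.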

How to migrate from RPA or chatbots to AI agents

Moving from rules-based automation to agent-based systems is a structural change in how work is coordinated and decisions are made. RPA and chatbots excel when tasks are predictable and interfaces are stable, but they struggle as variability increases. AI agents address this gap by planning, evaluating, and acting across systems. Yet autonomy requires control. According to a recent McKinsey report, organizations adopting automation achieve efficiency gains of up to 40%. The migration must be deliberate, observable, and aligned with the operational environment rather than driven by novelty.

1. Audit

The starting point is understanding what actually happens in current workflows. A process audit maps how information moves between systems, where decisions are made, and which steps depend on human judgment. The audit also examines how data is accessed, since agents require consistent, policy-aware retrieval and action interfaces.

2. Prioritize

Once the landscape is visible, prioritization determines which workflows merit early transition. Here, sequencing matters. Choosing high-frequency processes with recurring decision points creates meaningful impact while keeping scope contained. The goal is not to automate everything at once but to establish a sustainable pattern for introducing, reviewing, and expanding agents. Prioritization also aligns expectations: where autonomy is beneficial, where oversight is necessary, and where traditional automation should remain unchanged.

3. Create Proof of Concept for one or two processes

A focused proof of concept provides a controlled environment to evaluate how agents interact with real data and existing systems. Limiting the scope to one or two workflows allows performance and reliability to be measured without operational exposure. During this phase, teams observe how the agent interprets instructions, handles ambiguity, and escalates when needed.

4. Define control metrics

Measuring effectiveness requires a shift in how outcomes are evaluated. Traditional automation metrics focus on completion and throughput; agent-based automation must also account for autonomy, decision quality, and escalation behavior. Metrics such as resolution rate, step accuracy, policy alignment, and recovery from uncertainty indicate whether the agent is behaving as intended. Logging and observability are non-negotiable; the ability to trace how a decision was made is essential for refinement, auditability, and operational trust.
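Metrics like these can be computed directly from execution logs. The sketch below assumes each run is recorded as a dictionary with the hypothetical fields shown. The sample data is invented to make the arithmetic easy to follow.

```python
def control_metrics(runs: list) -> dict:
    """Aggregate per-run records into the control metrics described above."""
    total = len(runs)
    if total == 0:
        return {}
    resolved = sum(1 for r in runs if r["resolved"] and not r["escalated"])
    escalated = sum(1 for r in runs if r["escalated"])
    steps = sum(r["steps_total"] for r in runs)
    correct = sum(r["steps_correct"] for r in runs)
    violations = sum(r["policy_violations"] for r in runs)
    return {
        "resolution_rate": resolved / total,            # completed without escalation
        "escalation_rate": escalated / total,
        "step_accuracy": correct / steps if steps else 0.0,
        "policy_violations_per_run": violations / total,
    }


sample_runs = [
    {"resolved": True,  "escalated": False, "steps_total": 5, "steps_correct": 5, "policy_violations": 0},
    {"resolved": True,  "escalated": False, "steps_total": 4, "steps_correct": 3, "policy_violations": 0},
    {"resolved": False, "escalated": True,  "steps_total": 3, "steps_correct": 2, "policy_violations": 0},
    {"resolved": True,  "escalated": False, "steps_total": 4, "steps_correct": 4, "policy_violations": 1},
]
print(control_metrics(sample_runs))
```

Counting a run as resolved only when it was not escalated separates autonomous completion from cases a human finished.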

5. Deploy

Scaling beyond pilot includes limiting write access, enforcing policy checks, and introducing staged rollouts. Deployment progresses from constrained environments to broader operational use as reliability improves. The objective is to maintain predictability while increasing the scope of autonomous execution.

6. Train the operator

As agents assume operational tasks, the role of the human operator evolves. Instead of constructing scripts or step logic, operators review transcripts, adjust guidance, and shape how the agent understands context. Over time, the human provides direction in edge cases and calibrates tone and interpretation, while the agent handles procedural tasks and retrieval.

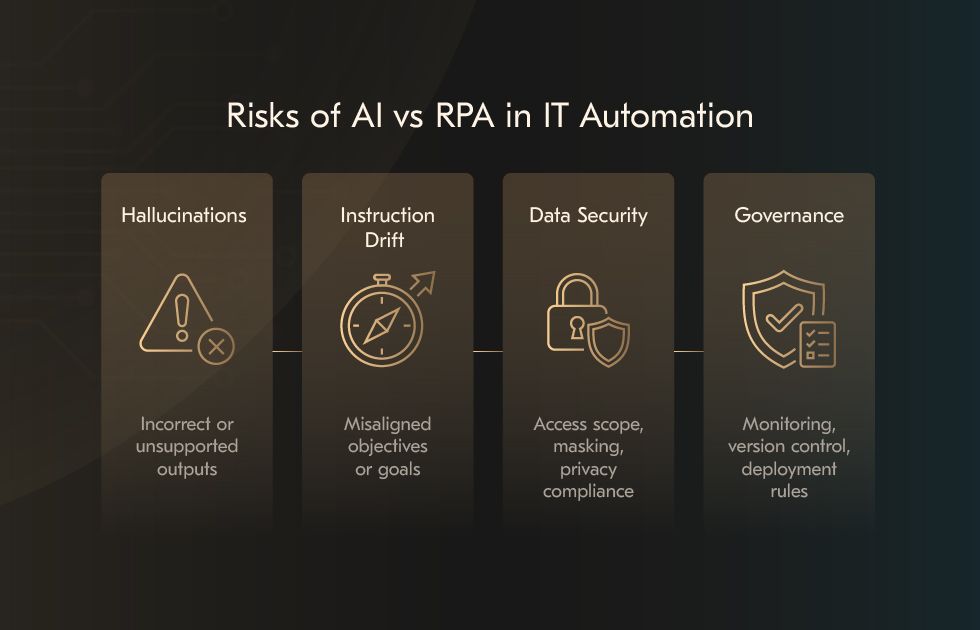

What are the risks of AI vs RPA for IT automation?

The shift from rule-based automation to systems that interpret context and make decisions introduces a different risk profile. AI agents rely on language models, external tools, and adaptive behavior.

Hallucinations

Generative models may produce responses that appear coherent but are incorrect or insufficiently supported by data. When an agent is allowed to act on those outputs, the risk extends beyond inaccurate messaging and can influence system changes or customer interactions. These errors are more likely when prompts are underspecified or when the underlying data context is incomplete. Structured validation steps, deterministic retrieval mechanisms, and content verification layers help maintain accuracy.
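A structured validation step can be as simple as checking a model's proposed action against the system of record before anything is executed. In the sketch below, `ORDERS` stands in for a deterministic lookup, and the proposal format and `validate_refund` function are hypothetical.

```python
ORDERS = {"A-1": {"total": 80.0, "refundable": True}}   # stand-in for the system of record


def validate_refund(proposal: dict) -> tuple:
    """Check a model-proposed refund against stored facts; return (ok, reason)."""
    order = ORDERS.get(proposal.get("order_id"))
    if order is None:
        return False, "order not found"          # the model may have invented the ID
    if not order["refundable"]:
        return False, "order not refundable"
    amount = proposal.get("amount")
    if not isinstance(amount, (int, float)) or amount <= 0:
        return False, "amount missing or invalid"
    if amount > order["total"]:
        return False, "amount exceeds order total"
    return True, "ok"


print(validate_refund({"order_id": "A-1", "amount": 80.0}))
print(validate_refund({"order_id": "A-1", "amount": 800.0}))
print(validate_refund({"order_id": "Z-9", "amount": 10.0}))
```

The check trusts only what the lookup returns, so a fluent but unsupported proposal is rejected before it reaches a system that can move money.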

Instruction drift

Instruction drift occurs when an agent interprets objectives too narrowly or pursues a goal without understanding contextual constraints. This risk increases when tasks are ambiguous or when agents operate on high-level instructions without policy scaffolding. Clear task decomposition, explicit constraints, and intermediate checkpoints can reduce misaligned behavior.

Data security

AI agents typically operate across multiple systems, requiring access to internal data and functional interfaces. Without careful scoping, this can expand the security surface area. Role-based access control, separation of read and write permissions, and environment segmentation should be standard. Customer or employee data must be processed in compliance with organizational privacy frameworks and applicable regulations.

The design should follow the principles of privacy by design and data minimization. Agents should only process the fields necessary for a given task, avoid storing unnecessary personal data, and apply masking or redaction where required.
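Data minimization can be enforced at the point where records are handed to the agent. The sketch below uses a per-task allow-list and a simple email mask; the field names and the masking rule are assumptions for illustration, not a compliance recommendation.

```python
import re

ALLOWED_FIELDS = {"customer_id", "email", "order_total"}   # per-task allow-list (assumed)
MASKED_FIELDS = {"email"}


def mask_email(value: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    if "@" not in value:
        return "***"                                        # not a recognizable address
    return re.sub(r"^(.)[^@]*(@.*)$", r"\1***\2", value)


def minimize(record: dict) -> dict:
    """Drop fields the task does not need and mask the sensitive ones that remain."""
    out = {}
    for key, value in record.items():
        if key not in ALLOWED_FIELDS:
            continue
        out[key] = mask_email(value) if key in MASKED_FIELDS else value
    return out


raw = {"customer_id": "C-9", "email": "jane.doe@example.com",
       "order_total": 42.5, "ssn": "000-00-0000"}
print(minimize(raw))
```

An allow-list fails safe: a new field added to the source system stays hidden from the agent until someone explicitly permits it.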

Governance

As automation scales, unmanaged deployment of autonomous components can lead to inconsistent behavior and operational fragmentation. Ensuring that agent behavior is monitored, versioned, and reviewable is essential. Every action and decision path should be logged in a form that allows post-event reasoning. Performance and error metrics should feed back into model refinement rather than requiring manual retraining. Governance practices should define who can deploy, modify, approve, and decommission agents to avoid uncontrolled proliferation.

How to assess performance and ROI across RPA vs agentic AI

The metrics below provide a grounded approach to evaluating automation outcomes. They apply across RPA, chatbots, and AI agents, but the degree of improvement varies depending on task complexity and autonomy. The most consistent drivers of value come from reduced manual effort, faster task completion, and more stable service performance, particularly in high-volume workflows.

Core business indicators typically assessed include:

Cost reduction in labor effort, rework, and routine processing

Throughput and productivity improvements across repetitive tasks

Customer satisfaction measures such as CSAT and NPS

Overall return on investment relative to deployment and operational costs

Some organizations have reported meaningful financial impact. Productivity gains often stem from fewer manual handoffs, automated data gathering, and decreased error rates. Customer satisfaction improvements tend to follow shorter wait times and more consistent resolution outcomes. RPA deployments have historically shown payback within a year, and similar timeframes are now observed in agent-based implementations, though results depend on process variability and integration maturity.

Service interaction

Average Handle Time is a central operational measure. AI agents can reduce handling time by collecting context automatically, summarizing conversation history, and performing follow-up steps directly in systems. Some implementations have reported substantial reductions in handling effort for frequently recurring cases. Service Level performance often improves due to consistent response speed and 24/7 operation.

A defining measure for modern automation is the proportion of cases completed without escalation. Earlier chatbot deployments generally focused on deflection or routing. AI agents are designed to complete multi-step workflows when data access and organizational policies allow. Reported resolution rates vary widely: some systems resolve a majority of inbound inquiries, others provide partial automation that still reduces workload.

Maintenance effort

The long-term efficiency of automation depends on how easily systems can adapt to changes. RPA is effective when interfaces and task patterns remain consistent, but requires rework when forms, UI layouts, or workflow steps change. Traditional chatbots require regular updates to dialogue rules and training data.

AI agents typically require less manual revision. They can be deployed more quickly, adjusted with fewer configuration steps, and improve behavior through feedback. In various documented comparisons, the development effort for agent-based workflows has been significantly lower than for functionally equivalent RPA automations, and some agent deployments have gone into production within a week. Adaptation to evolving workflows is also smoother, as adjustments are based less on scripted logic and more on contextual interpretation.

Taken together, these metrics help determine which automation method is most suitable. RPA remains appropriate for structured, repetitive tasks. Chatbots remain useful for straightforward inquiries that do not require system-level action. AI agents are particularly effective when workflows span multiple systems, involve incomplete or variable inputs, or require context-based decision-making.

Endnote

AI agents are not a replacement for every existing automation initiative, but they redefine what can be automated when processes involve fragmented systems, incomplete data, or decisions that cannot be fully scripted. The practical value lies in determining where RPA and chatbots remain sufficient, and where introducing agents can deliver measurable improvements in cycle time, accuracy, and operational resilience.

A structured assessment of workflows, data access, compliance requirements, and escalation paths is essential before scaling. If you are evaluating where agents fit within your automation roadmap, we can conduct a focused process audit or discovery workshop to define the right integration path and expected ROI. Schedule a consultation with us.

For teams that want to evaluate their processes independently first, we’ve prepared a concise selection framework summarizing decision criteria, system-readiness factors, and governance considerations. Get the checklist here.
