Most Large Language Models (LLMs) already “work.” They generate fluent text, answer general questions, and demonstrate impressive reasoning. Yet in real applications, this is rarely enough. Models that perform well in demos often fail when they are expected to use precise terminology, adhere to strict formats, or remain consistent across thousands of similar requests.

LLM fine-tuning closes this gap. It takes a general-purpose foundation model and trains it to operate within specific boundaries: terminology, structure, decision rules, and acceptable responses. As a result, its outputs become predictable rather than merely plausible. Enterprises benefit from more consistent responses, fewer hallucinations, and behavior that holds up under real operational conditions.

If you’re evaluating whether fine-tuning is the right approach for your use case, or want to understand the effort, data, and infrastructure required to do it properly, Acropolium can help. Explore our LLM customization services to see how we tailor foundation models for real workflows, or get in touch to discuss your requirements and the fastest path to a production-ready model.

This article explains what LLM fine-tuning is, how to fine-tune an LLM, what advantages it brings, and which challenges come with adoption and how to address them. It also shows how fine-tuning fits into modern AI systems and how it turns general language models into reliable, production-ready components.

What is LLM fine-tuning?

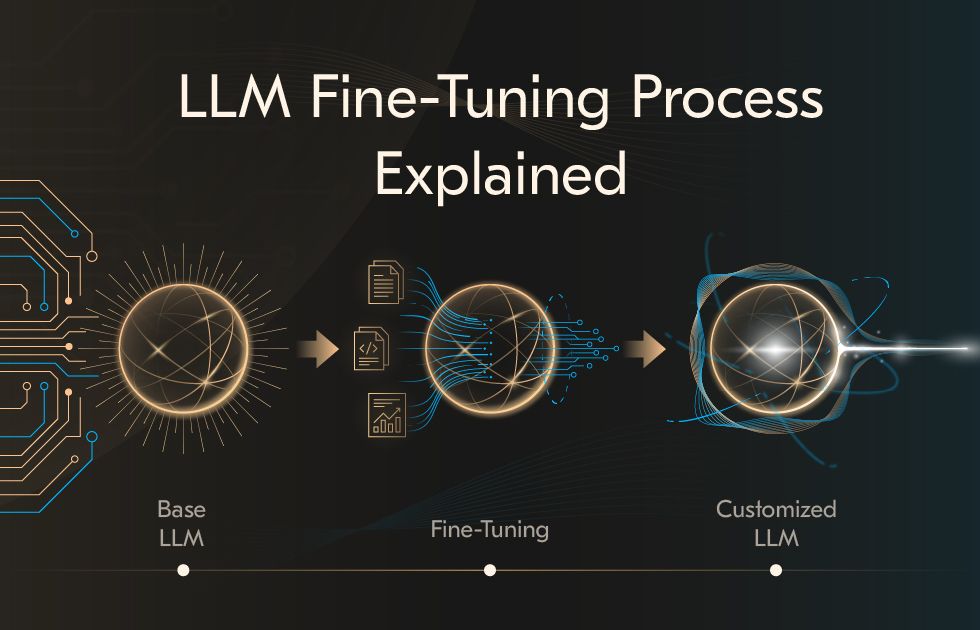

LLM fine-tuning is the process of adapting a pre-trained foundation model, one that has already been trained on massive, heterogeneous text corpora, to perform reliably within a specific domain or task. Instead of learning language from scratch, the model builds on its existing linguistic and semantic representations through transfer learning, refining its behavior using a smaller, carefully curated dataset. The outcome is a system that applies general language understanding in a controlled, context-aware way to problems such as clinical documentation, legal analysis, risk assessment, or domain-specific classification.

The primary objective of fine-tuning is to convert probabilistic language generation into task-aligned behavior. In practical terms, enterprises improve consistency, reduce ambiguity, and align outputs with domain rules and expectations. Fine-tuned models typically achieve higher accuracy on narrow tasks, often allowing smaller or open-source models to outperform larger general-purpose systems when evaluated on domain-specific workloads.

Fine-tuning LLMs addresses a structural limitation of foundation models. These models are designed to be generalists. They are optimized to perform reasonably well across countless topics, styles, and intents, but they lack strong preferences about terminology, structure, or decision boundaries. Fine-tuning introduces those preferences. It teaches the model how to use language in a specific operational context.

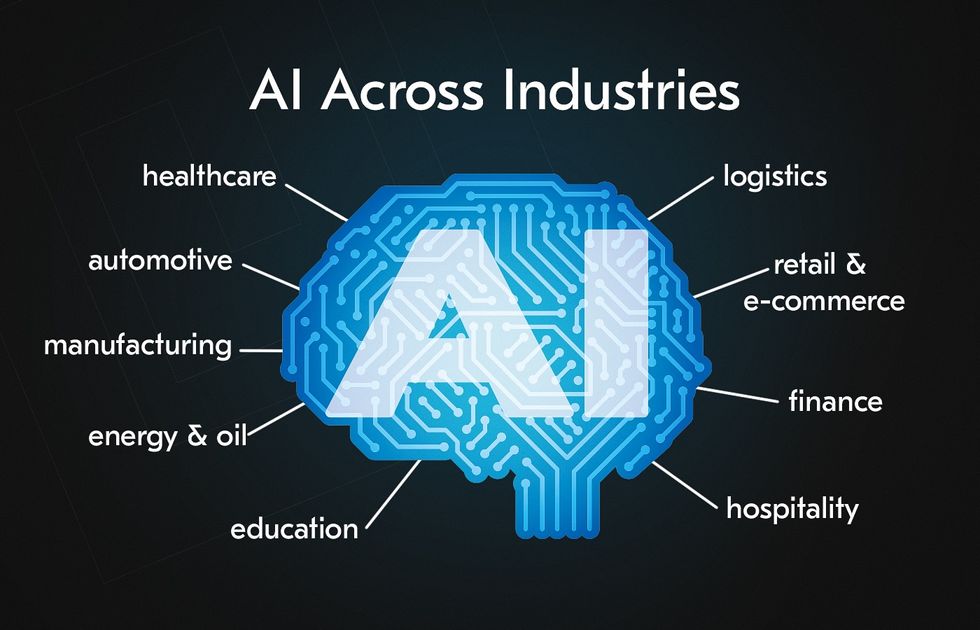

Why is fine-tuning critical for business use cases?

Domain-level precision where generic models fall short: Generic models lack the context of a specific field. Fine-tuning allows models to internalize that context so that outputs reflect how language is actually used in medical documentation, legal reasoning, financial analysis, or internal operational workflows.

Higher reliability in high-impact scenarios: Fine-tuned models learn task-specific patterns and constraints, which significantly reduce inconsistent outputs and speculative responses.

Lower operating costs through specialization: Instead of relying on large, general-purpose models for every task, fine-tuning enables smaller or open-source models to deliver comparable or better results on focused workloads.

Stronger data control and regulatory alignment: Fine-tuning supports deployment strategies that keep sensitive data within controlled environments.

Operational readiness for autonomous workflows: When organizations fine-tune an LLM, they enable it to interact reliably with internal tools, APIs, and systems.

Why does AI adoption need LLM fine-tuning?

More precise behavioral boundaries: Fine-tuning narrows the model’s response space, reducing uncertainty about what constitutes an acceptable output.

Improved generalization within a defined scope: A well-fine-tuned model learns patterns that generalize across variations of the same task.

Reduced reliance on prompt engineering: Encoding expectations into model weights decreases sensitivity to prompt phrasing. This simplifies system design for multi-modal AI integration and lowers the risk of prompt drift breaking downstream behavior.

Better alignment with evaluation and monitoring frameworks: Fine-tuned models produce outputs that are easier to validate against structured metrics, schemas, and behavioral tests, which matters in multi-model AI integration.

More efficient use of computational resources: Parameter-efficient fine-tuning techniques allow models to adapt without retraining all weights, preserving base capabilities.

How LLM fine-tuning works

It starts from a pre-trained foundation model

Every fine-tuning workflow begins with a foundation model that has already absorbed broad linguistic patterns through large-scale pre-training. This phase equips the model with grammar, syntax, and general world knowledge, but it does not impose strong preferences about how that knowledge should be applied in specific workflows. As a result, pre-trained models tend to be flexible but inconsistent. Fine-tuning builds on the learned weights from this stage, treating them as a stable baseline and incrementally adjusting behavior rather than relearning language fundamentals.

It defines behavior through targeted training data

Once a base model is selected, the focus shifts to data. Fine-tuning data defines expectations. Training examples are structured as input–output pairs that demonstrate how the answer should be expressed. Cleaning, normalization, and tokenization ensure the model sees consistent patterns during training. In practice, a small, well-curated dataset that mirrors real usage often produces better results than a large but loosely defined corpus. When labeled data is limited, carefully controlled synthetic examples can be used to extend coverage without distorting intent.
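The cleaning and normalization steps above can be sketched in a few lines of Python. This is a minimal illustration that uses only the standard library; the `prompt` and `completion` field names, the sample records, and the JSONL layout are assumptions, not a format required by any particular training framework. Tokenization is left to the training toolkit.

```python
import json
import unicodedata

def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    return " ".join(unicodedata.normalize("NFKC", text).split())

def prepare_examples(raw_pairs):
    """Clean (input, output) pairs and drop empty or duplicate examples."""
    seen = set()
    cleaned = []
    for prompt, completion in raw_pairs:
        prompt, completion = normalize(prompt), normalize(completion)
        if not prompt or not completion:
            continue  # skip pairs with a missing side
        key = (prompt.lower(), completion.lower())
        if key in seen:
            continue  # skip case-insensitive duplicates
        seen.add(key)
        cleaned.append({"prompt": prompt, "completion": completion})
    return cleaned

def to_jsonl(examples) -> str:
    """Serialize examples as one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)

# Hypothetical raw records: one clean pair, one near-duplicate, one incomplete.
raw = [
    ("Summarize:  patient reports mild   headache.", "Chief complaint: mild headache."),
    ("summarize: patient reports mild headache.", "Chief complaint: mild headache."),
    ("Classify ticket: refund not received", ""),
]
examples = prepare_examples(raw)
print(to_jsonl(examples))
```

Only the first record survives: the second is a case-insensitive duplicate and the third has no expected output.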

It learns from explicit supervision

Supervised fine-tuning (SFT) is the most common mechanism for adapting LLMs. During training, the model generates an output for each input example, and that output is compared with the expected response. A loss function measures the difference between the two, and optimization algorithms adjust the model’s parameters to reduce that error. Over successive iterations, the model internalizes task-specific vocabulary, formatting rules, and reasoning patterns.
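To make the loss-and-update loop concrete, here is a toy sketch over a three-token vocabulary. It is a deliberate simplification: the gradient step is applied directly to the logits, whereas real fine-tuning backpropagates into model weights. The cross-entropy loss used here is the usual choice for next-token prediction; the learning rate and starting logits are arbitrary.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the expected token."""
    return -math.log(softmax(logits)[target_index])

def gradient_step(logits, target_index, lr):
    """One gradient-descent step on the logits.

    For softmax followed by cross-entropy, the gradient with respect to
    logit i is p_i minus 1 if i is the target, else p_i.
    """
    probs = softmax(logits)
    return [
        x - lr * (p - (1.0 if i == target_index else 0.0))
        for i, (x, p) in enumerate(zip(logits, probs))
    ]

logits = [0.0, 0.0, 0.0]   # the model starts with no preference
target = 2                 # index of the expected token
losses = []
for _ in range(5):
    losses.append(cross_entropy(logits, target))
    logits = gradient_step(logits, target, lr=0.5)

print([round(loss, 3) for loss in losses])
```

The loss starts at ln(3), the value for a uniform guess over three tokens, and falls with each step as probability mass moves toward the expected token.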

It aligns outputs with human judgment

Some qualities cannot be fully captured by labeled examples alone. Reinforcement learning from human feedback (RLHF) addresses this gap by incorporating qualitative preference signals. The model produces multiple candidate responses, which human reviewers evaluate based on criteria such as clarity, safety, and usefulness. These preferences are used to train a reward model that guides further optimization.
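One commonly used way to train a reward model from such comparisons is a pairwise loss that is small when the preferred response scores higher than the rejected one. The sketch below shows only that loss; the reward values are hypothetical numbers standing in for the outputs of a reward model, and the article does not prescribe this exact formulation.

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Written as log(1 + exp(-margin)), which is the same quantity.
    """
    margin = reward_chosen - reward_rejected
    return math.log1p(math.exp(-margin))

# Hypothetical reward scores for two candidate responses to the same prompt.
agrees_with_reviewer = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
contradicts_reviewer = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
print(round(agrees_with_reviewer, 3), round(contradicts_reviewer, 3))
```

Minimizing this loss over many reviewer comparisons pushes the reward model to rank responses the way reviewers do.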

It adapts efficiently through transfer learning approaches

Fine-tuning does not always require updating all model parameters. Instruction tuning teaches the model to follow diverse natural-language commands, improving general task compliance. Domain adaptation reinforces industry-specific language and conventions. Parameter-efficient fine-tuning methods, such as LoRA or adapters, limit training to a small subset of parameters while keeping the base model frozen. This approach reduces memory requirements, minimizes the risk of eroding general capabilities, and makes fine-tuning practical even with constrained resources.
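The parameter savings behind LoRA follow from simple arithmetic: instead of updating a full `d_out x d_in` weight matrix, it trains two small matrices of rank `r`. The pure-Python sketch below illustrates the idea under stated assumptions; the layer size (4096), the rank (8), the scaling factor, and the tiny matrices are illustrative values, and it does not use any LoRA library.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """A is (rank x d_in) and B is (d_out x rank), so rank * (d_in + d_out)."""
    return rank * (d_in + d_out)

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(f"full: {full:,}  lora: {lora:,}  share: {lora / full:.2%}")

# Tiny example: with B initialized to zero, the adapted layer starts out
# identical to the frozen base layer.
W = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
A = [[0.1, 0.2, 0.3]]          # rank 1
B = [[0.0], [0.0]]             # zero-initialized
x = [1.0, 1.0, 1.0]
y = lora_forward(W, A, B, x, alpha=16, rank=1)
```

For this layer size, the adapter trains well under one percent of the weights in the full matrix.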

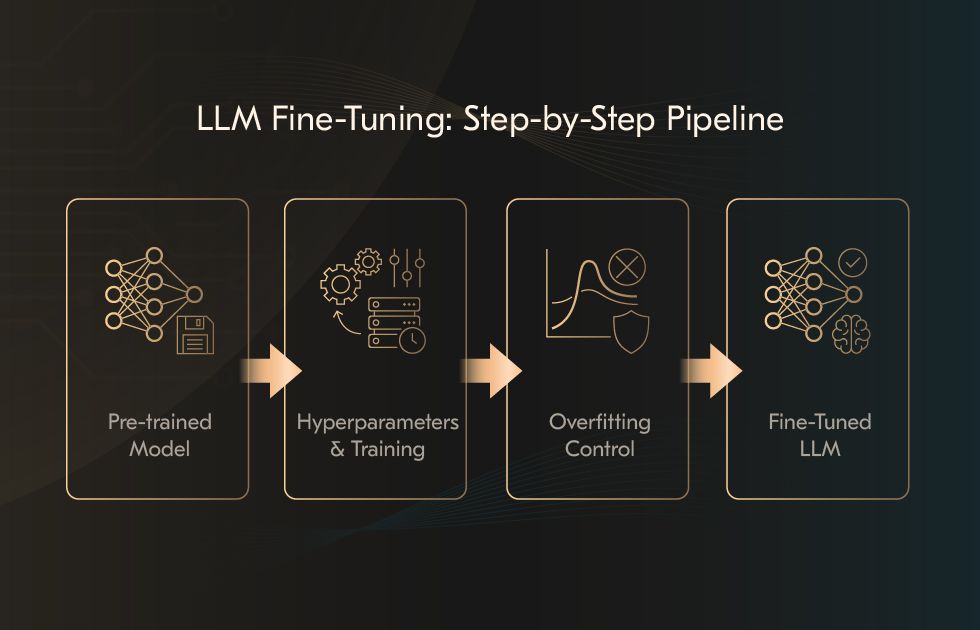

How to fine-tune an LLM: Step-by-step process

While tools and infrastructure may vary, mature implementations follow a consistent pipeline that spans dataset preparation, model selection, training setup, fine-tuning, model evaluation, deployment, and ongoing monitoring. Each stage builds on the previous one, and skipping or compressing any of them usually leads to later issues, including instability, rising costs, or quality regressions.

Step 1: Select the right pre-trained model

The starting model defines the boundaries of what fine-tuning can realistically achieve. If the base model is poorly aligned with the task, no amount of tuning will fully compensate for that mismatch. Model size is often the first consideration. Larger models typically offer stronger reasoning and broader language coverage, but they also introduce higher training and inference costs. In contrast, smaller models can be far more efficient and, when fine-tuned correctly, often deliver superior performance on narrowly scoped tasks.

Architecture is the next decision point. Encoder-only models are well-suited to tasks that require understanding rather than generation, such as classification or entity recognition. Decoder-only models are designed for generative workflows and multi-step reasoning. Beyond architecture, the nature of the pre-training data matters. Models that have already seen similar domains tend to adapt faster and with fewer unintended side effects. One final checkpoint at this stage is licensing and compliance. Constraints here shape the options for deploying fine-tuned LLMs and should be resolved before any technical work begins, not after.

Step 2: Set training parameters and tune hyperparameters

Once the base model is selected, attention shifts to how it will be adapted. Hyperparameters govern learning dynamics and, in practice, are a common source of instability. The learning rate controls how aggressively the model updates its internal representations. Set too high, it can overwrite useful pre-trained knowledge; set too low, it may fail to produce meaningful change. Batch size introduces a similar trade-off between stability and efficiency, influencing both memory usage and convergence behavior.

Epoch count and optimizer choice further shape outcomes. In most fine-tuning scenarios, only a limited number of passes through the data are required before additional training begins to harm generalization. To manage these interactions systematically, teams increasingly rely on automated model tuning methods that explore parameter combinations in a controlled and reproducible way rather than relying on intuition alone.
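A controlled, reproducible search can be as simple as enumerating a small grid and recording every result. In the sketch below, the search space values are illustrative, and `placeholder_validation_loss` is a made-up stand-in for "train with this configuration and return the validation loss"; in a real pipeline that function would launch a training run.

```python
import itertools

# Illustrative search space; real ranges depend on the model and dataset.
SEARCH_SPACE = {
    "learning_rate": [1e-5, 5e-5, 1e-4],
    "batch_size": [8, 16],
    "num_epochs": [1, 3],
}

def grid(search_space):
    """Yield every combination of hyperparameter values as a dict."""
    keys = list(search_space)
    for values in itertools.product(*(search_space[k] for k in keys)):
        yield dict(zip(keys, values))

def run_search(search_space, evaluate):
    """Return (best_config, best_score, all_results); lower score is better."""
    results = [(config, evaluate(config)) for config in grid(search_space)]
    best_config, best_score = min(results, key=lambda item: item[1])
    return best_config, best_score, results

def placeholder_validation_loss(config):
    # Arbitrary formula that only exists to make the sketch runnable.
    return (
        abs(config["learning_rate"] - 5e-5) * 1e4
        + abs(config["num_epochs"] - 3) * 0.1
        + 1 / config["batch_size"]
    )

best_config, best_score, results = run_search(SEARCH_SPACE, placeholder_validation_loss)
print(best_config, best_score)
```

Keeping `results` alongside the winner makes the search auditable: every configuration that was tried, and how it scored, can be logged and compared later.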

Step 3: Prevent overfitting and preserve model reliability

Overfitting occurs when the model learns the training data too well and loses the ability to generalize to new inputs. This often shows up as strong training metrics paired with weaker validation results and brittle behavior in production. Regularization techniques such as dropout and weight decay help limit this tendency by preventing the model from over-relying on narrow patterns. Early stopping adds another safeguard by halting training as soon as validation performance begins to degrade.
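Early stopping is straightforward to express in code. The sketch below uses a `patience` parameter, a common variation that tolerates a couple of non-improving epochs before halting so that a single noisy measurement does not end training. The class name, the default values, and the validation losses are illustrative assumptions.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 2, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta      # smallest change that counts as improvement
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss   # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1        # no improvement this epoch
        return self.bad_epochs >= self.patience

# Made-up validation losses: improvement for three epochs, then degradation.
val_losses = [0.90, 0.72, 0.65, 0.66, 0.70, 0.75]
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate(val_losses, start=1):
    if stopper.should_stop(loss):
        stopped_at = epoch
        break

print(stopped_at, stopper.best_loss)
```

In practice, the checkpoint saved at the best epoch (epoch 3 here) is the one to keep, not the weights from the epoch at which training stopped.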

In most single-task scenarios, effective fine-tuning is achieved with 1,000 to 5,000 high-quality examples. Multi-task models, by contrast, often require 50,000–100,000 examples to avoid interference between tasks and preserve consistent behavior across domains.

Still, technical safeguards only work if the data itself is sound. Fine-tuning amplifies whatever signal the dataset provides, good or bad. That is why data quality often matters more than model choice or hyperparameter precision. Auditing datasets for bias, duplication, and inconsistent labeling is a core part of the tuning process. Parameter-efficient LLM fine-tuning methods introduce an additional layer of protection by limiting how much of the base model is altered, reducing the risk that specialized training erodes general language competence.

How to integrate and deploy LLM fine-tuning

The real test of a fine-tuned LLM begins when the model leaves the controlled environment of experimentation and becomes part of a live system. This transition introduces new requirements, including latency, cost predictability, operational stability, security, and long-term maintainability. How well integration and deployment are handled often determines whether a fine-tuned model succeeds.

Step 1: Deploy fine-tuned LLM into production environments

Deployment typically starts by packaging the trained model for a production runtime. This includes exporting the model, preparing inference containers, provisioning hardware, and exposing stable APIs that downstream systems can depend on. At this stage, architectural decisions become costly to reverse, so the deployment strategy should be planned alongside fine-tuning.

Most teams choose between managed cloud platforms and self-hosted environments with distinct implications. Cloud deployment offers elasticity and operational simplicity, making it suitable for workloads with variable demand. At the same time, token-based pricing and shared infrastructure can introduce cost volatility at scale. Self-hosted or on-premises deployment shifts responsibility inward but provides tighter control over performance characteristics, resource utilization, and data boundaries. This trade-off is often decisive when models process sensitive or proprietary information.

Once the model is live, inference efficiency becomes central. Fine-tuned models are commonly optimized through quantization, reducing numerical precision to lower memory usage while preserving output quality. Inference engines designed for high-throughput workloads further optimize LLM performance by managing attention memory more efficiently and reducing fragmentation under concurrency. In distributed scenarios, model layers can be partitioned across multiple nodes, allowing large models to operate collaboratively without central bottlenecks. In some cases, inference can even be pushed to the client environment, eliminating server-side exposure. The next step is integrating fine-tuned LLMs into AI agents.
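To show what "reducing numerical precision" means in practice, here is a minimal sketch of symmetric 8-bit quantization for a handful of weights. Production inference engines use more refined schemes (per-channel or per-group scales, 4-bit formats), so treat this as an illustration of the principle only; the weight values are made up.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.003, 1.0]          # illustrative values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight now needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight. Small weights such as `0.003` are the ones that lose the most relative precision, which is one reason finer-grained scales are used in practice.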

Step 2: Integrate fine-tuned models with agentic AI systems

Fine-tuned models are often embedded into systems that plan actions, call tools, and coordinate multiple steps. Here, fine-tuning plays a structural role. Training data can encode these behaviors directly, allowing the model to internalize when and how to invoke specific functions.

This approach reduces runtime complexity and makes agent behavior more deterministic, which simplifies AI agent development. Tool usage becomes a learned behavior rather than a fragile set of instructions, making multi-step workflows more reliable. Alignment with standardized interaction protocols further simplifies integration by defining consistent interfaces between models and external systems. As a result, the model functions as a controlled component within the larger execution flow of an enterprise multi-model AI system.
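A training example that encodes tool usage might pair a user request with a structured call as the expected output. The sketch below is hypothetical throughout: the `get_invoice_status` tool, its `invoice_id` argument, and the JSON layout are invented for illustration and do not follow any specific vendor’s function-calling format.

```python
import json

# Hypothetical tool registry: tool name mapped to its required argument names.
TOOLS = {"get_invoice_status": {"invoice_id"}}

def make_tool_example(user_message: str, tool_name: str, arguments: dict) -> dict:
    """Build one training pair whose expected output is a structured tool call."""
    if tool_name not in TOOLS:
        raise ValueError(f"unknown tool: {tool_name}")
    if set(arguments) != TOOLS[tool_name]:
        raise ValueError(f"arguments for {tool_name} must be {sorted(TOOLS[tool_name])}")
    target = json.dumps({"tool": tool_name, "arguments": arguments}, sort_keys=True)
    return {"input": user_message, "output": target}

example = make_tool_example(
    "Has invoice INV-1042 been paid yet?",
    "get_invoice_status",
    {"invoice_id": "INV-1042"},
)
print(json.dumps(example, indent=2))
```

Validating every example against the registry before training keeps malformed calls out of the dataset, so the model only ever sees tool invocations that the surrounding system can actually execute.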

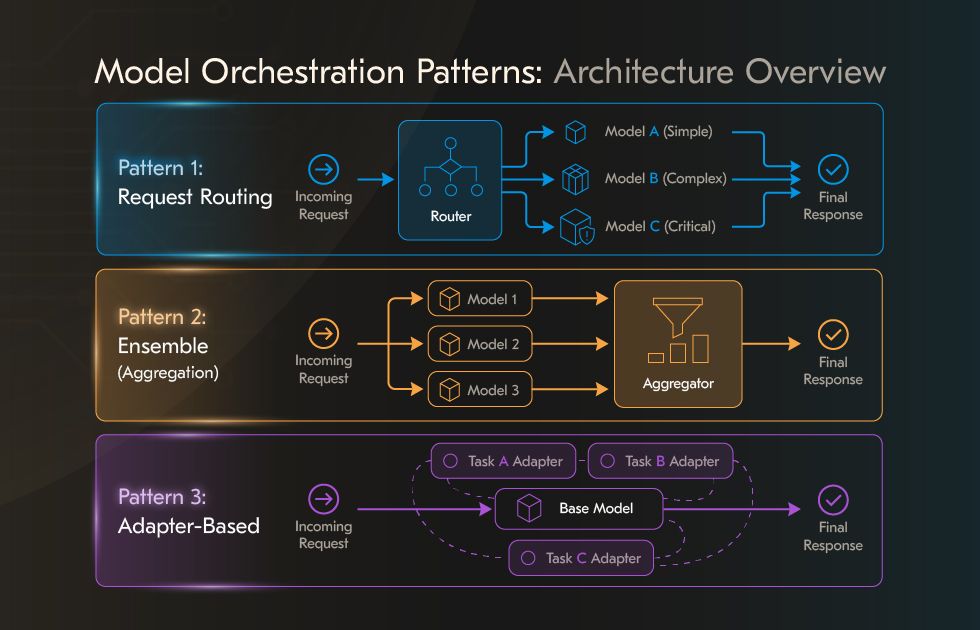

Step 3: Orchestrate multiple models for robust performance

When systems mature, reliance on a single model often gives way to coordinated model ecosystems. Different models or differently tuned variants of the same model handle distinct responsibilities. Some generate candidate outputs, others evaluate or aggregate them, and still others specialize in classification or routing. This separation improves robustness by avoiding single points of failure and allowing each model to operate within its strengths.

Common model orchestration patterns include:

routing requests between multiple specialized models based on task complexity or risk,

combining outputs from several models to improve robustness on ambiguous inputs,

activating task-specific adapters within a shared base model to avoid complete duplication.

Each adapter encodes task-specific behavior and can be activated dynamically. This avoids duplicating entire models while allowing behavior to evolve independently across tasks. Orchestration logic then determines which combination of models or adapters is appropriate for each request, based on confidence, latency constraints, or risk profiles.
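Orchestration logic of this kind often reduces to a small, testable routing function. The sketch below is one possible arrangement, not a recommended policy: the route names, the 500 ms latency cutoff, the 0.8 confidence threshold, and the idea of an upstream classifier supplying `confidence` are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Request:
    text: str
    risk: str             # "low" or "high"
    max_latency_ms: int   # latency budget for this request

def route(request: Request, confidence: float, threshold: float = 0.8) -> str:
    """Pick a serving path from risk, latency budget, and classifier confidence.

    `confidence` is a hypothetical upstream score for how sure the system is
    that the request belongs to a task the specialized adapter was tuned for.
    """
    if request.risk == "high":
        return "ensemble"   # combine several models on risky inputs
    if request.max_latency_ms < 500:
        return "adapter"    # tight budget: stay on the small tuned path
    if confidence < threshold:
        return "general"    # unfamiliar input: fall back to the general model
    return "adapter"        # familiar, low-risk input: use the task adapter

print(route(Request("Refund status for order 1182?", "low", 2000), confidence=0.93))
```

Because the rules are evaluated in a fixed order, risk takes precedence over latency, and latency over confidence; changing that priority is a one-line edit that can be covered by unit tests.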

Step 4: Monitor and improve continuously

Deployment marks the beginning of a feedback loop rather than the end of development. Real-world inputs rarely remain static, and model behavior inevitably drifts as language, data distributions, and usage patterns evolve. Without monitoring, this degradation often surfaces only through downstream failures.

Effective monitoring operates on several levels:

System metrics capture latency, throughput, and error rates.

Output-level checks assess structural validity, relevance, and safety.

Drift detection compares current behavior against established baselines over time, signaling when the model no longer fits within its original performance envelope.
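The output-level and drift checks above can be sketched together. The example assumes the model is expected to return JSON with `category` and `confidence` fields; that schema, the sample outputs, the baseline rate, and the 5-point tolerance are all hypothetical.

```python
import json

REQUIRED_FIELDS = {"category", "confidence"}   # hypothetical output schema

def is_structurally_valid(raw_output: str) -> bool:
    """Check that the output parses as a JSON object with the required fields."""
    try:
        parsed = json.loads(raw_output)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and REQUIRED_FIELDS <= parsed.keys()

def validity_rate(outputs) -> float:
    """Share of sampled outputs that pass the structural check."""
    return sum(is_structurally_valid(o) for o in outputs) / len(outputs)

def drifted(current_rate: float, baseline_rate: float, tolerance: float = 0.05) -> bool:
    """Flag drift when the current rate falls more than `tolerance` below baseline."""
    return baseline_rate - current_rate > tolerance

# Made-up sample of recent model outputs.
sample = [
    '{"category": "billing", "confidence": 0.91}',
    'Sure! The category is billing.',
    '{"category": "billing"}',
    '{"category": "shipping", "confidence": 0.64}',
]
rate = validity_rate(sample)
print(rate, drifted(rate, baseline_rate=0.98))
```

The same pattern extends to relevance or safety scores: compute a rate over a sample of live traffic, then compare it with the value recorded when the model was first approved for production.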

Sustaining quality requires structured update strategies. Some systems rely on periodic retraining to incorporate new data, while others trigger updates only when performance thresholds are breached. More targeted approaches use active learning to identify precisely where the model struggles and focus retraining efforts there. What matters is the discipline with which updates are planned, evaluated, and rolled out.

What are the advantages of fine-tuning an LLM?

Fine-tuning Large Language Models can reshape how a model behaves under real operational constraints. A pre-trained foundation model is intentionally broad, probabilistic, and adaptive. LLM fine-tuning introduces discipline. It narrows the model’s behavior, making outputs more accurate, consistent, and aligned with specific functional and technical requirements.

At a technical level, fine-tuning leverages transfer learning to reuse the linguistic and reasoning capabilities acquired during pre-training while selectively adapting the model’s internal representations to a narrower task space. This makes it possible to achieve specialization without the cost, time, or data volume required to train a model from scratch. More importantly, it allows teams to encode expectations directly into model behavior rather than repeatedly enforcing them through prompts, post-processing, or manual review.

Let’s review five core benefits of LLM fine-tuning.

1. Higher accuracy through task-specific optimization

Fine-tuning an LLM improves accuracy by aligning the model’s output probabilities with the exact patterns required for a given task. Instead of relying on generic statistical associations, the model learns which signals matter and which can be ignored in a specific context. When trained on representative, high-quality examples, a fine-tuned model often outperforms a general-purpose model that relies solely on prompting, especially in edge cases that require precision rather than creativity.

2. Embedded domain knowledge

General models often struggle with specialized language because they treat domain-specific terms as low-frequency tokens rather than as structurally meaningful concepts. Fine-tuning an LLM corrects this by reinforcing how terminology is used, combined, and interpreted within a given domain. The result is better vocabulary coverage and improved semantic understanding of domain relationships, constraints, and implicit rules.

3. More predictable costs

Prompt-heavy systems incur both latency and cost overhead, especially when long system instructions are required to control behavior. Fine-tuning moves much of that behavioral logic into the model weights themselves. It reduces token usage at inference time, shortens response latency, and stabilizes cost profiles. In many cases, a smaller fine-tuned model can outperform a larger untuned model on a narrow task, making system performance more predictable and infrastructure requirements easier to plan.
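The token savings can be estimated with back-of-the-envelope arithmetic. Every number below is a placeholder chosen for illustration (request volume, prompt lengths); substitute measured values from your own traffic before drawing conclusions.

```python
def monthly_input_tokens(requests_per_month: int, system_tokens: int, user_tokens: int) -> int:
    """Total input tokens processed per month for a given prompt layout."""
    return requests_per_month * (system_tokens + user_tokens)

# Placeholder workload: 1M requests per month, 300 tokens of user input each.
REQUESTS = 1_000_000
USER_TOKENS = 300

# Prompt-heavy setup: a long system prompt carries the behavioral rules.
before = monthly_input_tokens(REQUESTS, system_tokens=1_200, user_tokens=USER_TOKENS)

# Fine-tuned setup: the rules live in the weights, so a short prompt suffices.
after = monthly_input_tokens(REQUESTS, system_tokens=100, user_tokens=USER_TOKENS)

savings = 1 - after / before
print(f"before: {before:,}  after: {after:,}  input tokens saved: {savings:.0%}")
```

Under these assumed numbers, input volume drops from 1.5 billion to 400 million tokens per month. The calculation covers input tokens only; output length, model size, and hosting costs would need to be added for a full comparison.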

4. Consistent tone, structure, and interaction patterns

Fine-tuning enables consistent output structure and response style across interactions. Rather than relying on prompts to enforce tone, formatting, or procedural steps, these expectations become part of the model’s learned behavior. This is crucial for systems that must generate responses in accordance with defined templates, escalation rules, or communication standards. Consistency reduces the need for downstream normalization and simplifies integration with other systems.

5. Reduced hallucinations

Hallucinations are often a symptom of ambiguity rather than ignorance. When a model is unsure which behavior is expected, it fills gaps probabilistically. Fine-tuning reduces this uncertainty by reinforcing what constitutes an acceptable response and what does not. By training on carefully curated examples, the model learns when to answer, when to defer, and how to stay within defined boundaries. This can significantly reduce the frequency and severity of hallucinations in constrained tasks.

Key challenges of LLM fine-tuning and how to address them in practice

Fine-tuning Large Language Models is a distinct engineering discipline with its own constraints, risks, and long-term implications. While the goal is to adapt a general-purpose model to a specific task or domain, the process introduces challenges that directly affect reliability, cost efficiency, and governance in production.

Overfitting and underfitting risks

Overfitting occurs when a fine-tuned model aligns too closely with a narrow training dataset and fails to generalize to real-world inputs. In production, this often appears as brittle behavior: the model performs well on familiar phrasing but breaks when inputs vary slightly. Mitigation requires disciplined validation practices, including early stopping based on validation loss, controlled learning rates, and regularization techniques such as dropout or weight decay. Underfitting is the opposite failure mode, where training signals are too weak to meaningfully influence model behavior, typically due to insufficient task signal or overly conservative hyperparameters.

Computational and resource constraints

Fine-tuning Large Language Models can be computationally expensive, requiring substantial GPU memory, long training cycles, and careful cost management. These constraints often limit iteration speed and experimentation, especially outside large research environments. Modern fine-tuning pipelines address this through quantization, mixed-precision training, gradient checkpointing, and memory-efficient optimizers. Techniques such as QLoRA enable effective fine-tuning on significantly smaller hardware footprints without materially compromising output quality.
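A rough memory estimate shows why these techniques matter. The sketch below uses a widely cited rule of thumb for mixed-precision training with an Adam-style optimizer: about 16 bytes per trainable parameter (2 for 16-bit weights, 2 for gradients, 12 for 32-bit master weights and optimizer states). Activations, framework overhead, and quantization metadata are ignored, and the 7-billion-parameter model and 40-million-parameter adapter are illustrative sizes, so treat the results as order-of-magnitude figures only.

```python
BYTES_PER_TRAINABLE_PARAM = 2 + 2 + 12   # weights + gradients + optimizer states
BYTES_PER_4BIT_PARAM = 0.5               # frozen base weights stored in 4 bits

def full_finetune_bytes(num_params: int) -> int:
    """Approximate training-state memory when every weight is updated."""
    return num_params * BYTES_PER_TRAINABLE_PARAM

def qlora_style_bytes(num_params: int, adapter_params: int) -> float:
    """Approximate memory with a frozen 4-bit base and small trainable adapters."""
    frozen_base = num_params * BYTES_PER_4BIT_PARAM
    adapters = adapter_params * BYTES_PER_TRAINABLE_PARAM
    return frozen_base + adapters

GB = 1e9
full = full_finetune_bytes(7_000_000_000)
light = qlora_style_bytes(7_000_000_000, adapter_params=40_000_000)
print(f"full fine-tuning: ~{full / GB:.0f} GB   4-bit base + adapters: ~{light / GB:.2f} GB")
```

Under these assumptions, the training state shrinks from roughly 112 GB to about 4 GB before activations, which is the difference between a multi-GPU cluster and a single accelerator.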

Data quality

A fine-tuned model can only be as good as its training data. Noisy labels, inconsistent formatting, or ambiguous task definitions lead to unstable or unpredictable behavior, even when training metrics appear strong. Effective fine-tuning pipelines invest heavily in data governance: clear annotation guidelines, reviewer calibration, dataset versioning, and systematic error analysis. Where labeled data is limited, semi-automated annotation workflows and carefully controlled synthetic data generation are often used to expand coverage without weakening the learning signal.
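One inexpensive audit is to look for identical inputs that carry different labels, a common sign of unclear annotation guidelines. The sketch below is a minimal version using only the standard library; the ticket texts and label names are invented for illustration.

```python
from collections import defaultdict

def find_label_conflicts(rows):
    """Return inputs that appear with more than one distinct label.

    Inputs are compared after lowercasing and collapsing whitespace.
    """
    labels_by_input = defaultdict(set)
    for text, label in rows:
        normalized = " ".join(text.lower().split())
        labels_by_input[normalized].add(label)
    return {
        text: sorted(labels)
        for text, labels in labels_by_input.items()
        if len(labels) > 1
    }

# Hypothetical labeled rows from two annotators.
rows = [
    ("Refund not received", "billing"),
    ("refund  not received", "complaint"),
    ("Where is my parcel?", "shipping"),
    ("Where is my parcel?", "shipping"),
]
conflicts = find_label_conflicts(rows)
print(conflicts)
```

Conflicts found this way are good candidates for reviewer calibration: either the guideline is ambiguous, or one of the labels is wrong and should be corrected before the next training run.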

Bias and ethical risk amplification

Fine-tuning can unintentionally amplify biases in the training data or reinforce undesirable behavioral patterns if feedback loops are poorly controlled. Mitigation requires proactive dataset audits, evaluation across sensitive dimensions, and policy-enforcement layers that filter inputs and outputs. Teams that fine-tune Large Language Models therefore treat ethical risk management as a core design requirement for responsible deployment.

Model drift

Even a well-tuned model will degrade over time as input distributions, terminology, and operational contexts evolve. Without monitoring, this drift often becomes visible only after downstream failures accumulate. Production-grade fine-tuning therefore includes continuous evaluation against reference prompts, sampling-based human review, and predefined thresholds that trigger retraining or dataset refreshes. In this sense, fine-tuning LLMs is an ongoing maintenance process embedded in the broader model lifecycle.
