Over the last few years, enterprises have invested heavily in artificial intelligence. Some of them are discovering the limits of relying on a single model. A standalone Large Language Model (LLM) can automate customer interactions, yet it cannot process images from a factory line. A vision model may detect defects but cannot forecast market demand. When every function depends on a single specialized model, the result is siloed solutions, isolated AI initiatives, high inference costs, fragmented compliance, and slower decision-making.

The solution is not to build ever-larger models, but to combine them. Multi-model AI systems bring together LLMs, computer vision, predictive analytics, and domain-specific models under a single framework. Let’s explore how multi-model AI can impact enterprise automation, where it delivers the most value, and what leaders need to know to adopt it effectively. If your organization is evaluating how to scale AI without escalating costs or compromising governance, talk to our experts.

What are multi-model AI systems?

Multi-model AI systems combine multiple specialized models into a single, orchestrated framework. Instead of expecting one system to do everything, enterprises align the strengths of many: LLMs for unstructured text, computer vision models for image and video data, and predictive algorithms for structured or time-series information. For enterprise digital transformation, this means companies can generate richer insights, make faster and more reliable decisions, and extend automation across functions without being constrained by the limitations of a single model.

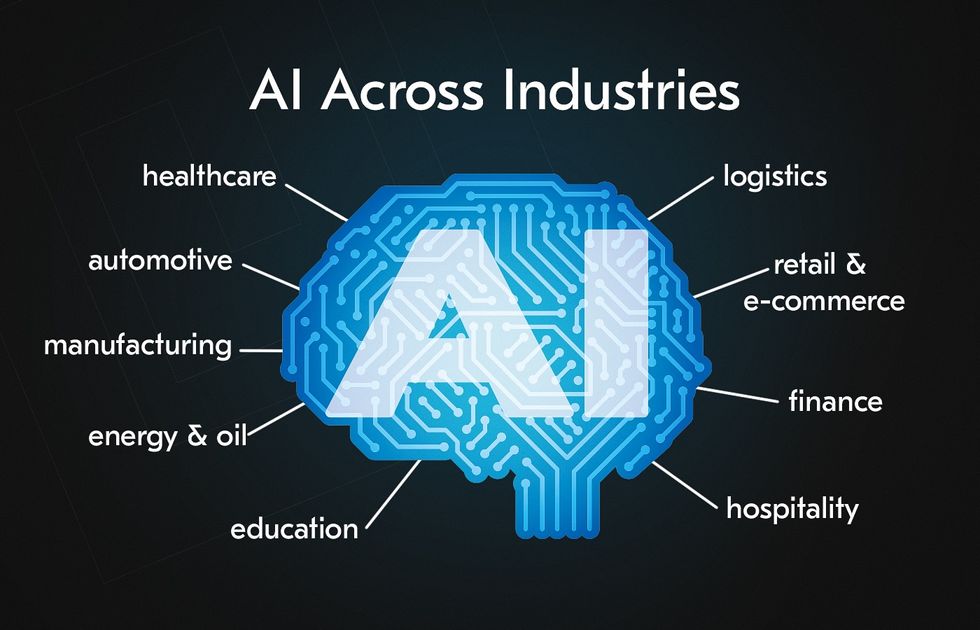

Why does this matter at the enterprise level? Because most critical processes are inherently multi-dimensional. Given that, let’s look at some industry applications:

Finance: Fraud detection and risk management cannot rely on transaction numbers alone; they also depend on language cues in communication and behavioral patterns over time.

Healthcare: A doctor diagnoses by examining scans, patient history, and lab results. Diagnostic systems can merge computer vision models analyzing X-rays and MRIs with LLMs processing clinical notes.

Logistics: A distribution planner cannot optimize routes without considering demand forecasts, weather updates, and real-time road conditions. Multi-model pipelines also enhance chatbot development in customer service, integrating voice, text, and transaction data for more accurate support and a higher degree of business process automation.

Why do enterprises need multi-model AI?

The cost trap of large models

Large Language Models are indispensable in text analysis and knowledge management. As the core of enterprise AI, however, they come at a high price. Inference costs alone can consume up to 90% of AI budgets. Should a model trained to parse millions of documents be used to classify routine transactions or perform sentiment analysis on short messages? Enterprises that continue this practice risk tying up resources in ways that are neither sustainable nor strategic.

Multi-model systems solve the problem by assigning the right model to the right task. Lightweight predictive or vision models handle routine or structured workloads, while LLMs are reserved for functions where their complexity truly adds value.
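The "right model for the right task" idea can be sketched as a small routing table. This is a minimal illustration, not a production design: the task names, the three stand-in models, and the relative cost units are all hypothetical placeholders for real inference endpoints and real pricing.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class ModelSpec:
    name: str
    cost_per_call: float  # hypothetical relative cost units, not real prices
    run: Callable[[str], str]


# Stand-in models: a real system would call actual inference endpoints here.
def keyword_sentiment(text: str) -> str:
    negative = {"late", "broken", "refund", "angry"}
    return "negative" if any(w in text.lower() for w in negative) else "positive"


def rule_based_classifier(text: str) -> str:
    return "card_payment" if "card" in text.lower() else "other"


def large_llm(text: str) -> str:
    return f"[LLM summary of {len(text.split())} words]"


# Routing table: lightweight models for routine tasks, the LLM only where needed.
ROUTES: Dict[str, ModelSpec] = {
    "sentiment": ModelSpec("keyword-sentiment", 0.01, keyword_sentiment),
    "transaction_class": ModelSpec("rule-classifier", 0.01, rule_based_classifier),
    "contract_summary": ModelSpec("large-llm", 1.00, large_llm),
}


def route(task: str, payload: str) -> Tuple[str, str, float]:
    """Return (model name, output, cost) for the model registered for the task."""
    # Unknown tasks fall back to the most capable (and most expensive) model.
    spec = ROUTES.get(task, ROUTES["contract_summary"])
    return spec.name, spec.run(payload), spec.cost_per_call
```

Calling `route("sentiment", "My parcel arrived late and broken")` is served by the cheap keyword model, while `route("contract_summary", ...)` is the only path that reaches the LLM stand-in.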

Narrow specialization, fragmented outcomes

AI models are, by design, specialists. A vision system may excel at detecting anomalies in a manufacturing line, but it cannot forecast demand. A predictive model may be strong at risk scoring, but cannot interpret customer complaints. How many silos can an enterprise sustain before duplication of effort outweighs the benefit of specialization?

Multi-model AI breaks this cycle by enabling integration. In healthcare, for example, radiology images can be analyzed alongside clinical records and lab data to deliver a more complete diagnosis.

Regulatory and governance pressure

Can a single-model system consistently provide traceable outputs? In practice, often not. Multi-model systems introduce redundancy and cross-validation, allowing one model’s outputs to be checked against another’s. Implemented well, multi-model AI strengthens audit trails and improves bias detection.

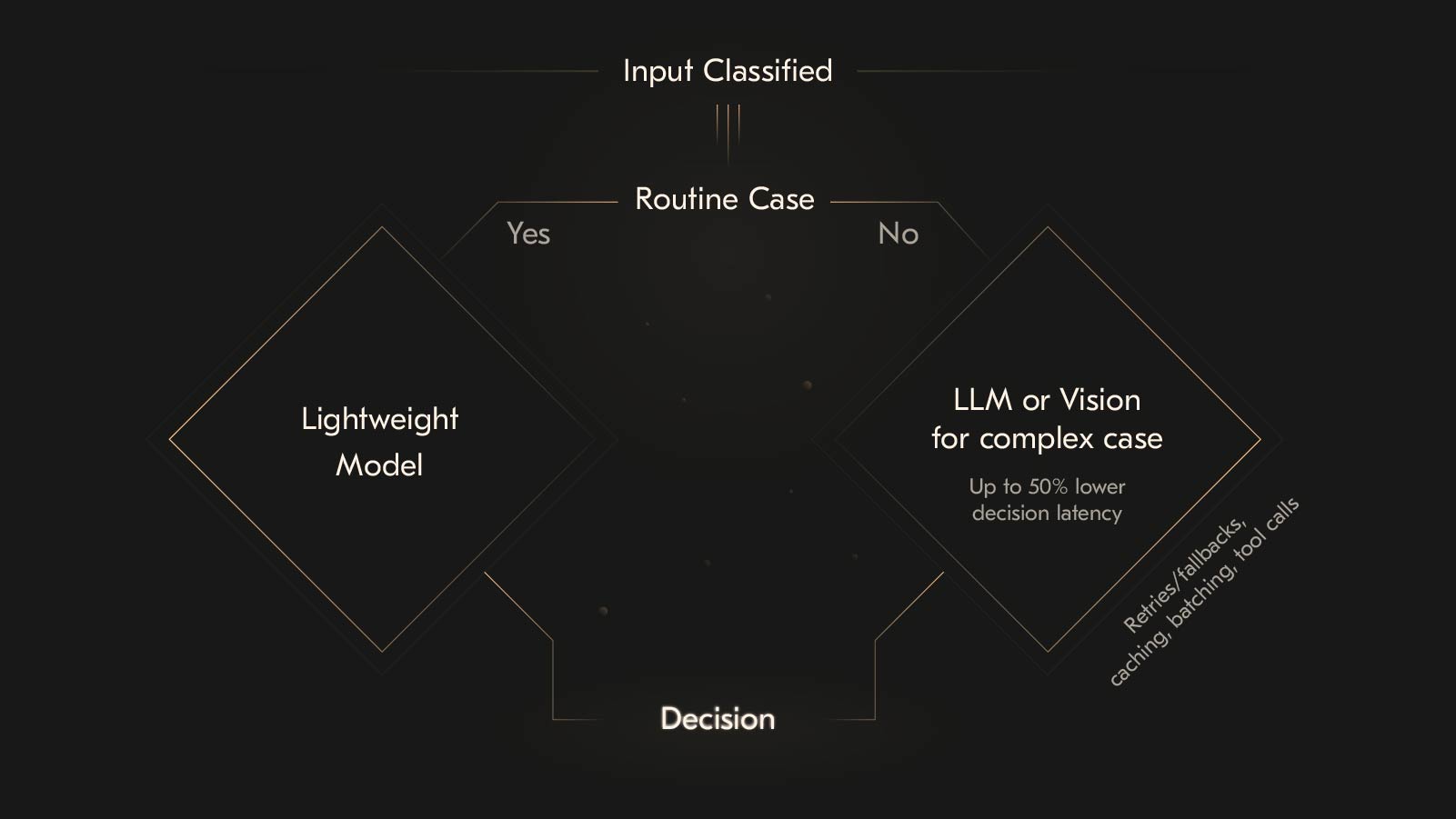

Decision speed in critical processes

Even seconds of delay can translate into lost revenue or heightened risk. Multi-model AI distributes workloads strategically: lightweight models handle routine checks, and heavier models intervene only in complex cases. This layered approach has cut decision latency by up to 50%, according to the International Journal of Scientific Research in Computer Science, giving enterprises more reliable logistics routing and quicker responses to operational risks.
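One common way to implement this kind of layering is a confidence cascade: the cheap model answers when it is confident, and the expensive model is called only otherwise. The sketch below assumes two hypothetical scoring functions and an illustrative threshold of 0.9; none of these values come from the study cited above.

```python
from typing import Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def light_model(amount: float) -> Prediction:
    """Hypothetical cheap check: confident at the extremes, unsure in between."""
    if amount < 100:
        return "approve", 0.98
    if amount > 10_000:
        return "review", 0.95
    return "approve", 0.60


def heavy_model(amount: float) -> Prediction:
    """Hypothetical expensive model, called only when the light model is unsure."""
    return ("review", 0.90) if amount > 5_000 else ("approve", 0.92)


def cascade(amount: float, threshold: float = 0.9) -> Tuple[str, str]:
    """Return (label, which tier answered)."""
    label, confidence = light_model(amount)
    if confidence >= threshold:
        return label, "light"      # routine case: stop early
    label, _ = heavy_model(amount)
    return label, "heavy"          # complex case: escalate
```

Latency savings in such a design depend on how often the first tier can answer on its own, so the threshold is a tuning parameter rather than a fixed constant.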

Clear ROI evidence

The business case for multi-model AI is already visible in the numbers. A logistics provider reduced delivery delays after integrating predictive demand models with vision systems that analyze real-time traffic. A financial institution cut false positives in fraud detection, saving investigation costs and improving customer satisfaction.

Key components of multi-model AI systems

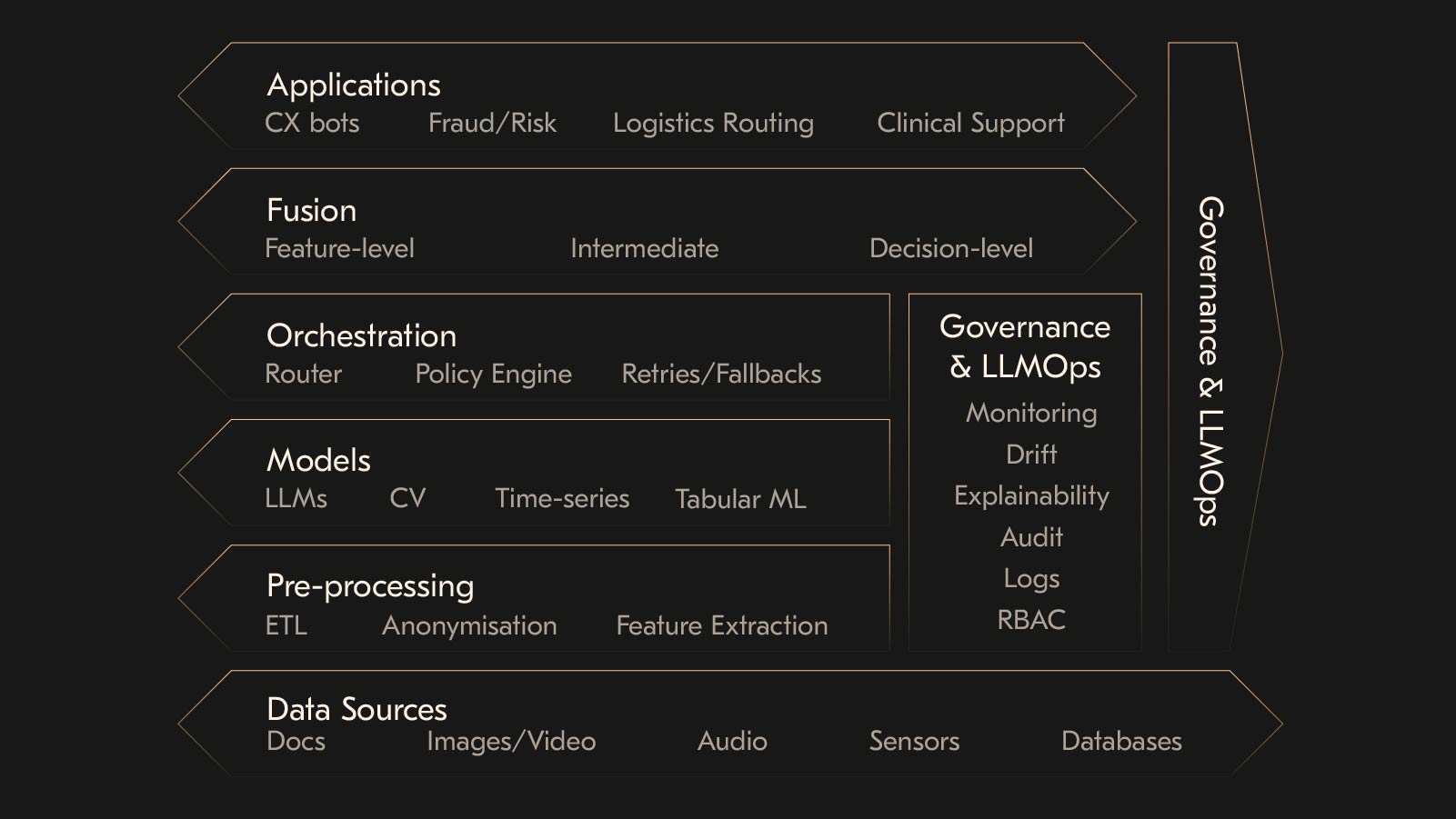

Designing and operating multi-model AI systems in an enterprise context requires more than stacking different models side by side. Below are the core components that define enterprise-grade multi-model AI scalability solutions.

AI orchestration

Orchestration is the operational core of multi-model AI. It ensures that different models (LLMs, computer vision systems, and predictive engines) are connected in a way that reflects business workflows. In practice, AI orchestration resembles a layered pipeline: raw inputs are preprocessed, routed to modality-specific models, then brought together and consolidated into an actionable decision.

Why does this matter? Because most enterprise processes are multi-dimensional. Take manufacturing, for example: an orchestrated system can combine camera-based quality checks, vibration data from machinery sensors, and spoken operator reports. Instead of analyzing these in isolation, orchestration integrates them into one decision pipeline.
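The layered pipeline described above (preprocess, route by modality, consolidate) might look roughly like the following sketch for the manufacturing case. The three models are toy stand-ins that return a risk score between 0 and 1, and the averaging rule and the 0.5 stop threshold are assumptions chosen only for illustration.

```python
from typing import Any, Callable, Dict, List


def preprocess(item: Dict[str, Any]) -> Dict[str, Any]:
    """Normalize a raw input; here only text payloads are trimmed and lowercased."""
    data = item["data"]
    if isinstance(data, str):
        data = data.strip().lower()
    return {"modality": item["modality"], "data": data}


# Hypothetical modality-specific models, each returning a risk score in [0, 1].
def vision_model(pixels: List[float]) -> float:
    return sum(pixels) / len(pixels)            # mean "defect intensity"


def sensor_model(vibration: List[float]) -> float:
    return min(1.0, max(vibration) / 10.0)      # peak vibration scaled to [0, 1]


def text_model(report: str) -> float:
    return 1.0 if "noise" in report or "leak" in report else 0.0


MODELS: Dict[str, Callable[[Any], float]] = {
    "image": vision_model,
    "sensor": sensor_model,
    "text": text_model,
}


def orchestrate(inputs: List[Dict[str, Any]], stop_threshold: float = 0.5) -> Dict[str, Any]:
    """Preprocess each input, route it by modality, and consolidate into one decision."""
    scores: Dict[str, float] = {}
    for raw in inputs:
        item = preprocess(raw)
        scores[item["modality"]] = MODELS[item["modality"]](item["data"])
    combined = sum(scores.values()) / len(scores)   # simple average as the consolidation rule
    decision = "stop_line" if combined >= stop_threshold else "continue"
    return {"scores": scores, "combined": combined, "decision": decision}
```

A real orchestrator would add retries, timeouts, and per-model versioning, but the control flow stays the same: one entry point, several specialized models, one consolidated output.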

LLMOps and model governance

The more models an enterprise deploys, the greater the need for oversight. Model governance and LLMOps for enterprises guarantee that multi-model systems are secure, transparent, and compliant with regulations. AI model governance frameworks cover several urgent areas:

Protecting sensitive information, whether patient records in healthcare or customer data in banking, across every pipeline stage.

Continuously auditing models to detect discriminatory patterns and implementing corrective mechanisms.

Applying explainable AI techniques to make decisions interpretable for auditors, regulators, and internal stakeholders.

Monitoring performance, detecting drift, versioning models, and maintaining audit trails to prove accountability.

Governance guidelines track data lineage, monitor model drift, enforce fairness constraints, and generate audit logs that regulators in finance, healthcare, or insurance can scrutinize. In practice, this means explaining why a fraud detection system flagged a transaction, or why a clinical decision support tool recommended a particular treatment. Multi-model AI may scale technically without robust governance, but will fail under regulatory scrutiny.
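To make the audit-log idea concrete, here is a minimal sketch of a tamper-evident decision record. The field names, the hash-chaining scheme, and the choice to store a digest instead of raw inputs are illustrative assumptions, not a regulatory requirement or a specific product's format.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List


def _digest(payload: Any) -> str:
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()


def audit_record(model: str, version: str, inputs: Dict[str, Any], output: Any,
                 reasons: List[str], prev_hash: str = "") -> Dict[str, Any]:
    """Build one audit entry; chaining hashes makes later edits detectable."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "version": version,
        "input_digest": _digest(inputs),   # keeps raw inputs out of the log itself
        "output": output,
        "reasons": reasons,                # human-readable explanation for auditors
        "prev_hash": prev_hash,
    }
    body["hash"] = _digest(body)           # computed before the "hash" key exists
    return body


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Check that every entry is unmodified and links to the previous one."""
    prev = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
            return False
        prev = entry["hash"]
    return True
```

Recording the model version and the reasons alongside each output is what later allows a team to explain why a specific transaction was flagged.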

Inference optimization

If every decision requires simultaneous processing across multiple large models, latency grows and infrastructure costs escalate rapidly. Multi-model AI is computationally demanding because it processes multiple modalities in parallel. Inference optimization is therefore critical to ensure systems remain viable under enterprise-scale workloads.

Recent benchmarks show that modern multi-modal frameworks can achieve average latencies of 38 ms for complex tasks, and future architectures are expected to reach 23.5 ms. Relative to 38 ms, that is a reduction of roughly 38%, so the 71% improvement reported alongside these figures must be measured against a slower baseline.

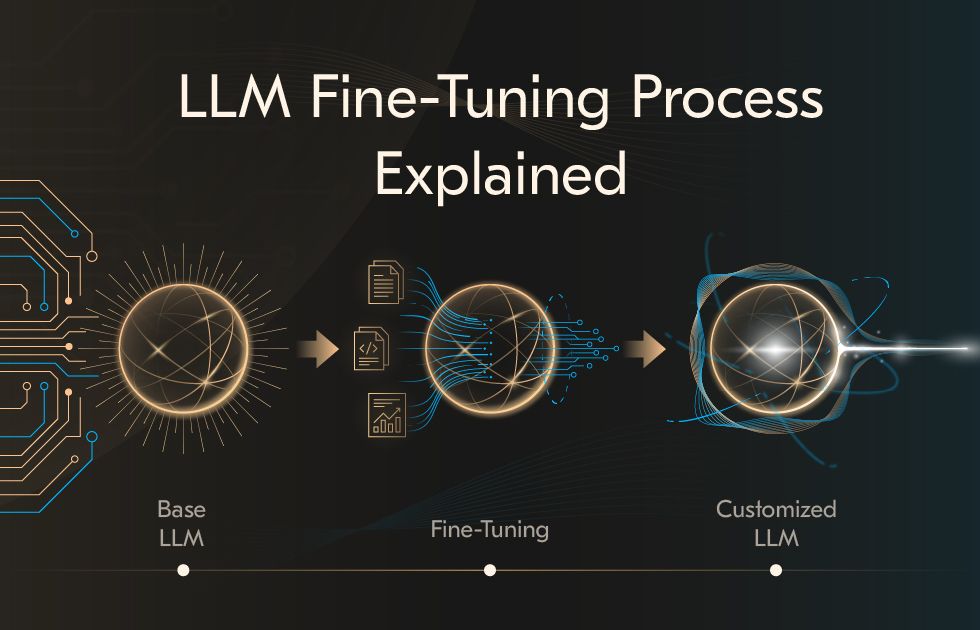

Transfer learning and fine-tuning

Multi-model systems typically rely on large foundation models pre-trained on vast, heterogeneous datasets covering text, images, audio, and structured signals. These models are not inherently optimized for specific domains or tasks. Transfer learning and fine-tuning address this gap for enterprise AI automation.

Transfer learning reuses pre-trained representations to accelerate learning in related tasks. Instead of starting from random initialization, a model begins with parameters already shaped by general-purpose knowledge.

Fine-tuning takes this further by refining the pre-trained models with targeted datasets. This process aligns the system with domain-specific vocabulary, data distributions, and operational requirements.
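The mechanics can be shown with a deliberately tiny, pure-Python toy: a frozen "backbone" supplies features, and only a small task-specific head is trained. The backbone weights here are hand-picked stand-ins for pre-trained parameters, and the dataset, learning rate, and epoch count are arbitrary assumptions; real systems would use a deep learning framework and an actual foundation model.

```python
import math
from typing import List, Sequence, Tuple

# "Pre-trained" backbone weights: hand-picked stand-ins, never updated below.
BACKBONE_W = [[1.0, 1.0], [1.0, -1.0]]


def backbone(x: Sequence[float]) -> List[float]:
    """Frozen feature extractor: one linear layer followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_W]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(data: List[Sequence[float]], labels: List[int],
                   epochs: int = 200, lr: float = 0.5) -> Tuple[List[float], float]:
    """Train only a logistic-regression head on top of the frozen features."""
    feats = [backbone(x) for x in data]     # features are computed once and reused
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            err = sigmoid(w[0] * f[0] + w[1] * f[1] + b) - y   # gradient of the log loss
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(x: Sequence[float], w: List[float], b: float) -> int:
    f = backbone(x)
    return int(sigmoid(w[0] * f[0] + w[1] * f[1] + b) >= 0.5)


# Small "domain" dataset: label is 1 when the two inputs sum to a positive number.
DATA = [(1, 1), (2, 0.5), (0.5, 1), (-1, -1), (-2, 0.5), (-0.5, -1)]
LABELS = [1, 1, 1, 0, 0, 0]
```

Because the backbone is frozen, only three numbers (two head weights and a bias) are learned, which is why this style of adaptation needs far less data and compute than training from scratch.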

Multi-model integration

The most visible component of multi-model AI is integration itself: combining different modalities. If transfer learning aligns individual models to context, multi-model integration means these models function together as part of a cohesive system. AI integration addresses the challenge of combining insights from language, vision, numerical, and sensor-based models into a single, coherent output that enterprises can use.

AI integration mechanisms can be structured along several levels:

Feature-level fusion, where raw or early-processed data representations are merged to allow deep cross-modal interaction.

Intermediate fusion, aligning latent representations to capture correlations and dependencies between modalities.

Decision-level fusion, where outputs from separate models are aggregated for the final prediction or classification. This level is often preferred in risk-sensitive contexts requiring independent validation.
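Two of these levels are easy to contrast in code. The sketch below shows feature-level fusion as simple concatenation and decision-level fusion as a weighted average with a disagreement check; intermediate fusion is omitted because it depends on a learned alignment model. The function names, weights, and tolerance are assumptions made for illustration.

```python
from typing import Dict, List, Sequence


def feature_level_fusion(text_vec: Sequence[float], image_vec: Sequence[float]) -> List[float]:
    """Concatenate per-modality features so one downstream model sees both."""
    return list(text_vec) + list(image_vec)


def decision_level_fusion(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of scores produced independently by each model."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


def models_disagree(scores: Dict[str, float], tolerance: float = 0.4) -> bool:
    """Flag cases where independent models diverge enough to warrant human review."""
    return max(scores.values()) - min(scores.values()) > tolerance
```

For example, with scores of 0.8 (text), 0.6 (vision), and 0.1 (tabular) and the tabular model weighted twice as heavily, the fused score is about 0.4, and the spread of 0.7 between models would trigger the disagreement flag.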

Multi-model integration increases system reliability by allowing models to cross-validate outputs. It also enhances adaptability, because new modalities can be added to existing pipelines without redesigning the entire system.

How much does multi-model AI systems implementation cost?

Direct cost drivers

Infrastructure demands

Running multi-modal AI systems requires high-performance hardware that simultaneously processes text, images, audio, and video. Beyond raw compute, additional storage capacity, high-throughput networking, and advanced cooling systems further increase capital and operational outlays.

Training expenses

Costs to train multi-modal models can run into the millions of dollars for organizations that train in-house. Per-model costs are falling quickly: according to McKinsey, a model that cost around $100,000 to train in 2022 could cost nearly $2,000 in 2025 thanks to advances in optimization. But while the per-unit cost of computing has declined, the scale of models continues to grow, keeping overall training budgets high.

Inference costs

Serving multi-modal models in production introduces another layer of expense. Inference workloads are significantly more demanding than those of unimodal models, and the cost per token or per processed input is often higher. Achieving acceptable latency levels typically requires over-provisioning compute resources, further escalating infrastructure costs.

Hidden and indirect costs

Integration with legacy systems

Few organizations operate on modern, uniform infrastructure. Custom middleware, APIs, and rigorous testing are typically required. These integration efforts can equal or even surpass the original development budget.

Security overheads

More data flows, endpoints, and interactions between components increase the potential vectors for intrusion. In a large enterprise, protecting sensitive inputs and outputs demands robust encryption, continuous monitoring, and advanced intrusion detection systems.

Compliance

Multi-modal systems process highly sensitive data that falls under strict regulatory standards. Compliance with these standards requires ongoing audits, documentation, and model interpretability mechanisms.

Operational support

Beyond infrastructure, building and maintaining multi-model AI systems demands specialized human capital. A team of skilled ML engineers, data scientists, and DevOps professionals is required to sustain operations.

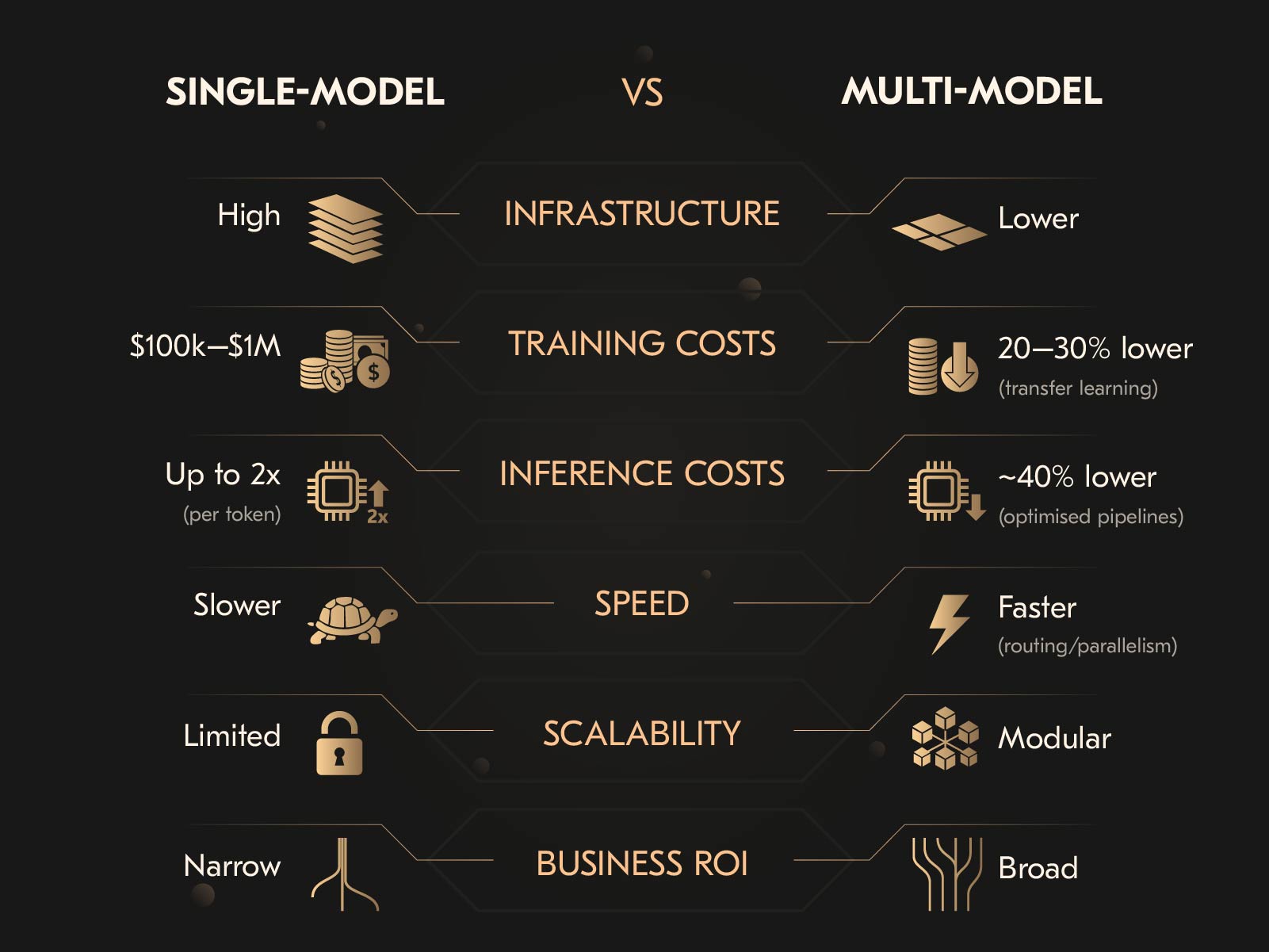

| Factor | Standalone LLM | Multi-model AI system |
| --- | --- | --- |
| Infrastructure | High: large clusters for single workloads | Lower: orchestration reduces redundant compute |
| Training costs | ~$100K–$1M depending on scale | 20–30% lower with transfer learning |
| Inference costs | Up to 2× higher per token | Optimized pipelines cut costs by ~40% |
| Speed | Slower: inference latency increases as models grow in size | Faster: optimized routing and orchestration reduce latency |
| Scalability | Limited: retraining needed for new tasks | Modular: new models added without retraining |
| Business ROI | Narrow: effective only in single domains | Broad: cross-modal accuracy, cost savings ~30% |

What are the multi-model AI implementation challenges?

Data synchronization

First comes the question: how do you make different data types speak the same language? Text, images, audio, and sensor data each carry information in their own structures. Even slight misalignments, such as a timing offset between a video frame and a sensor reading, can distort meaning and degrade model performance.
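For time-based streams, one basic synchronization step is pairing each event in one stream with the nearest event in another, and refusing to pair when the gap is too large. The sketch below assumes sorted timestamps in seconds and a 50 ms tolerance; both the data layout and the tolerance are illustrative choices.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp in seconds, sensor value)


def nearest_reading(readings: List[Reading], t: float, tolerance: float) -> Optional[Reading]:
    """Return the reading closest in time to t, or None if none is within tolerance.

    Assumes readings are sorted by timestamp.
    """
    times = [ts for ts, _ in readings]
    i = bisect_left(times, t)
    candidates = readings[max(0, i - 1): i + 1]   # neighbours just before and after t
    best = min(candidates, key=lambda r: abs(r[0] - t), default=None)
    if best is None or abs(best[0] - t) > tolerance:
        return None
    return best


def align(frame_times: List[float], sensor: List[Reading],
          tolerance: float = 0.05) -> List[Tuple[float, Optional[Reading]]]:
    """Pair each camera frame timestamp with the nearest sensor reading, if any."""
    return [(t, nearest_reading(sensor, t, tolerance)) for t in frame_times]
```

Returning `None` for unmatched frames, rather than silently using a stale reading, keeps misaligned pairs out of downstream models.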

Data privacy

Cross-modal AI integration increases the likelihood of re-identification, which complicates data protection. Security strategies must therefore operate at multiple layers: data encryption in transit and at rest, secure model endpoints, adversarial defense mechanisms, and strong access controls under AI model governance. The risk of data breaches escalates as the number of modalities grows.

Data quality

While text and image datasets are abundant, domain-specific multimodal datasets remain scarce and unevenly distributed. Industry applications face shortages of high-quality, diverse, and well-labeled multimodal data. Systems risk overfitting to narrow scenarios without robust datasets, limiting generalization and introducing bias.

Hallucinations

Even with quality data, multi-model systems are prone to another risk: hallucinations.

The error can cascade across the pipeline when one modality produces incorrect outputs. For instance, a transcription error in speech data may distort NLP processing, affecting downstream decision layers.

Human-AI interaction

Effective human-AI interfaces must integrate text, vision, and audio outputs in intuitive and actionable ways. Poorly designed interfaces can undermine trust and limit adoption, even if the underlying models perform well. Designing effective multimodal interaction frameworks requires collaboration among AI engineers, UX specialists, and domain experts.

Best practices for building multi-model AI in enterprises

Start with Proof of Concept

A Proof of Concept provides a controlled environment to validate data readiness, pipeline stability, and cross-modal performance. Partnering with experienced providers of AI & ML consulting helps organizations design these pilots strategically and scale them into production.

Use hybrid models

Multi-model AI development does not need to be a rigid choice between proprietary solutions and open-source frameworks. In our implementation experience, a hybrid approach often proves most effective for enterprises. Combining the two lets organizations benefit from the adaptability of open ecosystems while keeping proprietary components where they fit. Hybrid architectures also offer a protective layer against vendor lock-in for critical systems.

Establish a robust CI/CD pipeline for AI

Once models move into production, the difficulty shifts from development to maintenance. A robust CI/CD pipeline for AI becomes integral. These pipelines automate testing, deployment, and rollback of models, which helps keep operations consistent across environments.
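The three automated steps (test, deploy, rollback) can be sketched with an in-memory stand-in for a model registry. The class, the metric names, and the thresholds below are hypothetical; an actual pipeline would wire the same checks into its CI system and a real registry.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelRegistry:
    """Tiny in-memory stand-in for a model registry with deployment history."""
    history: List[str] = field(default_factory=list)

    @property
    def live(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def deploy(self, version: str) -> None:
        self.history.append(version)

    def rollback(self) -> Optional[str]:
        """Revert to the previous version, if there is one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.live


def passes_gate(candidate: Dict[str, float], baseline: Dict[str, float],
                max_accuracy_drop: float = 0.01, max_latency_ms: float = 200.0) -> bool:
    """Automated test: allow deployment only if quality and latency hold up."""
    return (candidate["accuracy"] >= baseline["accuracy"] - max_accuracy_drop
            and candidate["latency_ms"] <= max_latency_ms)


def release(registry: ModelRegistry, version: str,
            candidate: Dict[str, float], baseline: Dict[str, float]) -> bool:
    """Deploy the candidate only when it passes the gate; report what happened."""
    if not passes_gate(candidate, baseline):
        return False
    registry.deploy(version)
    return True
```

In a multi-model system the same gate would typically run per model and again on the combined pipeline, since a change that is safe for one model can still degrade the fused output.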

Monitor and audit models

Deployment is not the end of the lifecycle. Engineers responsible for multi-model systems must monitor drift, bias, and unexpected failures across modalities. Effective oversight demands that the team can trace decisions through preprocessing, feature fusion, and model outputs.
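One widely used drift signal is the Population Stability Index (PSI), which compares the distribution of a feature or score at training time with what the model sees in production. The sketch below is a plain-Python version under simple assumptions: fixed bin edges supplied by the caller, values outside the edges ignored, and a small smoothing constant to avoid division by zero.

```python
import math
from typing import List, Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values per bin [edges[i], edges[i+1]); the last bin includes its right edge."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts) or 1
    return [c / total for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e = histogram(expected, edges)
    a = histogram(actual, edges)
    return sum((ai - ei) * math.log((ai + eps) / (ei + eps)) for ei, ai in zip(e, a))
```

A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, though the right alert threshold depends on the feature and the business context. Running the same check per modality helps localize which input stream has drifted.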

Build cross-functional teams

Multi-model AI sits at the intersection of engineering, data science, and business. A single-discipline view creates blind spots, such as technical feasibility without business alignment, or compliance frameworks without operational scalability. Cross-functional teams address this by combining IT infrastructure, data science, and business strategy expertise.

We, at Acropolium, have embedded this cross-functional approach into every project, aligning technical innovation with regulatory and business objectives. Our teams have spent over two decades building complex systems for different industries.

6 tips on how to choose the right AI development partner

Selecting an AI development partner for multi-model systems sets the foundation for how effectively an organization can operationalize advanced AI. Below are the essential points to verify with a vendor, followed by a checklist of opening questions.

1. Experience in multi-model integration

The first measure of a partner is the depth of technical knowledge. Multi-model AI requires proficiency across AI domains and, more importantly, in integrating them into cohesive systems. It is not enough to have siloed expertise in each field. What matters is proven experience in combining these modalities.

2. LLMOps expertise

Multi-model systems also demand advanced LLMOps for enterprises: automated CI/CD pipelines for model deployment, monitoring tools, and governance frameworks that provide transparency across interacting models. Look for a vendor that adapts these practices to the specific challenges of multi-model pipelines.

3. Data management capabilities

AI systems are only as strong as the data on which they are trained. A reliable AI agent development partner should demonstrate sophisticated approaches to curating data across modalities, such as aligning text with video or synchronizing sensor signals with audio.

4. Ethical governance and compliance

A credible partner will not merely consider ethics and compliance but will weave them into system design. Governance requirements include securing sensitive data through encryption and access controls, and embedding bias detection and fairness techniques into training.

5. Scalable infrastructure and operational readiness

Training multi-model architectures can cost millions of dollars in compute, and inference workloads are often up to twice as expensive per token as text-only models. A reliable vendor should provide scalable infrastructure and, even more, optimize it through distributed training, pipeline parallelism, and inference compression.

6. Proven enterprise automation cases

The most credible partners bring a portfolio of business process automation with AI cases. Whether streamlining document-heavy processes, augmenting AI-powered decision-making with real-time multi-modal insights, or building adaptive workflows that reduce operational latency, these cases validate the ability to build complex AI infrastructure into different operational systems.

When assessing a partner, decision-makers should press beyond claims of technical expertise. Asking the right questions demonstrates whether the organization has the depth to deliver.

How do you reduce the cost of inference? A strong answer explains how they optimize inference pipelines with compression, dynamic routing, and distributed architectures to cut latency and infrastructure costs.

Do you have cases in regulated industries? A strong answer highlights real-world finance, healthcare, or logistics projects and shows how they navigated strict compliance requirements.

How do you approach AI governance? A strong answer details frameworks for bias auditing, transparency, and compliance monitoring embedded across the whole model lifecycle.

What is your approach to data alignment and synchronization? A strong answer demonstrates how they handle heterogeneous data streams to achieve temporal and semantic alignment across modalities.

How do you ensure model scalability without cost escalation? A strong answer outlines AI scalability solutions for distributed training, inference growth, and infrastructure scaling.

What monitoring and auditing mechanisms do you provide post-deployment? A strong answer shows how they track drift, bias, and compliance continuously, with automated reporting and retraining strategies.

These questions are a small checklist to evaluate an AI development partner’s expertise and experience. At Acropolium, we bring over two decades of engineering excellence and a strong focus on AI, with projects ranging from modernizing a transportation management system with AI to building AI-powered fraud detection software for banking. Our experience spans regulated domains such as predictive analytics in healthcare, intelligent automation for hospitality with hotel self-check-in kiosks, and smart hotel room management.

With 400+ delivered applications, 148 partners and customers, and long-term collaborations that include Fortune 500 companies and startups grown into unicorns, we have built solutions that stand the test of scale, compliance, and complexity. Partner with us to build a scalable and future-proof multi-model AI system.
