Most organizations that adopted chatbots in earlier waves now face a similar realization: the chatbot works, but it doesn’t scale. It answers common questions, handles predictable workflows, and may even reduce service volume. But it doesn’t improve over time, doesn’t adapt to new conditions, and doesn’t reliably contribute to measurable business outcomes.

People no longer tolerate waiting in queues, repeating information across channels, or browsing knowledge bases to find what should be available instantly. The introduction of retrieval-augmented generation (RAG) was a turning point. It allowed chatbots to ground responses in verified internal knowledge rather than relying solely on a model’s reasoning. Suddenly, chatbots could provide accurate, context-aware answers and support real operational workflows. But RAG alone does not create a reliable system.

Without defined chatbot KPIs, governance, and accountability, the system gradually drifts: responses become inconsistent, knowledge becomes outdated, and trust declines. In 2026, a chatbot strategy determines what the chatbot is responsible for, how it learns, how it is monitored, and how its behavior is changed over time.

This guide focuses on how to build that foundation: starting with clear goals, measurable outcomes, and a governance model for a consistent, safe, and valuable enterprise chatbot strategy.

What is enterprise chatbot strategy in 2026?

An enterprise chatbot strategy is a structured plan for how conversational systems support business objectives, how they are architected and governed, and how their performance is measured over time. It aligns business goals, technical architecture, data management, security controls, operating responsibilities, and KPI frameworks into a coherent model that can be managed and scaled.

Enterprise chatbot development becomes strategic when its purpose, behavior, data access, and lifecycle are defined deliberately and in a repeatable way. The strategy establishes:

What the chatbot is expected to achieve (e.g., reduced support workload, improved onboarding efficiency, increased conversion precision).

How it is built and deployed (architecture, integrations, security boundaries).

How it evolves (change control, continuous evaluation, retraining).

How success is measured (operational, financial, and user impact chatbot KPIs).

A durable strategy rests on four interdependent pillars: people, process, technology, and data. Each must be defined before deployment and actively managed afterward.

People

The organizational structure behind the chatbot determines how consistently it can be developed, maintained, and improved. A clearly identified product owner is responsible for prioritization and roadmap decisions, ensuring the system evolves intentionally instead of reactively. The objective is to establish clear responsibility boundaries and repeatable operational routines.

Process

The process component defines how the system behaves and evolves over time. Conversation patterns, escalation logic, and fallback rules need to be specified to ensure users receive consistent support. Any modification, whether updating prompts, adding new intents, or integrating an additional data source, should follow a structured change control process to avoid unexpected shifts in system behavior.

Technology

The technical architecture establishes how well the chatbot scales, how secure it is, and how feasible it will be to adapt over time. Most enterprise deployments now rely on hybrid conversational architecture, using retrieval-grounded context to maintain factual accuracy while leveraging generative models for natural language fluency.

Data

Data determines whether the chatbot can produce reliable, context-aware responses. The knowledge sources it relies on must be authoritative and maintained, with clear ownership for content updates and versioning. Retrieval and indexing processes should be structured so that changes are traceable and reversible. Data quality directly influences response reliability; outdated or inconsistent knowledge leads to inconsistent or incorrect answers, regardless of model capability.

What are the business use cases of applying a chatbot strategy?

Customer operations

In customer-facing environments, the primary objective is to handle routine queries at scale while preserving service quality. Chatbots can resolve a substantial share of repetitive inquiries, particularly when combined with structured flows and retrieval from internal knowledge sources. When these interactions are automated, support teams see measurable improvements in operational performance:

Response latency decreases because customers receive immediate answers rather than waiting in the queue.

Average handling time is reduced when the chatbot collects initial details before escalation or resolves the inquiry entirely.

First contact resolution improves when responses are grounded in a consistently maintained knowledge base.

24/7 availability ensures coverage during off-hours and peak surges without staff fluctuation.

Guided discovery and conversion support

In sales and acquisition contexts, chatbots function as structured decision-support layers. They improve the quality and timing of engagements. Key applications include:

Lead qualification: Collecting intent signals and segmenting prospects before routing to sales reduces cycle time and improves lead-to-opportunity conversion.

Guided product discovery: By narrowing choices based on stated criteria, chatbots reduce cognitive effort for the customer.

Upsell and cross-sell: When connected to CRM and product data, the system can reference prior behavior, contract tier, usage, or purchasing history to recommend appropriate next steps.

Employee productivity

Internally focused chatbots are designed to reduce friction in daily operational workflows. Large organizations often face a common issue: employees spend time searching for information, confirming policy interpretations, or submitting routine service requests.

Effective internal chatbots address these bottlenecks by:

Answering policy and procedure questions from a single maintained source.

Performing structured IT or HR requests (e.g., password resets, onboarding steps, benefits lookup).

Surfacing institutional knowledge that may exist across multiple document stores.

Compliance

In regulated sectors, the role of conversational systems expands from efficiency to risk control. Structured chatbots can enforce consistent language, ensure that only approved guidance is shared, and prevent unsupported claims or unauthorized communication. Logging and traceability provide a centralized audit trail, which is critical when demonstrating AI compliance, investigating incident histories, or identifying systemic knowledge gaps.

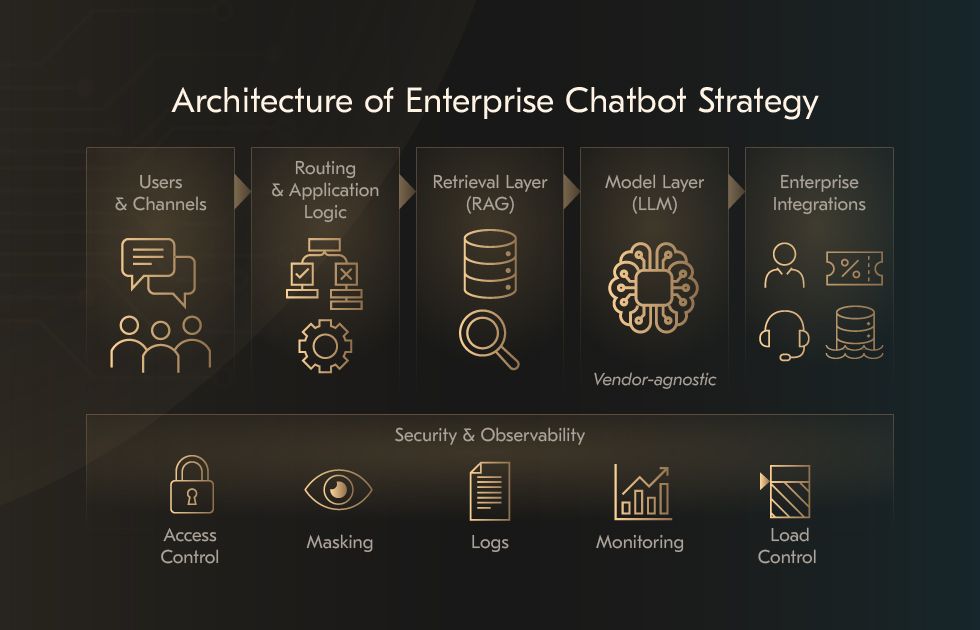

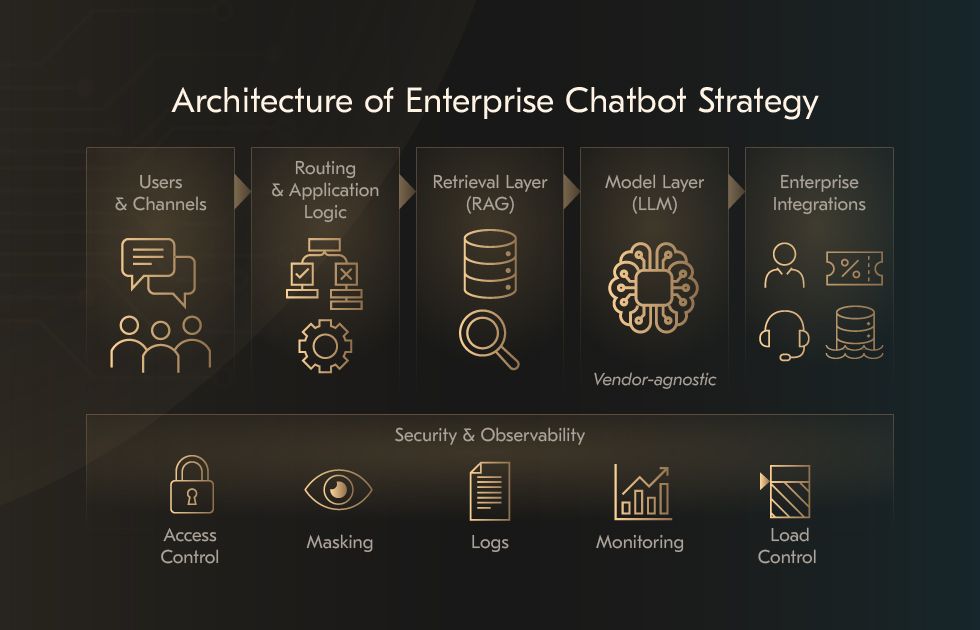

What is the architecture of enterprise chatbot strategy?

The architecture of an enterprise chatbot determines how reliable it will be in daily use, how easily it can be expanded to more workflows, and whether it will be maintainable over time. The key architectural decisions are about data access, system boundaries, and how the chatbot interacts with the organization’s core applications. A sustainable approach favors modularity, traceability, and the ability to adjust components without rebuilding the system.

The architecture should remain vendor-agnostic, enabling the organization to replace the underlying LLM without reworking integrations. Retrieval, routing, and application logic should be separated from the model layer to avoid lock-in.
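One way to keep the model layer replaceable is to have application code depend on a narrow interface instead of a vendor SDK. The sketch below is illustrative only: `TextModel`, `EchoModel`, `UpperModel`, and `answer` are hypothetical names, and no real provider API is called.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface: the only thing application code knows about a model."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for one vendor adapter (no real API call is made)."""

    def generate(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UpperModel:
    """Stand-in for a second vendor adapter with different behavior."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def answer(question: str, context: str, model: TextModel) -> str:
    # Retrieval and routing happen before this call; only the final
    # generation step touches the model, so adapters can be swapped.
    prompt = f"Context: {context}\nQuestion: {question}"
    return model.generate(prompt)
```

Swapping `EchoModel()` for `UpperModel()` changes the output but not the calling code, which is the property the vendor-agnostic requirement is after.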

Most organizations evaluate three architectural paths:

Platform-based

Useful when speed to deployment is a priority and the expected use cases are standardized. These systems offer pre-built conversation flows and connectors, but they are limited when deeper workflow automation or data-sensitive logic is required. They work best when the scope remains narrow and predictable.

Custom implementations

A custom system provides autonomy over data flow, inference logic, and storage. It is appropriate when data residency or regulatory obligations require clear control boundaries, or when workflows depend on proprietary internal systems. This approach demands ongoing ownership, not one-time development.

Hybrid with retrieval-augmented generation

This approach has become the most durable pattern in enterprise settings. Instead of expecting the model to “know” the domain, the chatbot retrieves relevant internal information at query time and generates responses grounded in that context.
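The retrieve-then-generate flow can be sketched in a few lines. This is a toy under stated assumptions: the two documents are invented sample data, and word overlap stands in for the embedding similarity a production vector index would use.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query a vector index.
DOCUMENTS = {
    "returns": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (toy stand-in for similarity search)."""
    q = tokenize(query)
    scored = sorted(
        DOCUMENTS.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,
    )
    return [doc_id for doc_id, _ in scored[:top_k]]


def build_prompt(query: str) -> str:
    """Assemble a prompt that tells the model to answer only from retrieved text."""
    context = "\n".join(DOCUMENTS[d] for d in retrieve(query))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

The prompt returned by `build_prompt` would then be passed to whichever model the deployment uses; the grounding instruction and the retrieved context travel with every request.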

A chatbot that only responds in conversation mode provides limited value. A chatbot becomes operationally meaningful when it can take action inside existing business applications. This requires reliable API-level integration, structured access rules, and consistent identity handling.

Typical integration points include:

CRM systems (e.g., Salesforce, HubSpot) to retrieve account context and update records.

ITSM platforms (e.g., ServiceNow, Jira Service Management) to create or update service tickets and support workflows.

Contact center platforms (CCaaS) to route conversations and transition seamlessly to human agents with full context.

Enterprise data lakes or document repositories for retrieval grounding.

Observability and logging systems to track performance, detect anomalies, and support audits.

If the chatbot can perform only the actions that would be manually available to a user with equivalent access rights, the integration boundary is correct.

Conversational systems interact with personal data, internal knowledge, and occasionally operational tools. Key architectural safeguards include:

Inference, retrieval, and workflow execution layers should be separated. If one layer fails or behaves unexpectedly, the others must remain contained.

Filtering, masking, and explicit rejection rules prevent unintended capture or exposure of personal or regulated information. Logs used for analysis must be anonymized before storage.

Authentication should run only through centrally managed identity systems, not through stored keys or static credentials embedded in code.

Load controls prevent cascading failures and ensure the system remains responsive during peak usage.
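As an illustration of the masking safeguard in the list above, the sketch below redacts email addresses and phone-like numbers before text is logged. The two patterns are simplified assumptions, not a complete PII detector; production systems typically rely on a dedicated data-loss prevention service.

```python
import re

# Illustrative patterns only; they will miss many real-world PII formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(mask_pii("Reach me at jane.doe@example.com or +1 415 555 0100."))
# prints: Reach me at [EMAIL] or [PHONE].
```

Applying the function at the logging boundary, before anything is written to storage, keeps raw identifiers out of analysis logs even when users volunteer them.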

What governance is needed for a chatbot?

Governance provides the structure that keeps a chatbot reliable as it scales. Without clear ownership and decision boundaries, conversational systems tend to fragment: different teams update prompts independently, data sources drift out of sync, and safety risks accumulate quietly.

Domain ownership must be explicit. A data steward governs knowledge sources and updates; a security owner controls access and encryption; a response quality owner oversees evaluation pipelines; and a productivity owner manages KPI interpretation. Prompts and agent policies should follow version control with review and approval workflows. Interaction logs are stored for 12–24 months with PII masked to maintain auditability without compromising privacy.

Best practice 1: Roles and responsibilities

LLM governance begins with assigning responsibility for how the system behaves, evolves, and is supervised. The structure does not need to be complex, but it must be explicit.

Product owner: Responsible for defining the chatbot’s purpose, prioritizing its scope, and aligning it with operational needs. The product owner ensures changes reflect real user demand, not assumptions.

AI lead: Oversees model behavior, evaluation pipelines, and technical decisions. This role ensures the chatbot performs predictably, remains within guardrails, and is improved through measurable iteration rather than ad-hoc adjustments.

Data steward: Owns the quality and integrity of the knowledge the chatbot relies on. The data steward controls which sources are authoritative, how content is updated, and how retention, masking, and access rules are enforced.

Security: Defines access controls, auditing requirements, and privacy boundaries. This role ensures the chatbot does not expose or learn from data it should not have access to, and that its operation aligns with legal and regulatory expectations.

Best practice 2: Versioning and incident handling

A chatbot is only as reliable as the knowledge it draws from. Governance requires a single source of truth for content. When multiple teams maintain their own knowledge documents, inconsistency appears in responses. Establishing a central knowledge repository and a routine review cycle prevents this drift.

Prompt management also requires discipline. Prompts and behavioral instructions should be versioned, reviewed, tested against evaluation sets, and rolled out through a structured release process. Uncontrolled prompt editing is one of the most common causes of unpredictable system behavior.

Incident management policies define what happens when the chatbot cannot answer, behaves incorrectly, or receives a request outside its scope. The escalation path must be designed, tested, and maintained so that users are not left in dead-end conversations.

Best practice 3: Safety, fairness, and human oversight

Chatbots need safeguards to prevent them from reinforcing bias, producing misleading guidance, or generating inappropriate responses in the context in which they are used.

Bias and fairness evaluation should occur on a recurring schedule using representative conversation samples. Responsible AI focuses on preventing harmful, speculative, or directive responses in sensitive situations. In many domains, the most appropriate course of action is to defer.

Best practice 4: Change control

A structured approval workflow requires that every change pass through review, offline testing, and regression evaluation. This prevents well-intended improvements from causing unintended degradations elsewhere in the system. If a new configuration produces unexpected interactions, the system needs a fast path to revert to a known stable state.

Governance does not slow progress; it keeps progress stable. It allows a chatbot to expand in scope without losing reliability, trust, or operational clarity.

The KPI framework for AI chatbot strategy

AI chatbot strategy is only effective if its performance can be measured with clarity and acted upon consistently. Chatbot KPIs should not be collected solely for reporting; they should also guide decisions on refinement, escalation rules, integration priorities, and the scope of future automation.

A recent McKinsey report shows that over 80% of organizations adopting generative AI have not yet translated these initiatives into measurable financial results. The determining factor is not model capability, but whether the deployment is tied to clear objectives, owned knowledge, and accountable improvement processes.

Performance must also be evaluated on safety and response quality. Groundedness, hallucination rate, and harmful output checks require the same level of monitoring rigor as business-impact metrics.

Core chatbot evaluation metrics and benchmarks

| Category | KPI | Definition | 90-day target | Context |
|---|---|---|---|---|
| Operational | Containment rate | % of sessions resolved without human intervention | 35–55% | Indicates whether the system is taking meaningful workload off the support function. |
| Quality | First contact resolution | % of interactions fully resolved in one session | 60–75% | Higher FCR means fewer callbacks, handoffs, and repeated effort. |
| Efficiency | AHT impact | Change in average handling time where agents are involved | -15% to -25% | Reduction comes from cleaner context transfer and agent-assist, not agent replacement. |
| Financial | Cost per contact | Total cost of support / number of interactions | -20% to -40% | Cost improvements materialize as stabilizing operational load, not immediate headcount cuts. |
| Human Support | Agent assist adoption | % of eligible conversations where the bot provides useful support | 70%+ | Reflects whether the system complements human work rather than bypassing it. |
| Risk | Hallucination rate | % of responses flagged as incorrect or speculative | less than 1% | Critical for reliability in regulated or high-trust workflows. |
| Engagement | CSAT / NPS delta | Difference between bot-handled and agent-handled interactions | ≥0 | The goal is parity first; improvement follows once trust is earned. |
| Business | Conversion lift | Increase in conversion when chatbot assists in the journey | +5–12% | Relevant in commerce and transactional flows where guidance influences decisions. |
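Several of the KPIs in the table reduce to simple ratios over session records. The sketch below shows one possible computation; the `Session` fields and the sample data are hypothetical, and real deployments will have their own definitions of "resolved" and "flagged".

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical per-session record; field names are illustrative."""
    escalated: bool          # handed off to a human agent
    resolved: bool           # issue fully resolved within this session
    responses: int           # number of bot responses in the session
    flagged_responses: int   # responses flagged as incorrect or speculative


def containment_rate(sessions: list[Session]) -> float:
    """Share of sessions resolved without human intervention."""
    contained = sum(1 for s in sessions if s.resolved and not s.escalated)
    return contained / len(sessions)


def first_contact_resolution(sessions: list[Session]) -> float:
    """Share of sessions fully resolved in one session, with or without an agent."""
    return sum(1 for s in sessions if s.resolved) / len(sessions)


def hallucination_rate(sessions: list[Session]) -> float:
    """Share of all bot responses that were flagged."""
    total = sum(s.responses for s in sessions)
    flagged = sum(s.flagged_responses for s in sessions)
    return flagged / total


SAMPLE = [
    Session(escalated=False, resolved=True, responses=3, flagged_responses=0),
    Session(escalated=True, resolved=True, responses=5, flagged_responses=1),
    Session(escalated=False, resolved=True, responses=2, flagged_responses=0),
    Session(escalated=True, resolved=False, responses=10, flagged_responses=0),
]
```

On this invented sample, containment is 0.5, first contact resolution is 0.75, and the hallucination rate is 0.05, which would be well above the "less than 1%" target in the table.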

Operational KPIs measure outcomes, but not all outcomes are easily quantifiable, and response quality metrics determine why those outcomes occur. Three dimensions are essential:

Groundedness. The degree to which responses are supported by verifiable internal data or retrieved context.

Helpfulness. Whether the response meaningfully advances the user toward their goal. Helpfulness is best evaluated on representative conversation samples, not scores alone.

Safety. Ensures outputs remain within acceptable behavioral and regulatory boundaries.

To maintain consistency over time, the system requires stable evaluation datasets rather than ad hoc manual checks. Two dataset types are standard:

Challenge/evaluation sets: Curated examples representing real usage, ambiguous phrasing, and known edge cases. Used for offline evaluation before release.

Golden datasets: High-quality conversation examples that reflect correct behavior. Used for training and regression testing.
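A golden dataset can act as a release gate. The sketch below is a simplified assumption of how that might look: `respond` is any callable that maps a question to an answer, the substring check is a crude stand-in for a real grader, and the 0.95 threshold is illustrative.

```python
from typing import Callable


def regression_pass_rate(
    respond: Callable[[str], str], golden: list[tuple[str, str]]
) -> float:
    """Fraction of golden examples whose expected phrase appears in the response."""
    hits = sum(
        1
        for question, expected in golden
        if expected.lower() in respond(question).lower()
    )
    return hits / len(golden)


def release_allowed(
    respond: Callable[[str], str],
    golden: list[tuple[str, str]],
    min_pass_rate: float = 0.95,
) -> bool:
    """Block a release when the candidate falls below the required pass rate."""
    return regression_pass_rate(respond, golden) >= min_pass_rate
```

Running the same gate against every prompt or retrieval change gives a consistent baseline, which is the point of keeping the dataset stable.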

How to observe the chatbot performance over time

The foundation of observability is a well-defined event model. At a minimum, every interaction should record the detected intent, the retrieval source (if used), the model response, and the interaction outcome. Observing how intents shift over time shows where additional knowledge, workflows, or escalation logic is needed.
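The minimal event model described above could be represented as follows. The class name, field names, and outcome labels are assumptions for illustration; the source only specifies which four pieces of information each interaction should record.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InteractionEvent:
    intent: str                      # detected intent
    retrieval_source: Optional[str]  # document or index used; None if no retrieval
    response: str                    # model response text
    outcome: str                     # e.g. "resolved", "escalated", "fallback" (assumed labels)


def intent_share(events: list[InteractionEvent]) -> dict[str, float]:
    """Share of interactions per intent, useful for spotting shifts between periods."""
    counts = Counter(e.intent for e in events)
    total = sum(counts.values())
    return {intent: n / total for intent, n in counts.items()}
```

Comparing the output of `intent_share` across weeks is one simple way to see which intents are growing and may need new knowledge or workflows.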

Before release, offline evaluation allows teams to test expected scenarios, edge cases, and failure conditions. After deployment, evaluation shifts to live observation. Online A/B tests allow teams to compare response strategies, prompt versions, and retrieval parameters directly in production. Structured A/B cycles prevent unintentional regressions, which are common when prompts or knowledge sources are revised without controlled measurement.

A sudden increase in fallback rate is often the earliest signal of a problem. Alerts should be configured to detect this behavior immediately. Similarly, systems handling user-generated content require monitoring for personal data exposure. PII can enter a conversation unintentionally, and without appropriate controls, it may be stored or echoed back. Automated data-loss prevention filters, coupled with input sanitization and anonymization of stored logs, limit this risk.
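A fallback alert can be as simple as a rolling window over recent interactions. The sketch below is one possible shape; the class name, window size, and threshold are assumptions and would need tuning against real traffic.

```python
from collections import deque


class FallbackMonitor:
    """Tracks the fallback rate over the most recent `window` interactions."""

    def __init__(self, window: int = 100, threshold: float = 0.2):
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def record(self, is_fallback: bool) -> bool:
        """Record one interaction; return True when an alert should fire."""
        self.outcomes.append(is_fallback)
        # Wait for a full window so a single early fallback does not trigger an alert.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        rate = sum(self.outcomes) / len(self.outcomes)
        return window_full and rate > self.threshold
```

Waiting for a full window trades a short detection delay for fewer false alarms at startup; a deployment with low traffic might prefer a time-based window instead.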

What is the cost structure and payback curve of the AI chatbot strategy?

The economic impact becomes clear only when ongoing consumption, maintenance, and workforce effects are accounted for. A structured view of Total Cost of Ownership (TCO) and Return on Investment (ROI) helps determine whether the system is financially sustainable and when it is reasonable to expand its scope.

Total Cost of Ownership

The most significant ongoing costs are typically not development, but model usage and operations. Consumption-based pricing for large language models means that volume, context window size, and retrieval complexity directly affect recurring spend. These costs are manageable when designed intentionally, but they can escalate quickly when the system is allowed to grow without boundaries.

A realistic TCO model usually includes:

Model access: Costs for generating responses (tokens in, tokens out). Prices vary across providers, but the dominant factor is volume.

Vector search: Systems using RAG store internal documents as embeddings and query them for grounding.

Observability: A production chatbot must be monitored like any other service: latency, availability, escalation rates, and response correctness require continuous review.

Operational support: Even a well-automated system requires human stewardship.

Chatbot ROI

The financial return comes from changes in how work is distributed. When the chatbot resolves routine inquiries, fewer agent hours are required. A lower number does not always mean fewer staff; in many cases, it means less overtime, fewer backlog spikes, and more consistent queue handling. Cost reductions per contact typically account for the largest share of financial benefits.

When workflows are structured clearly, users require fewer repeat contacts, which reduces total contact volume, not just frontline workload. In transactional contexts, chatbots support product discovery, account guidance, and checkout guidance. If you want to assess the impact before scaling further, you can model it directly using your own volumes and cost baselines.

Example scenario

Consider an organization handling 1,000,000 support interactions per year, with an average fully loaded human-handled contact cost of $12. If the chatbot reliably automates 70% of inquiries, and the price per automated interaction (including inference, retrieval, and monitoring overhead) averages $4, the annual economics look as follows:

Baseline human-only cost: 1,000,000 × $12 = $12,000,000 / year

Post-deployment cost:

700,000 automated × $4 = $2,800,000

300,000 human-handled × $12 = $3,600,000

New total annual cost: $6,400,000

Annual savings before TCO: $12,000,000 − $6,400,000 = $5,600,000
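The same arithmetic can be wrapped in a small function so the scenario can be rerun with different volumes and automation rates. The function name and parameters are illustrative; the inputs in the usage note come from the scenario above.

```python
def annual_savings(
    contacts: int, human_cost: float, bot_cost: float, automation_rate: float
) -> dict[str, float]:
    """Compare a human-only baseline with a mixed bot and human cost model."""
    automated = round(contacts * automation_rate)
    human_handled = contacts - automated
    baseline = contacts * human_cost
    new_total = automated * bot_cost + human_handled * human_cost
    return {
        "baseline": baseline,
        "new_total": new_total,
        "savings": baseline - new_total,
    }


result = annual_savings(1_000_000, human_cost=12, bot_cost=4, automation_rate=0.70)
print(result["savings"])  # prints: 5600000
```

With the scenario inputs, the function reproduces the $5,600,000 figure. Lowering `automation_rate` to 0.40 with the same costs gives $3,200,000, which shows how sensitive the result is to the automation assumption.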

Practical roadmap for building an enterprise chatbot strategy

Many pilots fail because they are deployed broadly before reliability and measurement foundations are in place. The disconnect is visible at the macro level: according to McKinsey, 80%+ of companies report no meaningful earnings impact from GenAI, even though the technology has the potential to contribute 0.1–0.6 percentage points to annual productivity growth on its own.

Stage 1: Pilot and guardrails

The purpose of the pilot is to validate whether the chatbot can perform reliably in one or two real workflows. This phase works best when the scope is intentionally narrow. Selecting three to five high-frequency intents provides enough volume to measure effectiveness while keeping complexity manageable. These are usually repetitive, procedural tasks that require limited contextual interpretation.

During the pilot, guardrails should be established early. The chatbot must have a defined escalation path to a human and disclose that it is automated. The knowledge base should be carefully reviewed before use; errors in its content make the model appear less trustworthy than it is. This phase should also include a basic evaluation process that tracks the number of interactions completed without human assistance, where and why escalations occur, and how often the system fails to recognize user intent.

The pilot stage is successful when the chatbot demonstrates consistent behavior within a limited scope and when there is clarity on what improvements would allow for expansion.

Stage 2: Integration and expansion

Once reliability is established, the system’s usefulness depends on its integration with operational systems. At this stage, the chatbot should start interacting with CRM, IT service management, and internal business applications rather than simply retrieving information. Integration allows the chatbot to take action, such as creating a ticket, checking an order status, updating account details, or generating a report.

Stage 3: Advanced and proactive capabilities

Once the chatbot is well-integrated and operational processes are stable, the system can begin to support more advanced interaction patterns. More advanced forms of automation should be introduced gradually and tested in controlled environments. These capabilities require strong governance to prevent unintended system behavior and to stay within compliance obligations.

If you are at the stage of confirming feasibility or shaping the first controlled deployment, it may be helpful to structure a focused pilot around three to five high-impact workflows. Ready to pilot in the next 90 days? We offer a short discovery workshop led by an AI architect and solution lead to help define scope, guardrails, and evaluation criteria.

What are the operating models of chatbot strategies?

Role assignment and decision boundaries (RACI)

Clear ownership prevents drift, inconsistent updates, and uncontrolled variations of the system. A RACI structure helps formalize who executes changes, who approves them, who should be consulted, and who simply needs visibility. Four domains require explicit responsibility boundaries:

Releases should have a designated owner who coordinates deployment timing, testing, and rollback procedures.

Prompt and behavior changes should be handled by someone who understands language and context.

Data governance remains under the authority of the data team.

Security controls belong to the security and compliance functions, not development.

Service-level agreement (SLA)

Chatbots in production environments require the same reliability expectations as any customer-facing service. Availability, latency, and response accuracy should be defined before deployment, not after issues arise.

Availability targets generally align with the reliability of adjacent systems. For most organizations, 99.9% uptime is a reasonable baseline.

Latency expectations should reflect conversational norms. When responses exceed a second, the interaction begins to feel mechanical or obstructive.

Response quality is measured through grounded metrics. Self-service resolution rate, escalation rate, and the success rate of recurring workflows are more meaningful than generic satisfaction scores. These chatbot evaluation metrics should be reviewed weekly during early deployment and later incorporated into standard operational reviews.
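An availability target is easier to reason about when converted into a downtime budget. The helper below is a small illustrative calculation; the function name and the 30-day default period are assumptions.

```python
def downtime_budget_minutes(availability_pct: float, period_days: int = 30) -> float:
    """Minutes of allowed downtime in a period for a given availability target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


monthly = downtime_budget_minutes(99.9)                    # about 43.2 minutes
yearly_hours = downtime_budget_minutes(99.9, 365) / 60     # about 8.76 hours
```

For the 99.9% baseline mentioned above, this works out to roughly 43 minutes per 30-day month, or about 8.76 hours per year, which must cover planned maintenance as well as incidents.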

Knowledge lifecycle management

A chatbot’s reliability depends on the quality of the information it uses. Even a strong model produces weak output if the knowledge base is incomplete, outdated, or poorly structured. Knowledge management, therefore, becomes a continuous operational responsibility, not a one-time setup task.

Indexing begins with selecting the right sources.

Updating happens as reality changes. New features launch, processes shift, pricing changes, and internal terminology evolves.

Archiving ensures that outdated guidance does not remain accessible.

How to manage risks when designing enterprise chatbot strategy

Data leakage

Chatbots often act as a front door to data that would otherwise be accessed only through authenticated systems. If the model, the retrieval layer, or the integration layer is not well-controlled, sensitive records may appear in responses, be logged unintentionally, or move into third-party systems with weaker governance.

Mitigation begins with restricting what the system can see, not just what it can say. Access to internal data sources should be filtered through a retrieval layer designed for least-privilege access. Sensitive data must be masked before reaching the model. Audit logs should be retained for a defined period (commonly 12–24 months) to support internal reviews and regulatory requirements. The principle is straightforward: the chatbot should only access information that the end user is already entitled to see.
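The entitlement principle can be enforced in the retrieval layer by filtering candidate documents against the requesting user's groups before anything reaches the model. The sketch below assumes a hypothetical `Document` type with an access list attached at indexing time; real systems would usually read entitlements from the identity provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical ACL attached at indexing time


def visible_documents(docs: list[Document], user_groups: set[str]) -> list[Document]:
    """Keep only documents the requesting user is already entitled to see."""
    return [d for d in docs if d.allowed_groups & user_groups]
```

Because the filter runs before generation, a document the user cannot open never enters the prompt, so it cannot leak into a response or a log.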

Reliability of responses

Large language models will generate an answer when asked, even when the correct answer is “not known” or “not available.” The risk arises when output is accepted as fact without verification. In customer support, this can lead to misinformation about products or processes. A structured approach to grounding responses is required. Retrieval-augmented generation, controlled response templates, and answerability checks reduce the likelihood of fabricated content.
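An answerability check can be approximated by refusing to answer when no retrieved passage is relevant enough. In the sketch below, the score scale, the 0.6 threshold, and the deferral wording are all assumptions for illustration.

```python
DEFER_MESSAGE = (
    "I don't have verified information on that. "
    "Let me connect you with a colleague."
)


def answer_or_defer(retrieved: list[tuple[str, float]], min_score: float = 0.6) -> str:
    """Return the best-supported passage, or defer when nothing clears the threshold.

    `retrieved` holds (passage, relevance_score) pairs from the retrieval layer.
    """
    supported = [(p, s) for p, s in retrieved if s >= min_score]
    if not supported:
        return DEFER_MESSAGE
    best_passage, _ = max(supported, key=lambda pair: pair[1])
    return best_passage
```

In a full pipeline the selected passage would be passed to the model as grounding context; the important part is that the deferral path is explicit and testable.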

Fragmented deployments

As conversational and agentic tooling becomes easier to configure, there is a real risk that teams will create parallel chatbots and micro-agents without oversight. These duplications are rarely intentional; they arise when departments solve immediate problems. Over time, this leads to inconsistent answers to the same question, differing data sources, conflicting policy interpretations, and unclear ownership.

Preventing this requires more than a central policy document. It requires a clear operating model: who is allowed to propose new conversational workflows, how they are reviewed, how training data is shared, and how decommissioning happens.

Response planning

No matter how well-designed the system is, there must be a defined procedure for stopping or reversing a deployment when something behaves in an unexpected or harmful way. A kill-switch is not dramatic; it is a standard operational safety measure. The ability to immediately disable an agent or revert to a previous configuration reduces risk far more effectively than attempting to monitor and correct behavior in motion.

Rollback should be treated as a controlled release process. Each new version of prompts, workflows, or retrieval logic should be deployed with the option to revert without downtime. Monitoring should track not only performance metrics but also anomaly signals such as sudden escalation rates, unexpected response patterns, or queries that fall outside the intended domain.
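A kill switch and rollback path can be modeled as a small registry of released configurations. The class below is a hypothetical in-memory sketch; a production system would persist versions and coordinate the switch across instances.

```python
class ConfigRegistry:
    """Keeps released configuration versions so the active one can be reverted."""

    def __init__(self, initial: dict):
        self._history = [initial]
        self.enabled = True  # kill switch state

    @property
    def active(self) -> dict:
        """The most recently released configuration."""
        return self._history[-1]

    def release(self, config: dict) -> None:
        """Make a new configuration active while keeping earlier versions."""
        self._history.append(config)

    def rollback(self) -> dict:
        """Revert to the previous version; the first version is never removed."""
        if len(self._history) > 1:
            self._history.pop()
        return self.active

    def kill(self) -> None:
        """Disable the agent entirely, independent of which version is active."""
        self.enabled = False
```

Keeping the kill switch separate from version history means the agent can be disabled immediately while the team decides which version to restore.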

How chatbots perform in real workflows: Practical examples from Acropolium

Real progress with chatbots usually begins in specific, high-volume workflows where the operational cost of human handling is significant and information flows are repeatable. The following examples illustrate how we approached it.

Construction & field operations

A global construction and facility maintenance provider managed a large fleet of equipment across multiple locations. Asset condition data was fragmented across spreadsheets, phone calls, and manual reports, resulting in delays, duplicate work orders, and reactive maintenance.

The organization needed a conversational interface that would allow field teams to report issues, retrieve equipment maintenance histories, and request service without navigating complex internal systems. Consistency and auditability were essential because maintenance decisions directly affected project schedules and safety compliance.

A chatbot was integrated with the company’s asset management database and service ticketing workflow. The system was designed to:

Accept structured issue reports from field workers via mobile chat

Retrieve equipment history and repair records on request

Automatically generate and route maintenance tickets to the appropriate department

Log conversations and ticket context for compliance review

Within the first six months, equipment issue reporting time decreased by 38%, while duplicate service tickets fell by 22% due to standardized reporting inputs. Field teams spent less time searching for status information, and maintenance planners gained clearer visibility into recurring equipment failures. The measurable gain was higher operational clarity and fewer costly delays caused by missing information.

Customer support & live chat optimization

A software company with a high volume of inbound support relied heavily on human agents to handle repetitive requests, such as login issues, account access, feature instructions, and basic troubleshooting. The average handling time for these routine cases was high relative to complexity.

The goal was not replacement, but more effective distribution of support work. The company needed a chatbot that could reliably resolve predictable requests, while handing complex issues to agents with full context to avoid repetition and frustration.

The chatbot was embedded into the live chat interface and integrated with the knowledge base and authentication services. Key design decisions included:

Retrieval-based responses for accuracy and consistency

Escalation rules with conversation transcripts for seamless handover

Continuous monitoring of fallback cases to refine intents and content

Within three months, the chatbot handled approximately 42% of inbound chat sessions without requiring human intervention. The average handling time for the remaining agent-handled cases decreased by 17% because agents received a complete conversation context and did not need to re-ask troubleshooting questions.

Restaurant & hospitality service interactions

A mid-sized global restaurant chain was receiving a high volume of messages across multiple regional channels: online menu inquiries, order status checks, reservation requests, and promotional questions.

The company needed a conversational system that could provide consistent, brand-aligned responses in multiple languages while integrating with ordering and reservation systems. A significant requirement was limiting hallucination risk; menu items, pricing, and opening hours needed to be presented exactly as defined.

A domain-controlled chatbot was deployed with the following configuration:

Structured menu and restaurant data stored in a central knowledge index

Integration with POS and reservation APIs for real-time availability

Guardrails to ensure the system only responds using source-of-truth data

Escalation to staff only for custom dietary or event-related requests

Response time for customer messages decreased from an average of 12 minutes to under 30 seconds. Human staff involvement in routine interactions dropped by 55%, allowing teams to focus on kitchen and dine-in service rather than chat handling.

Get started with a chatbot strategy for your enterprise

The priority for chatbots now is to make systems dependable, measurable, and aligned with how the organization actually works. In practice, this means being intentional about what the chatbot is expected to achieve, preparing the data and knowledge it relies on, and choosing an architecture that can adapt over time rather than locking into a single provider. Governance matters just as much as performance. When evaluation, review, and decision-making processes are precise, chatbots become easier to maintain, improve, and trust.

If your pilot works but scaling still feels uncertain, that is a sign that the strategy and operating model need to be clarified before expanding. If you are at that stage, we can help you think it through. Contact Acropolium to schedule a session. Let’s define a path that supports everyday work, earns user trust, and remains manageable over time.
