- Custom Software Development

- Bespoke software

- Cloud solutions

- Automation

- ML & AI

Development of a quality monitoring tool and data quality software for automated processing of large chunks of sensitive data with real-time monitoring capabilities. AI-powered data quality improvement for reliable collection of data and data-driven insights with enhanced processing quality through automation of manual entries.

client

NDA

Estonia

50-100 employees

Our client is a trusted partner for entrepreneurs seeking cutting-edge finance, investment, and data management solutions. As a leading player in the fintech industry, the company handles various data sources, encompassing customer transactions, market data, and internal operations.

request background

Automated Data Profiling Software & Data Quality Tool Development

Having faced major challenges concerning data quality, collection, and processing time, the company stepped toward an automated data profiling solution to remain competitive.

Inconsistent and inaccurate data from various sources led to data quality issues that compromised the reliability of analytical insights. To cope, the company relied on traditional manual profiling. That approach was time-consuming and error-prone, and it slowed down the overall data ingestion pipeline.

For that reason, our client decided to leverage automated data profiling, requesting tool development from scratch. The tool also had to serve as data quality software. It was supposed to automatically collect, categorize, and allocate data while ensuring its quality and potential for valuable and consistent insights.

challenge

A Scalable Data Profiling Tool Eliminating Manual Processing through AI Automation

Our client reported regular bottlenecks and delays in data processing whenever incoming data volumes grew. Each time, the company had to spend additional money to resolve the disruptions immediately. It became clear that the solution had to be scalable and flexible enough to handle fluctuating amounts of data.

Since the product had to serve as both a data quality tool and a data profiling tool, it required AI algorithms that work together to sort data and extract insights. Manual effort was the primary cause of the delays, so our team had to automate all datasets across the entire data handling cycle. Delayed data availability and inconsistent quality had significantly affected the clarity of the client’s analytics and increased overall operational costs.

goals

- Improve data quality by leveraging AI-automated data profiling, significantly enhancing incoming data's accuracy, consistency, and reliability.

- Optimize the data ingestion process, streamlining the pipeline for more efficient data processing and assimilation from diverse sources.

- Implement real-time quality monitoring features to promptly identify and address data quality issues, ensuring the data quality software prevents the spread of inaccurate data.

- Provide seamless scalability of the integrated AI data profiling solution to handle increasing data volumes while maintaining performance and efficiency.

- Reduce costs associated with data management by minimizing manual efforts in data profiling and mitigating the impact of poor data quality.

solution

Artificial Intelligence Data Profiling Solution and Data Quality Monitoring Tool

Apache Spark, Apache NiFi, AWS, Tableau, Power BI, DBSCAN, SVM

11 months

7 specialists

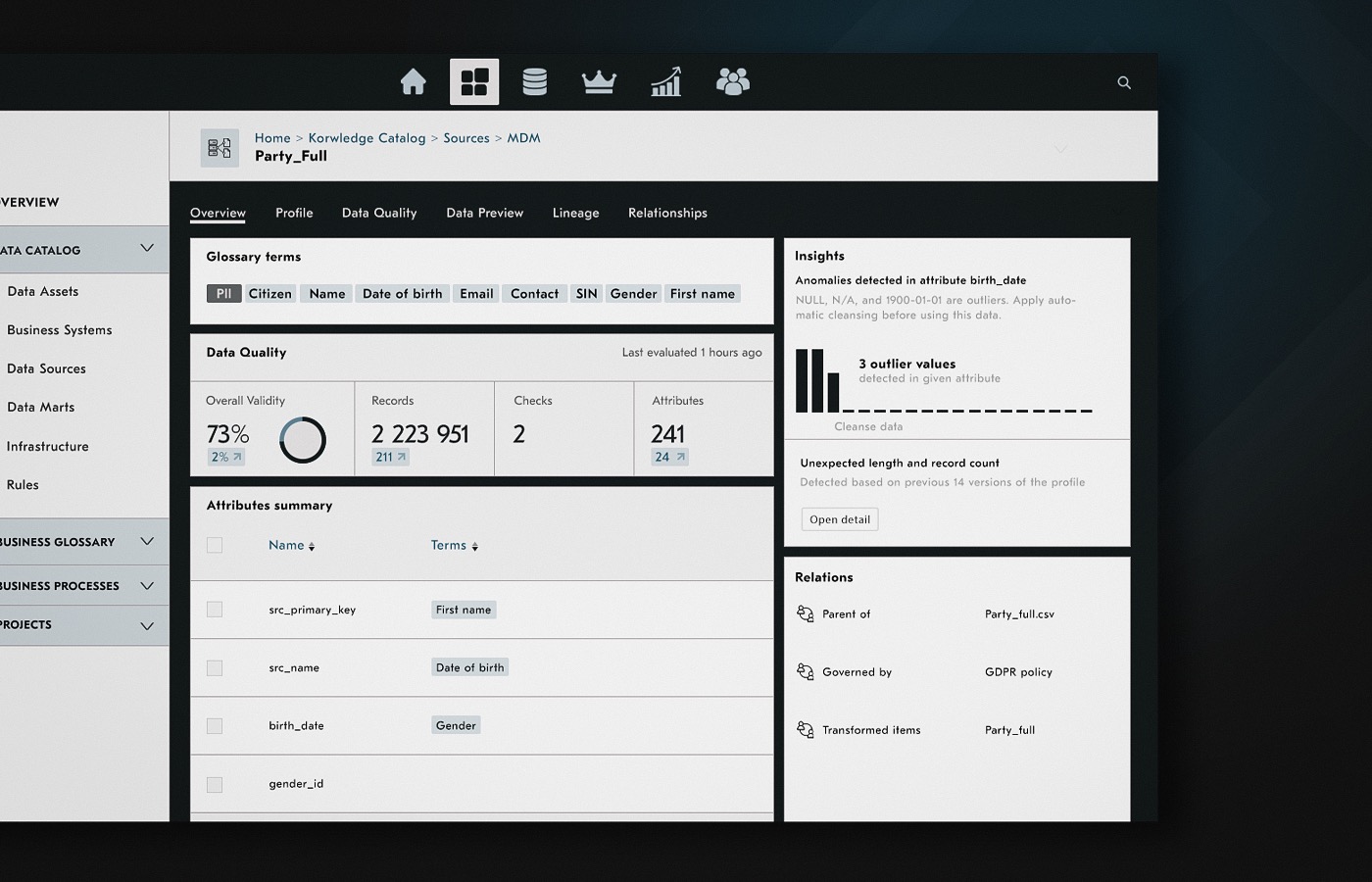

The Acropolium team developed an AI-powered automated data profiling tool to address the above-mentioned challenges. First, our developers evaluated the existing data collection patterns to identify which artificial intelligence algorithms the data profiling solution required.

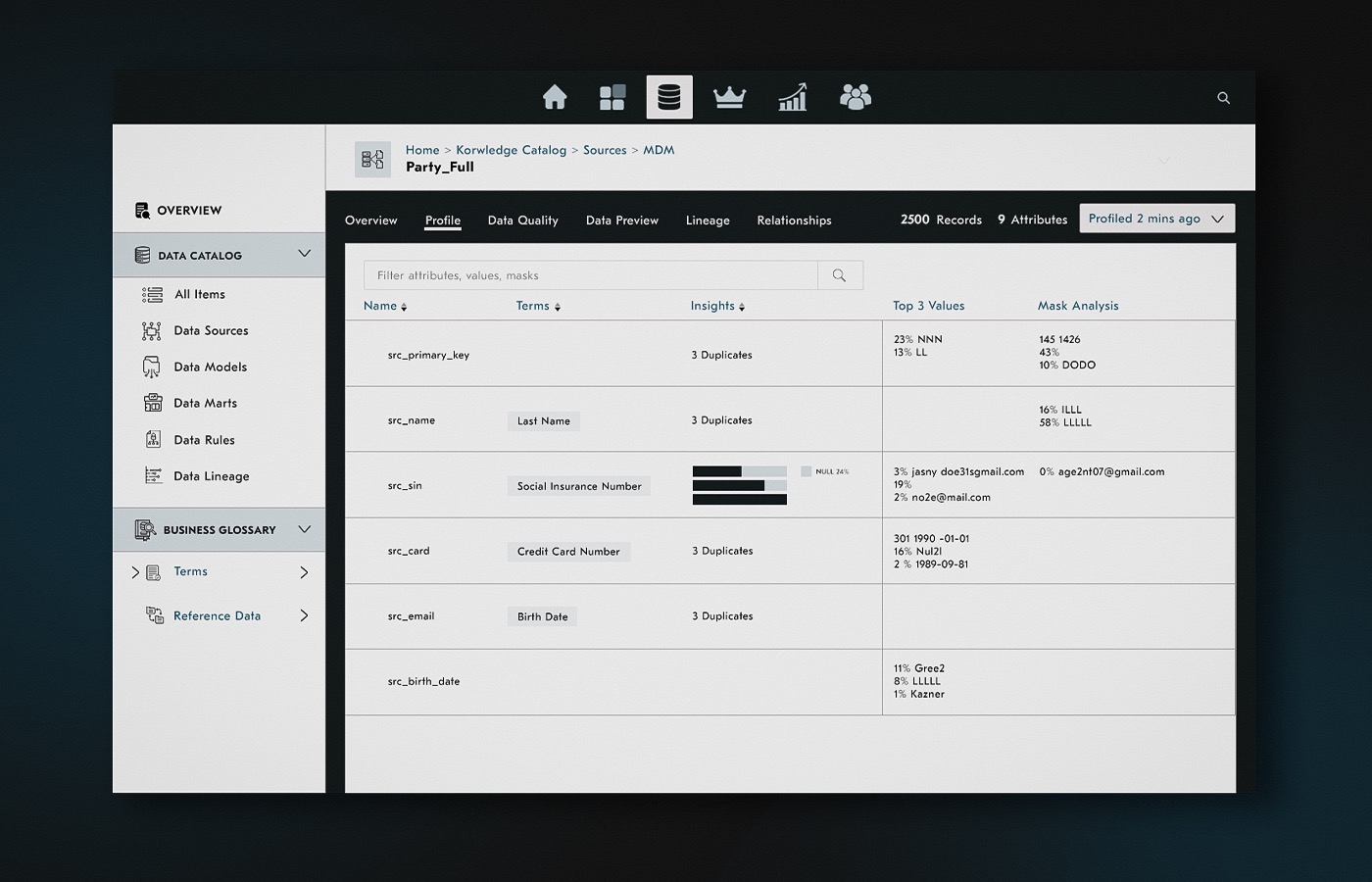

We employed advanced machine learning data classification and clustering algorithms to automatically analyze and comprehend incoming data. Thus, the data profiling tool could independently recognize data types, patterns, and anomalies.

This way, the product offers a comprehensive overview of incoming data. As a result, automation reduced reliance on manual profiling and expedited the data profiling process.
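To make the clustering idea concrete, here is a minimal sketch of DBSCAN, one of the algorithms listed in the project stack, written in plain Python. It is an illustration only, not the production code: the sample points, the `eps` radius, and the `min_pts` threshold are hypothetical values. Records that do not belong to any dense group are labeled as noise, which is one common way to surface anomalies.

```python
from math import dist

NOISE = -1  # label for points that belong to no dense cluster


def dbscan(points, eps, min_pts):
    """Return one cluster label per point; NOISE marks likely anomalies."""
    labels = [None] * len(points)

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:  # core point: keep expanding
                queue.extend(reachable)
        cluster += 1
    return labels


# Hypothetical, already-scaled feature pairs for nine incoming records.
records = [
    (1.0, 1.0), (1.1, 0.9), (0.9, 1.2), (1.2, 1.1),
    (5.0, 5.0), (5.1, 4.9), (4.9, 5.2), (5.2, 5.1),
    (9.0, 0.5),
]
labels = dbscan(records, eps=0.5, min_pts=3)
anomalies = [r for r, label in zip(records, labels) if label == NOISE]
print(labels, anomalies)
```

In this toy dataset the first eight records form two dense groups and the last record stands alone, so it is the only one reported as an anomaly. A production pipeline would more likely rely on a distributed or library implementation than on this quadratic-time loop.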

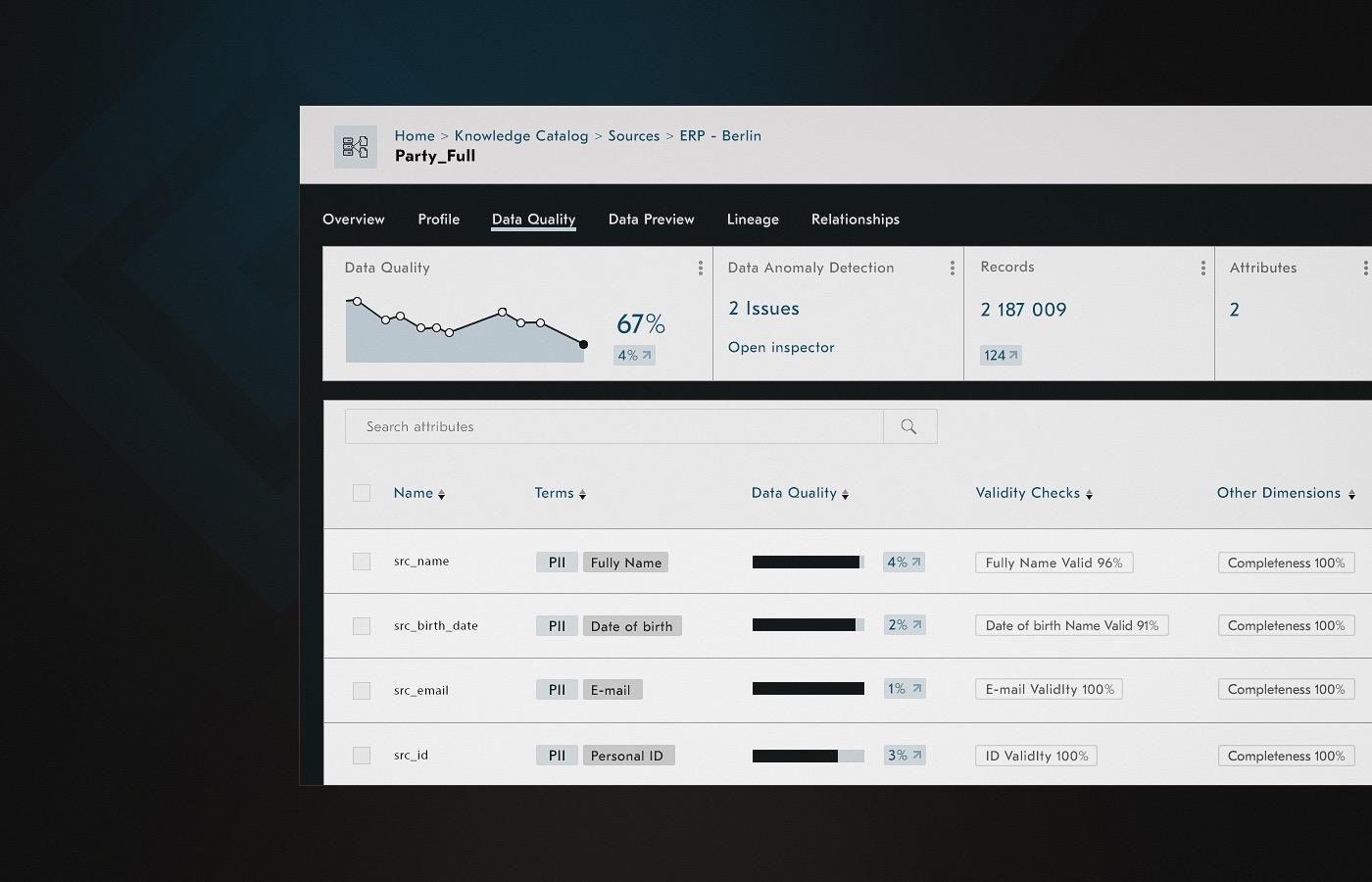

Paying close attention to real-time data quality monitoring, the development team established standardized, recurring processes and frameworks. This methodology made it possible to identify data quality issues and track them through dashboards. The tool can also automatically configure alerts that notify users of any changes the moment they occur.
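A threshold-based alert of this kind can be sketched as a sliding window over validation results. The class name `QualityMonitor`, the window size, and the error-rate threshold below are assumptions made for illustration; the source does not describe how the actual alerts are configured.

```python
from collections import deque


class QualityMonitor:
    """Tracks the error rate over the most recent records and raises alerts."""

    def __init__(self, window=100, max_error_rate=0.05, on_alert=print):
        self.results = deque(maxlen=window)  # True = valid, False = invalid
        self.max_error_rate = max_error_rate
        self.on_alert = on_alert  # hypothetical callback, e.g. a notifier

    def observe(self, record_is_valid):
        """Record one validation result and return the current error rate."""
        self.results.append(record_is_valid)
        error_rate = self.results.count(False) / len(self.results)
        if error_rate > self.max_error_rate:
            self.on_alert(
                f"error rate {error_rate:.0%} exceeds {self.max_error_rate:.0%}"
            )
        return error_rate


# Usage: collect alerts in a list instead of printing them.
alerts = []
monitor = QualityMonitor(window=4, max_error_rate=0.25, on_alert=alerts.append)
for is_valid in [True, True, False, True, False]:
    last_rate = monitor.observe(is_valid)
```

Passing the alert handler in as a callback keeps the sketch independent of any particular dashboard or notification channel.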

We designed the system to accommodate future growth and fluctuations in data inflow. The data profiling and data quality tool was structured for horizontal scalability, which enables the client to manage increasing data volumes seamlessly without compromising performance.

- Integration of real-time data quality monitoring tool capabilities allowed the immediate identification and flagging of data quality issues as they arose.

- The proactive ML-based approach facilitated prompt corrective actions, preventing the spread of inaccurate data throughout the system.

- As part of the scalable data quality tool and profiling software architecture, we included customizable features allowing the client to modify incorrect data detection settings. The product enables users to filter data in progress, preview rules, and conduct backtesting for configuring criteria.
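The rule preview and backtesting workflow mentioned above could look roughly like the following sketch. The `Rule` type, the `backtest` function, the `amount` field, and its limits are all hypothetical; they only illustrate the idea of checking a candidate rule against historical records before enabling it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """A named validity check applied to a single record."""
    name: str
    is_valid: Callable[[dict], bool]


def backtest(rule, historical_records, sample_size=3):
    """Apply a candidate rule to past records and summarize what it would flag."""
    flagged = [r for r in historical_records if not rule.is_valid(r)]
    return {
        "rule": rule.name,
        "checked": len(historical_records),
        "flagged": len(flagged),
        "sample": flagged[:sample_size],  # preview of flagged records
    }


# Hypothetical history and a candidate rule with adjustable limits.
history = [
    {"amount": 120.0},
    {"amount": -5.0},
    {"amount": 99999.0},
    {"amount": 40.0},
]
amount_in_range = Rule("amount_in_range", lambda r: 0 <= r["amount"] <= 10_000)
report = backtest(amount_in_range, history)
print(report)
```

Reviewing the flagged count and the sample before activating a rule helps a user judge whether its limits are too strict or too loose.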

outcome

Data quality automation translates into operational efficiency and cost savings

- The percentage of data errors and inconsistencies was reduced by 40%.

- The final data quality rate reached 95%.

- Overall data processing time was reduced by about 30%: a 1-terabyte dataset that initially took 12 hours to process now takes 8 hours on average.

- Real-time monitoring lowered the time to identify and address data quality issues to less than 1 hour.

- The client now processes and ingests up to 30 terabytes of data per day, a 200% improvement in scalability.

- Confidence in data-driven decision-making increased by 25%, reaching an average level of 95%.